Clusters

Overview

Infrastructure > Clusters is for creating and managing Kubernetes Clusters, Morpheus-managed Docker Clusters, KVM Clusters, or Cloud-specific Kubernetes services such as EKS, AKS and GKE.

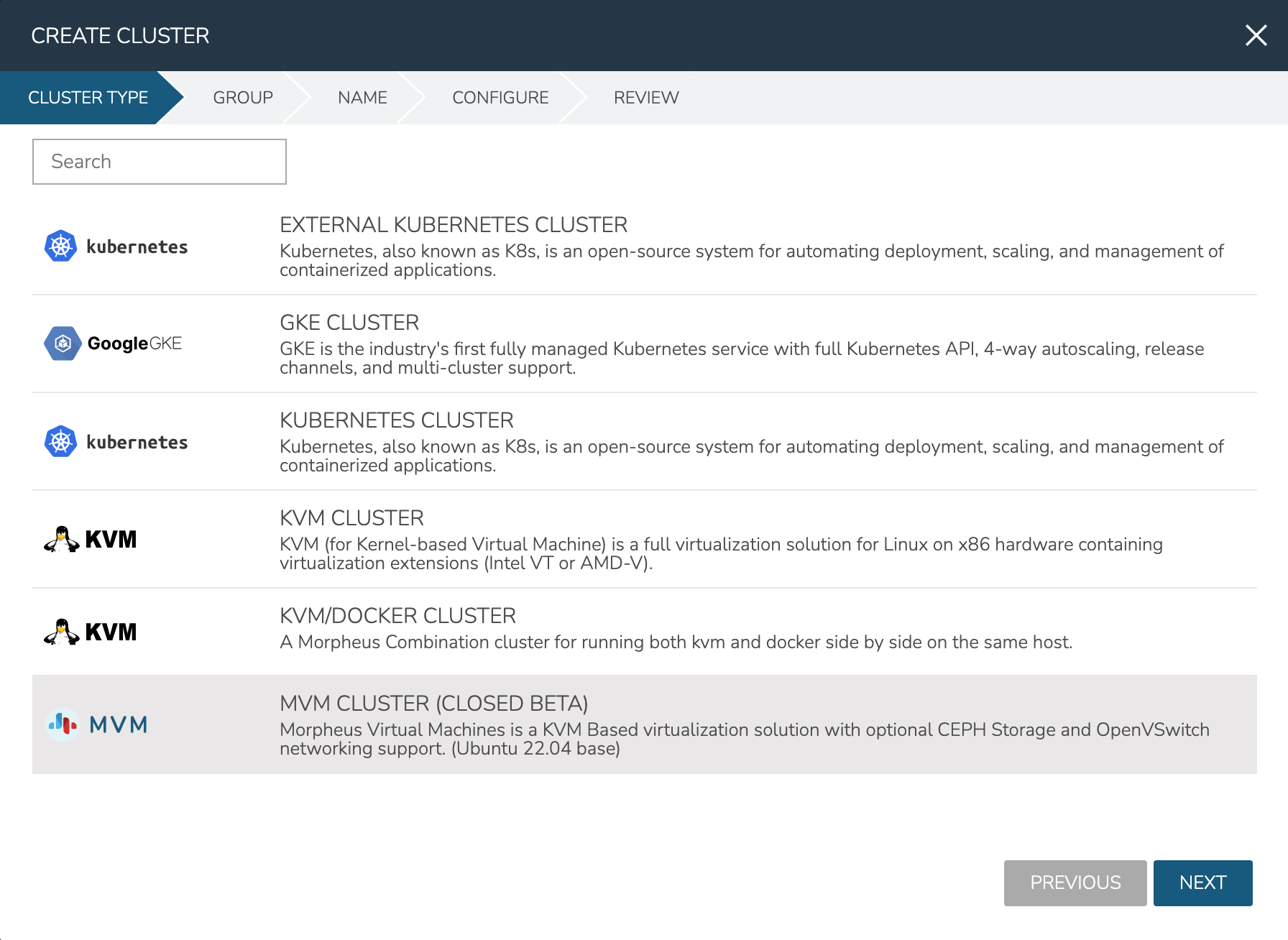

Cluster Types

| Name | Description | Provider Type |
|---|---|---|
| Kubernetes Cluster | By default, provisions a Kubernetes cluster consisting of 1 Kubernetes Master and 3 Kubernetes Worker nodes. Additional system layouts are available, including Master clusters. Custom layouts can be created. | Kubernetes |
| Docker Cluster | By default, provisions a Morpheus-controlled Docker Cluster with 1 host. Additional hosts can be added. Custom layouts can be created. Existing Morpheus Docker Hosts are automatically converted to Clusters when upgrading to 4.0.0. | Docker |
| EKS Cluster | Amazon EKS (Elastic Kubernetes Service) Clusters | Kubernetes |
| AKS Cluster | Azure AKS (Azure Kubernetes Service) Clusters | Kubernetes |
| Ext Kubernetes | Brings an existing (brownfield) Kubernetes cluster into Morpheus | Kubernetes |
| GKE Cluster | Google Cloud GKE (Google Kubernetes Engine) Clusters | Kubernetes |
| KVM Cluster | Onboard existing KVM Clusters. Manage them in Morpheus and utilize them as provisioning targets. See the KVM integration guide for more. | KVM |
| MVM Cluster | Morpheus Virtual Machines, a KVM-based virtualization solution. This Cluster type is currently in closed beta. See the MVM Clusters section below for complete usage documentation. | KVM |

Note

Refer to the Cluster Layouts documentation for the Clouds supported by each Cluster Type.

Requirements

Morpheus Role permissions:

- Infrastructure: Clusters > Full is required for viewing, creating, editing and deleting Clusters.
- Infrastructure: Clusters > Read is required for viewing the Cluster list and detail pages.

Cluster Permissions

- Cluster Permissions

Each Cluster has Group, Tenant and Service Plan access permission settings (“MORE” > Permissions on the Clusters list page).

- Namespace Permissions

Individual Namespaces also have Group, Tenant and Service Plan access permission settings.

MVM Clusters

Important

MVM clusters are currently in a closed beta with a selected subset of the customer base. It is not for production use and is not available to appliances that are not part of the closed beta program. Expect that MVM Cluster capabilities, as well as this documentation, will change significantly over the coming months.

The MVM virtualization solution is a hypervisor clustering technology built on KVM. Beginning with just a few basic Ubuntu boxes, Morpheus can create a cluster of hypervisor hosts complete with monitoring, failover, easy migration of workloads across the cluster, and zero-downtime maintenance access to hypervisor host nodes. All of this is backed by Morpheus Tenant capabilities, a highly-granular RBAC and policy engine, and an Instance Type library with automation workflows.

Features

Host Features

Automated MVM cluster provisioning

Ceph storage configuration for multi-node clusters

Cluster and individual host monitoring

Add hosts to existing clusters

Console support for cluster hosts

Add, edit and remove networks and data stores from clusters

Gracefully take hosts out of service with maintenance mode

Migration of workloads across hosts

Configurable automatic failover of running workloads when a host is lost

Integration with Morpheus costing

Governance through Morpheus RBAC, Tenancy, and Policies

VM Features

Workload provisioning and monitoring

Console support for running workloads

Migration of VMs across hosts

Configure automatic failover for individual VMs in the event a host is lost

Reconfigure running workloads to resize plan, add/remove disks, and add/remove network interfaces

Backup and restore MVM workloads

Take snapshots and revert to snapshots

Morpheus library and automation support

Integration with Morpheus costing features

Base Cluster Details

An MVM cluster using the hyperconverged infrastructure (HCI) Layout consists of at least three hosts. Physical hosts are recommended for full performance of the MVM solution. In smaller environments, it is possible to create an MVM cluster with three nested virtual machines, a single physical host (non-HCI only), or a single nested virtual machine (non-HCI only), though performance may be reduced. With just one host, it won’t be possible to migrate workloads between hosts or take advantage of automatic failover. Currently, each host must be a pre-existing Ubuntu 22.04 box that meets the environment and host system requirements in this section. Morpheus handles cluster configuration once you provide the IP address(es) of your host(s) and a few other details. Details on adding the cluster to Morpheus are contained in the next section.

Hardware Requirements

Operating System: Ubuntu 22.04

CPU: One or more 64-bit x86 CPUs, 1.5 GHz minimum with Intel VT or AMD-V enabled

Memory: 4 GB minimum. For non-converged Layouts, configure MVM hosts to use shared external storage, such as an NFS share or iSCSI target. Converged Layouts utilize Ceph for clustered storage and require a minimum of 4 GB of memory per Ceph disk.

Disk Space: For converged storage, a data disk of at least 500 GB is required for testing. More storage will be needed for production clusters. An operating system disk of 15 GB is also required. Clusters utilizing non-converged Layouts can configure external storage (NFS, etc.), while Morpheus will configure Ceph for multi-node clusters.

Network Connectivity: MVM hosts must be assigned static IP addresses. They also need DNS resolution of the Morpheus appliance and Internet access in order to download and install system packages for MVM dependencies, such as KVM, Open vSwitch (OVS), and more.
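The operating system and CPU requirements above can be checked on each prospective host before adding it to Morpheus. The sketch below is illustrative and not part of Morpheus: the function names are hypothetical, and it only inspects /etc/os-release and the vmx/svm CPU flags. A missing flag means hardware virtualization is unavailable or disabled; for a fuller check, the kvm-ok tool from Ubuntu's cpu-checker package can also be used.

```shell
#!/usr/bin/env bash
# Pre-flight sketch for a prospective MVM host (hypothetical helpers).
# Paths are parameters so the checks can be tried against sample files.

# Succeed if the os-release file describes Ubuntu 22.04.
is_ubuntu_2204() {
  local file=${1:-/etc/os-release}
  # os-release uses shell-compatible KEY=value lines; source it in a
  # subshell so ID and VERSION_ID do not leak into the caller.
  ( . "$file" && [ "$ID" = "ubuntu" ] && [ "$VERSION_ID" = "22.04" ] )
}

# Succeed if the CPU flags include Intel VT-x (vmx) or AMD-V (svm).
has_hw_virt() {
  local file=${1:-/proc/cpuinfo}
  grep -Eqw 'vmx|svm' "$file"
}
```

On a host, `is_ubuntu_2204 && has_hw_virt && echo "basic checks passed"` runs both checks against their default paths.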

Note

Ubuntu 22.04 uses netplan for networking. To configure a static IP address, change into the directory holding the config files (cd /etc/netplan) and edit the existing configuration file (/etc/netplan/50-cloud-init.yaml or /etc/netplan/00-installer-config.yaml or /etc/netplan/01-netcfg.yaml). If desired, back up the existing configuration before editing it (cp /etc/netplan/<file-name>.yaml /etc/netplan/<file-name>.yaml.bak). For additional information on configuration file formatting, refer to the netplan documentation. Once the configuration is updated, validate and apply it (netplan try). The try command validates and applies the configuration, then automatically rolls it back unless you confirm the change within its timeout (120 seconds by default), so a broken configuration does not leave the host unreachable.
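As a sketch of the static-IP configuration described above, the hypothetical helper below prints a netplan file for a single interface with a default route and one DNS server. The interface name, addresses, and optional MTU are placeholders; replace the contents of your existing file (after backing it up) rather than adding a second file that configures the same interface.

```shell
#!/usr/bin/env bash
# Print a netplan (version 2) config for one statically addressed interface.
# Hypothetical helper; every argument is a placeholder for your own values.
# Usage: render_netplan <iface> <address/prefix> <gateway> <dns-server> [mtu]
render_netplan() {
  local iface=$1 addr=$2 gw=$3 dns=$4 mtu=${5:-}
  cat <<EOF
network:
  version: 2
  ethernets:
    ${iface}:
      dhcp4: false
      addresses:
        - ${addr}
      routes:
        - to: default
          via: ${gw}
      nameservers:
        addresses: [${dns}]
EOF
  # Optional MTU, e.g. 9000 when the interface carries jumbo-frame traffic.
  if [ -n "$mtu" ]; then
    printf '      mtu: %s\n' "$mtu"
  fi
}
```

For example: `render_netplan eth0 10.0.0.11/24 10.0.0.1 10.0.0.2 | sudo tee /etc/netplan/00-installer-config.yaml >/dev/null && sudo netplan try`. The addresses are example values only.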

Note

Clustered storage needs as much network bandwidth as possible. Network interfaces of at least 10 Gbps with jumbo frames enabled are required for clustered storage and for situations when all traffic is running through the management interface (when no compute or storage interface is configured). It’s highly likely that performance will be unacceptable with any lower configurations.
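One way to confirm that jumbo frames work end to end is to send a ping as large as the MTU allows with fragmentation prohibited. For IPv4 without header options, the largest ICMP echo payload is the MTU minus 28 bytes (a 20-byte IPv4 header plus an 8-byte ICMP header), so a 9000-byte MTU allows an 8972-byte payload. The helper name below is hypothetical; `ping -M do` is the Linux iputils option that forbids fragmentation.

```shell
#!/usr/bin/env bash
# Largest ICMP echo payload that fits in one unfragmented IPv4 packet:
# MTU minus 20 bytes (IPv4 header, no options) minus 8 bytes (ICMP header).
max_ping_payload() {
  local mtu=$1
  echo $(( mtu - 28 ))
}
```

`ping -M do -s "$(max_ping_payload 9000)" -c 3 <peer-storage-ip>` should succeed between hosts on the storage network; an error such as "message too long", or timeouts, suggests that some interface or switch port on the path is not configured for jumbo frames.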

| Description | Source | Destination | Port | Protocol |
|---|---|---|---|---|
| Morpheus Agent communication with the Morpheus appliance | MVM Host | Morpheus appliance server | 443 | TCP |
| MVM host configuration and management | Morpheus appliance server | MVM Host | 22 | TCP |
| MVM interhost communication for clustered deployments | MVM Host | MVM Host | 22 | TCP |
| Morpheus server SSH access for deployed virtual machines | Morpheus appliance server | MVM-hosted virtual machines | 22 | TCP |
| Morpheus server WinRM (HTTP) access for deployed virtual machines | Morpheus appliance server | MVM-hosted virtual machines | 5985 | TCP |
| Morpheus server WinRM (HTTPS) access for deployed virtual machines | Morpheus appliance server | MVM-hosted virtual machines | 5986 | TCP |
| Ceph Storage | MVM Host | MVM Host | 3300 | TCP |
| Ceph Storage | MVM Host | MVM Host | 6789 | TCP |
| Ceph MDS/OSD | MVM Host | MVM Host | 6800-7300 | TCP |
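If the hosts run a host firewall, the inbound rules implied by the port table can be generated rather than typed by hand. The sketch below assumes ufw (Ubuntu's default firewall front end) and only prints commands for review; the function name, subnet, and appliance address are placeholders. It covers inbound traffic to the MVM host itself: outbound 443 to the appliance is permitted by ufw's default allow-outgoing policy unless you have changed it, and the 22/5985/5986 rows for deployed virtual machines concern the guests rather than the host.

```shell
#!/usr/bin/env bash
# Print (do not apply) ufw rules for the inbound MVM host ports in the table.
# Usage: mvm_ufw_rules <mvm-host-subnet-cidr> <morpheus-appliance-ip>
mvm_ufw_rules() {
  local peers=$1 appliance=$2 port
  if [ -z "$peers" ] || [ -z "$appliance" ]; then
    echo "usage: mvm_ufw_rules <mvm-host-subnet-cidr> <morpheus-appliance-ip>" >&2
    return 1
  fi
  # SSH from the Morpheus appliance for host configuration and management.
  echo "ufw allow from ${appliance} to any port 22 proto tcp"
  # SSH between MVM hosts, Ceph monitors (3300, 6789), Ceph daemons (6800-7300).
  for port in 22 3300 6789 6800:7300; do
    echo "ufw allow from ${peers} to any port ${port} proto tcp"
  done
}
```

`mvm_ufw_rules 10.0.1.0/24 10.0.0.5` prints five `ufw allow` commands; review them, then run each with sudo on every MVM host.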

Example Cluster Deployment

In this example cluster, each host box consists of:

4 vCPU

16 GB memory

20 GB OS boot disk

250 GB data disk (deployed to /dev/sdb)

3 network interfaces for management, storage, and compute traffic (set to eth0, eth1, and eth2, respectively)

Note

The 250 GB data disks used in this example are simply for demonstration purposes. A typical test cluster should have at least 500 GB of storage, and more will be required for production. Do not RAID disks on physical servers. Multiple disks may be used, and they will be added to the total Ceph storage as one large volume. In the DATA DEVICE configuration during cluster setup, give a comma-separated list of disk devices if required.

MVM clusters must also live in Morpheus-type Clouds (See Infrastructure > Clouds). A pre-existing Morpheus Cloud may be used or a new Cloud could be created to handle MVM management.

Provisioning the Cluster

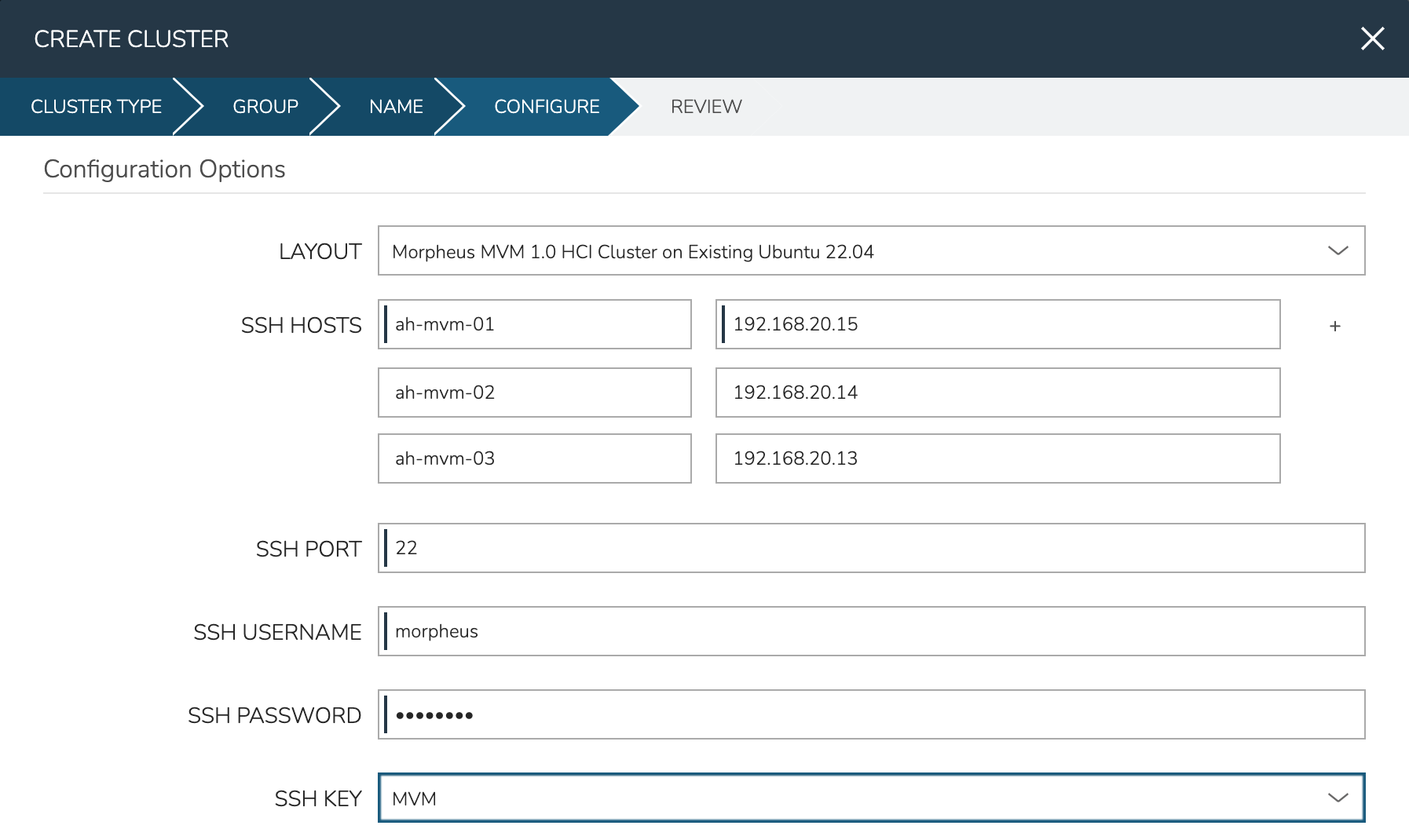

As mentioned in the previous section, this example is starting with three provisioned Ubuntu 22.04 boxes. I also have a Morpheus-type Cloud to house the cluster. Begin the cluster creation process from the Clusters list page (Infrastructure > Clusters). Click + ADD CLUSTER and select “MVM Cluster”.

Morpheus gives the option to select a hyperconverged infrastructure (HCI) LAYOUT or non-HCI. In this example, the HCI Layout is used (requires a three-node minimum). Next, configure the names and IP addresses for the host boxes (SSH HOST). The SSH HOST name is simply a display name in Morpheus; it does not need to be a hostname. By default, configuration space is given for three hosts, which is what this example cluster will have. You must configure at least one, and more can be added by clicking the (+) button. The SSH PORT is pre-configured for port 22; change this value if applicable in your environment. Next, enter the credentials of a pre-existing user on the host boxes (SSH USERNAME and SSH PASSWORD) and the SSH KEY. Use a regular user with sudo access.

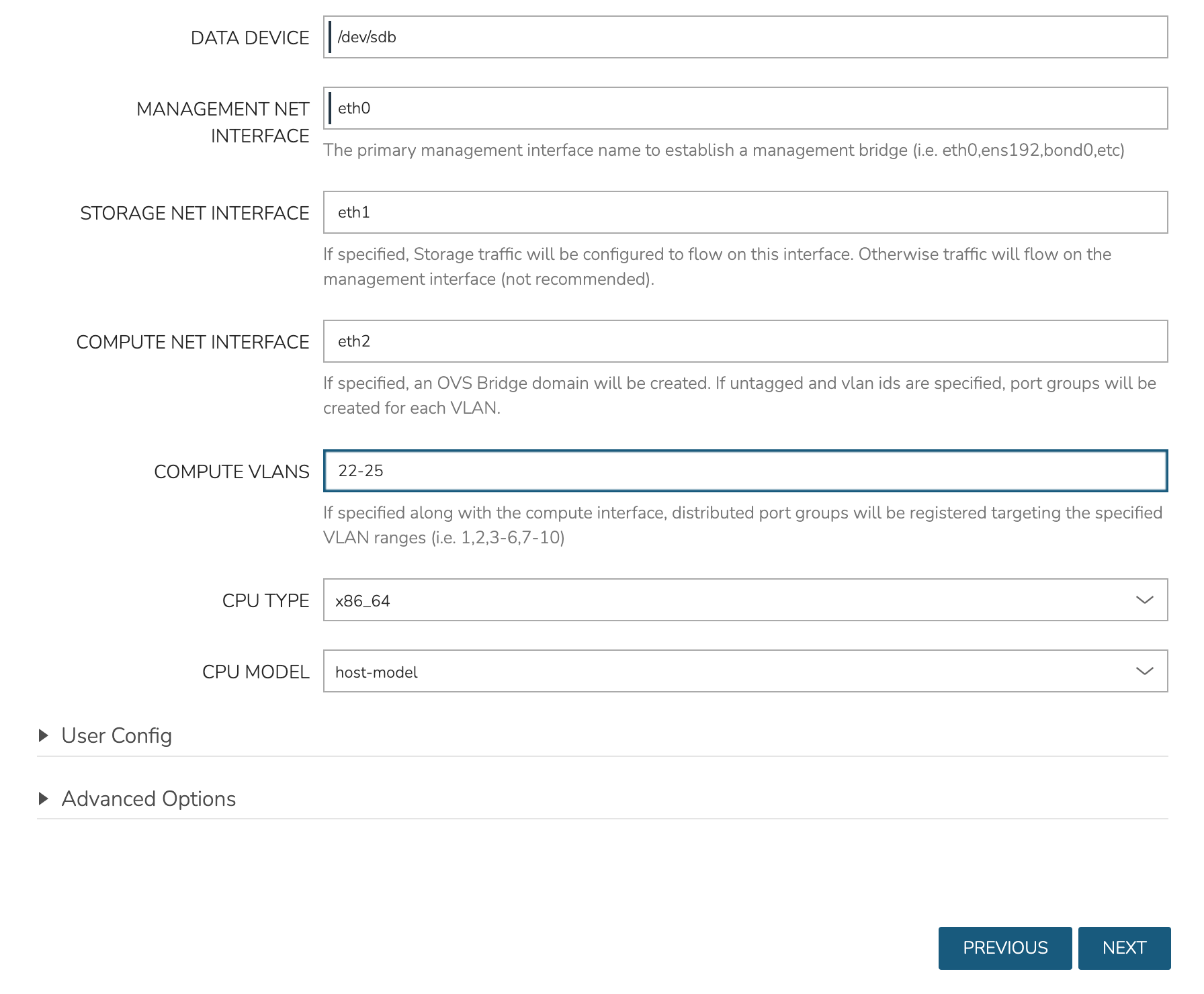

In the next part of the modal, you’ll configure the storage devices and network interfaces. When Ceph initializes, it needs to be pointed to an initial data device (or devices). Configure this in the DATA DEVICE field. Multiple devices may be given in a comma-separated list and will be added to the total Ceph storage as one large volume. Find your disk names, if needed, with the lsblk command. In my case, the target device is located at /dev/sdb.
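If a host has several data disks, the DATA DEVICE value can be assembled from lsblk output. The sketch below (hypothetical helper name) reads `lsblk -d -n -o NAME,TYPE` output, keeps whole disks, skips the operating system disk you name, and prints a comma-separated list. It does not check whether a disk is empty or in use, so review the result before pasting it into the form.

```shell
#!/usr/bin/env bash
# Build a DATA DEVICE value from `lsblk -d -n -o NAME,TYPE` output on stdin.
# Keeps whole disks only (drops rom, loop, etc.) and skips the OS disk in $1.
data_device_list() {
  local os_disk=$1
  awk -v skip="$os_disk" '
    $2 == "disk" && $1 != skip { printf "%s/dev/%s", sep, $1; sep = "," }
    END { print "" }
  '
}
```

Assuming the OS disk on the example host is sda, `lsblk -d -n -o NAME,TYPE | data_device_list sda` would print /dev/sdb.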

Though not strictly required, it’s recommended to have separate network interfaces to handle cluster management, storage traffic, and compute. In this example, eth0 is configured as the MANAGEMENT NET INTERFACE, which handles communication between the cluster hosts. eth1 is configured as the STORAGE NET INTERFACE and eth2 is configured as the COMPUTE NET INTERFACE. The COMPUTE VLANS field can take a single value (e.g. 1) or a range of values (e.g. 22-25). This will create OVS port group(s) selectable as networks when provisioning workloads to the cluster. If needed, you can find your network interface names with the ip a command.
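To see which VLAN IDs a COMPUTE VLANS entry covers, the hypothetical helper below expands a single value or an inclusive range and rejects IDs outside the usable 802.1Q range of 1 to 4094. Treating each ID as one OVS port group is an interpretation of the description above, not documented Morpheus behavior, and Morpheus parses the field itself.

```shell
#!/usr/bin/env bash
# Expand a COMPUTE VLANS value ("1" or "22-25") into the VLAN IDs it covers.
# Hypothetical helper for illustration only.
expand_vlans() {
  local spec=$1 first last
  if [[ $spec =~ ^([0-9]+)-([0-9]+)$ ]]; then
    first=$((10#${BASH_REMATCH[1]}))
    last=$((10#${BASH_REMATCH[2]}))
  elif [[ $spec =~ ^[0-9]+$ ]]; then
    first=$((10#$spec))
    last=$first
  else
    echo "invalid VLAN value: $spec" >&2
    return 1
  fi
  # 802.1Q reserves VLAN IDs 0 and 4095, leaving 1-4094 usable.
  if (( first < 1 || last > 4094 || first > last )); then
    echo "VLAN range must satisfy 1 <= first <= last <= 4094: $spec" >&2
    return 1
  fi
  seq -s ' ' "$first" "$last"
}
```

`expand_vlans 22-25` prints `22 23 24 25`, and `expand_vlans 1` prints `1`.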

Finally, only one CPU TYPE is currently supported (x86_64), though this may change in the future. For CPU MODEL configuration, we surface the entire database of model configurations from libvirt. If you don’t have a specific reason to choose a particular model, select host-model, which is the default option.

At this point we’ve kicked off the process for configuring the cluster nodes. Drill into the Cluster detail page and click on the History tab. Here we can monitor the progress of configuring the cluster. Morpheus will run scripts to install KVM, install Ceph, install OVS, and to prepare the cluster. In just a short time, the cluster provisioning should complete and the cluster will be ready to deploy workloads.

Provisioning a Workload

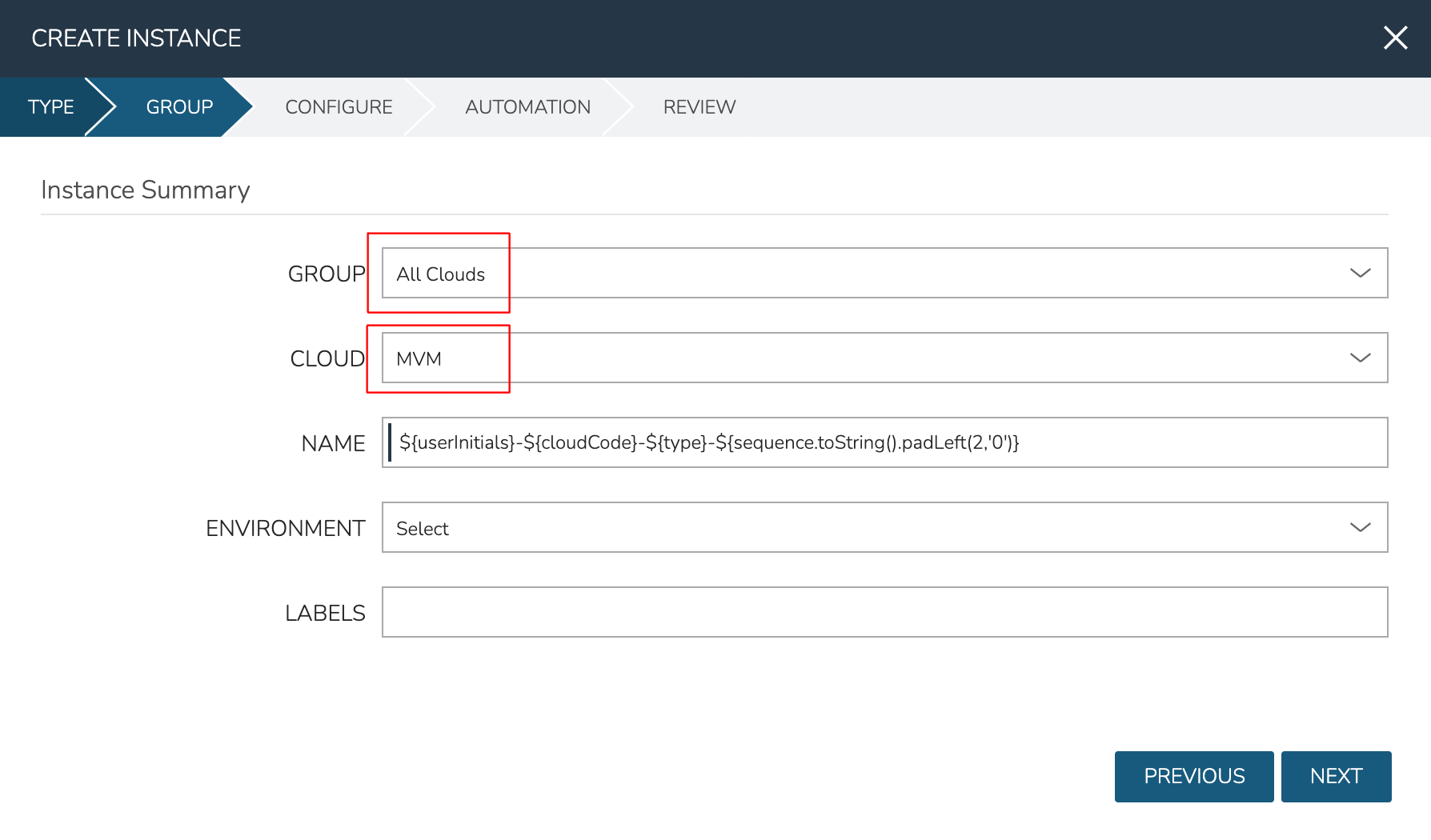

At this point, the cluster is ready for workloads to be provisioned to it. The system default Ubuntu Instance Type contains a compatible Layout for MVM deployment. Add an Instance from the Instances list page (Provisioning > Instances). After selecting the Instance Type, choose a Group that allows for selection of the Morpheus-type Cloud containing the MVM cluster.

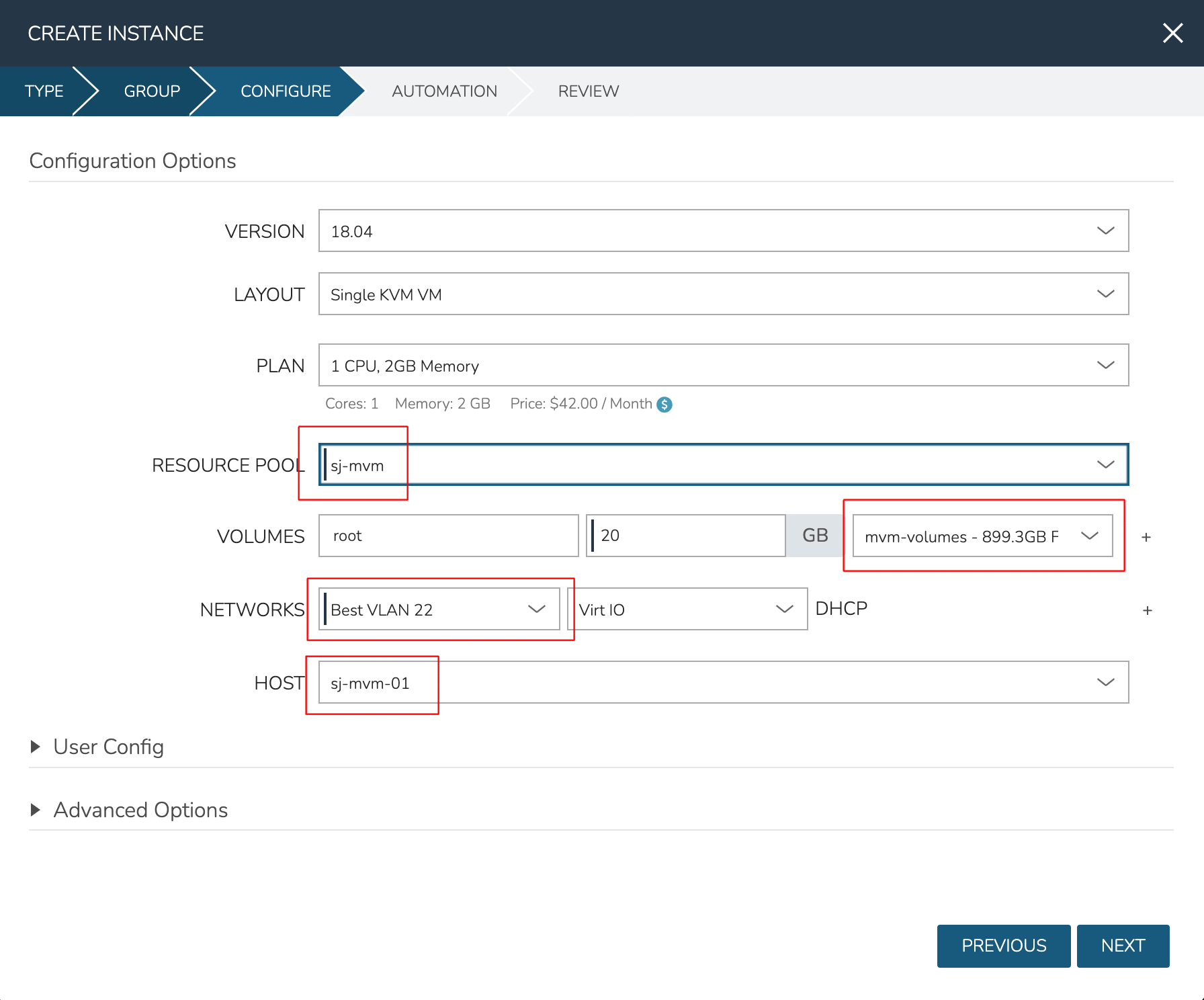

After moving to the next tab, select a Plan based on resource needs. From the RESOURCE POOL field, select the desired MVM cluster. When configuring VOLUMES for the new workload, note that space can be claimed from the Ceph volume. Within NETWORKS, we can add the new workload to one of the VLANs set up as part of cluster creation. Finally, note that we can choose the HOST the workload should run on.

Review and complete the provisioning wizard. After a short time, the workload should be up and running. With a workload now running on the cluster, we can take a look at some of the monitoring, migration, failover, and other actions we can take for workloads running on MVM clusters.

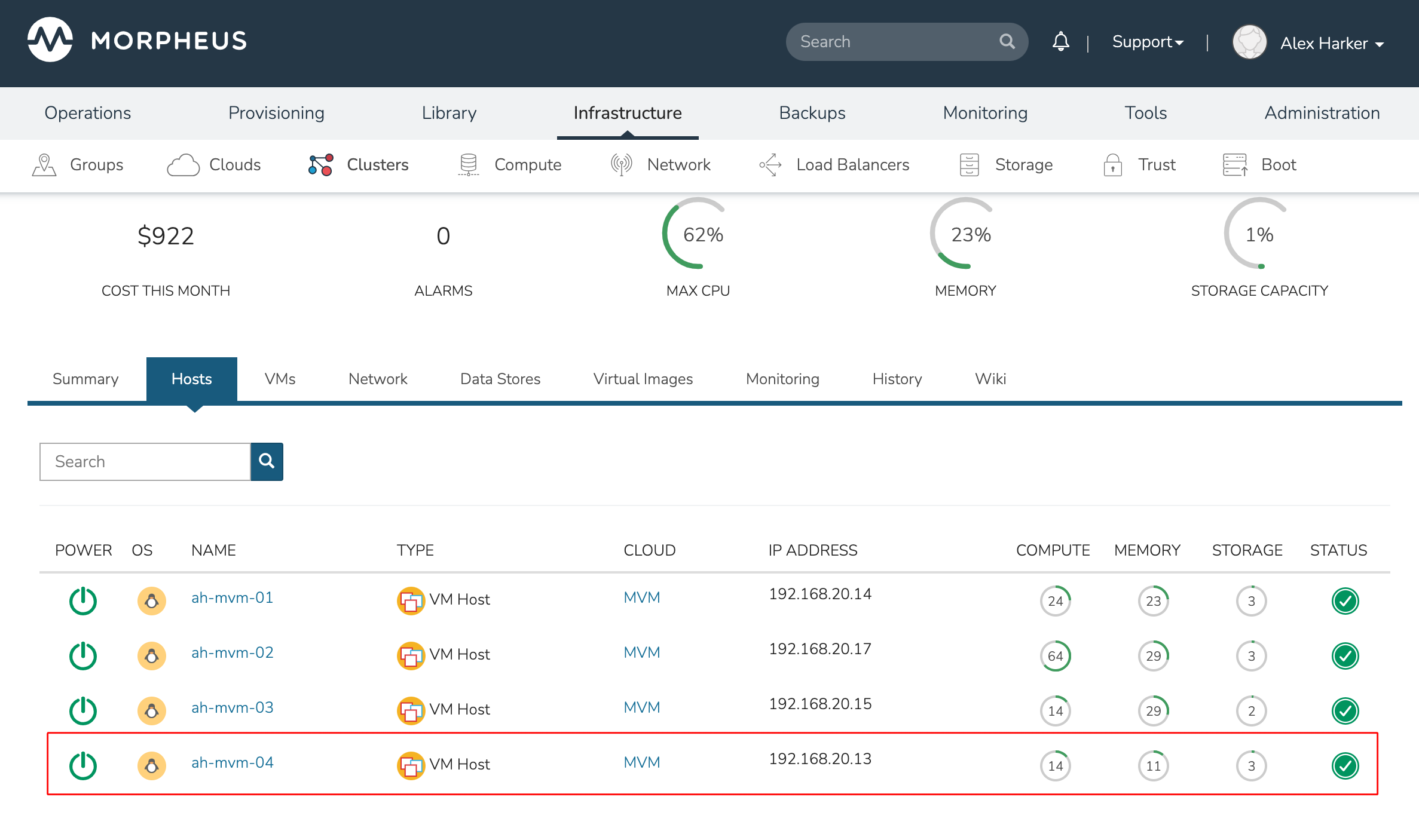

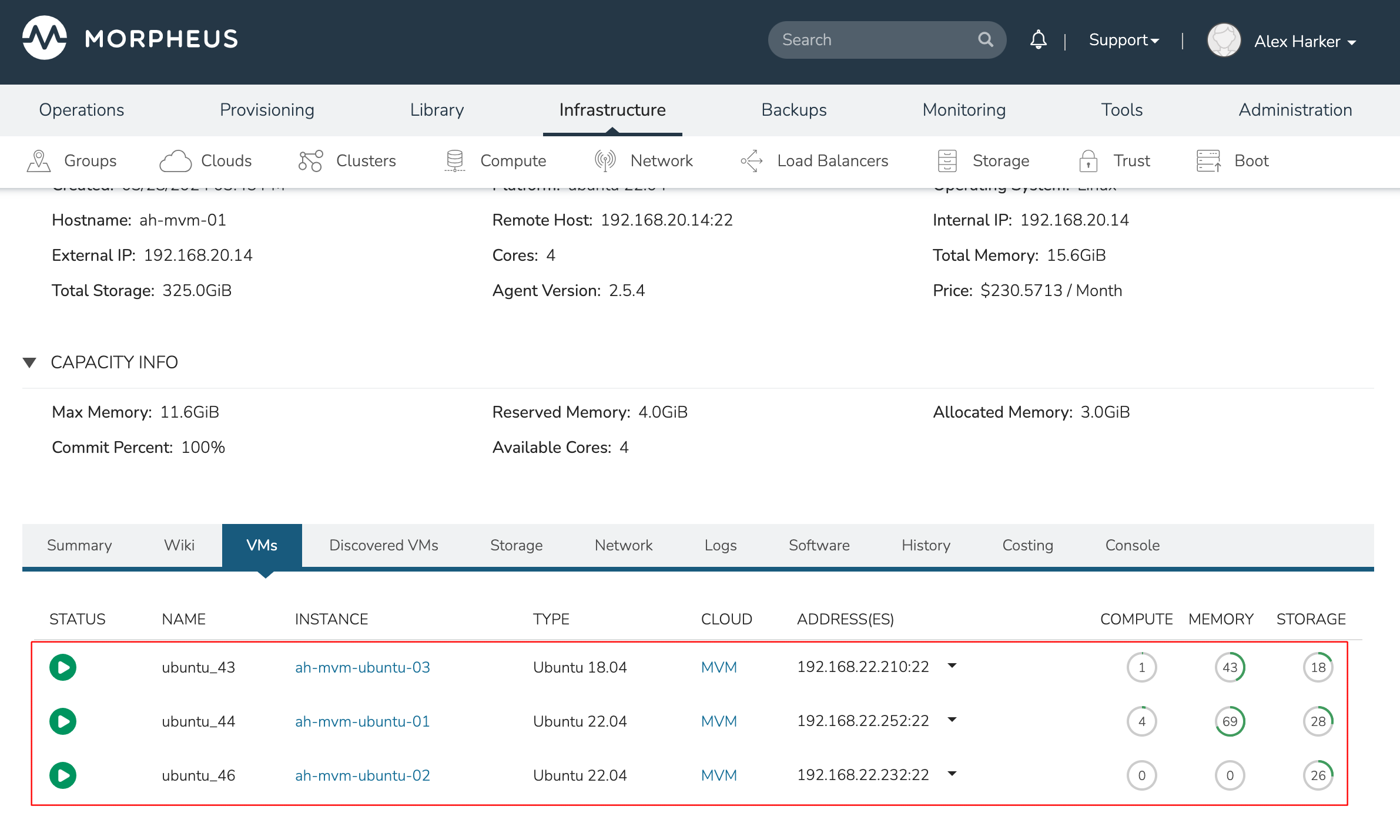

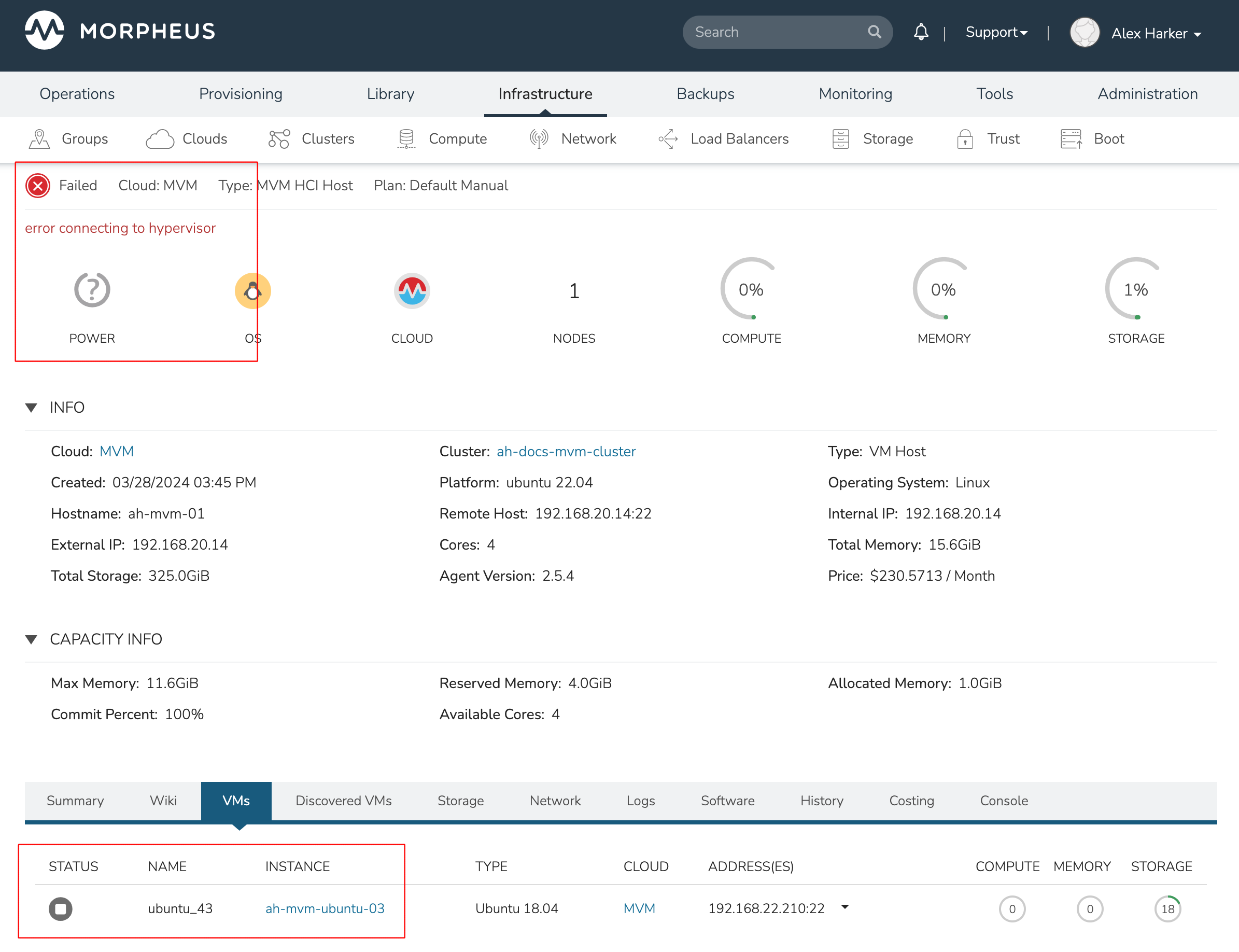

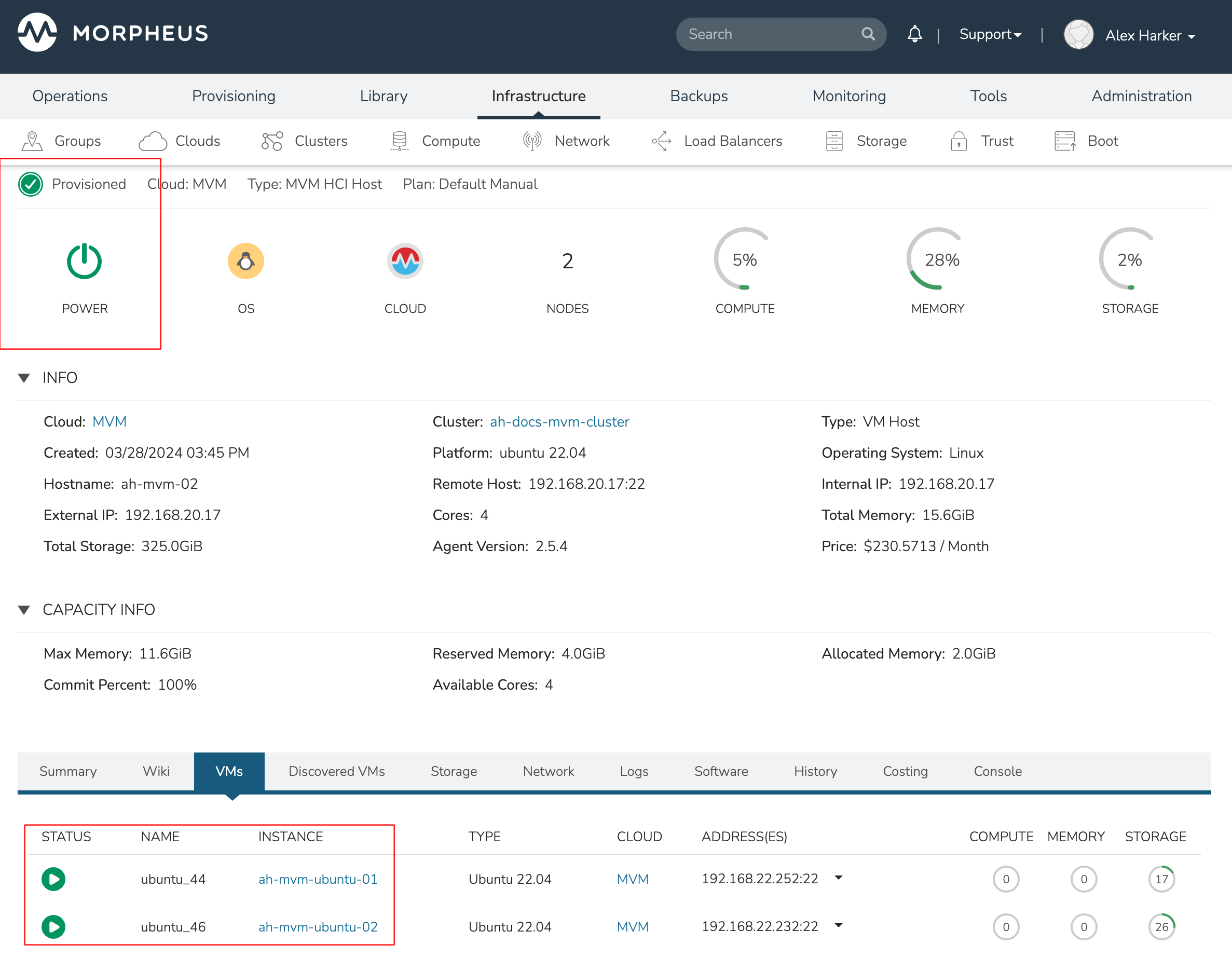

Monitoring the Cluster

With the server provisioned and a workload running, take a look at the monitoring and actions capabilities on the cluster detail page (Infrastructure > Clusters, then click on the new MVM cluster). View cluster performance and resource usage (Summary and Monitoring tabs), drill into individual hosts (Hosts tab), see individual workloads (VMs tab), and more.

Moving Workloads Between Hosts

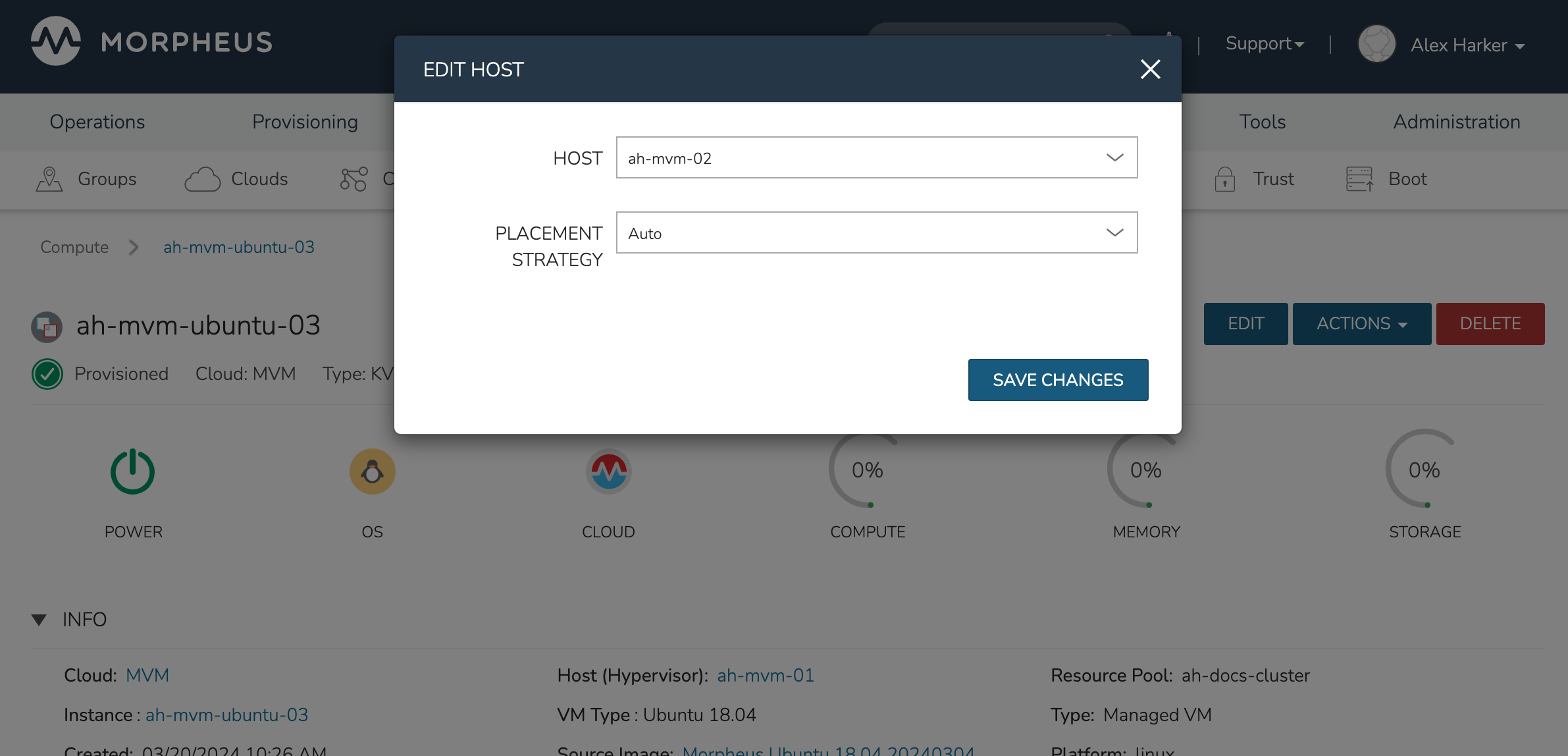

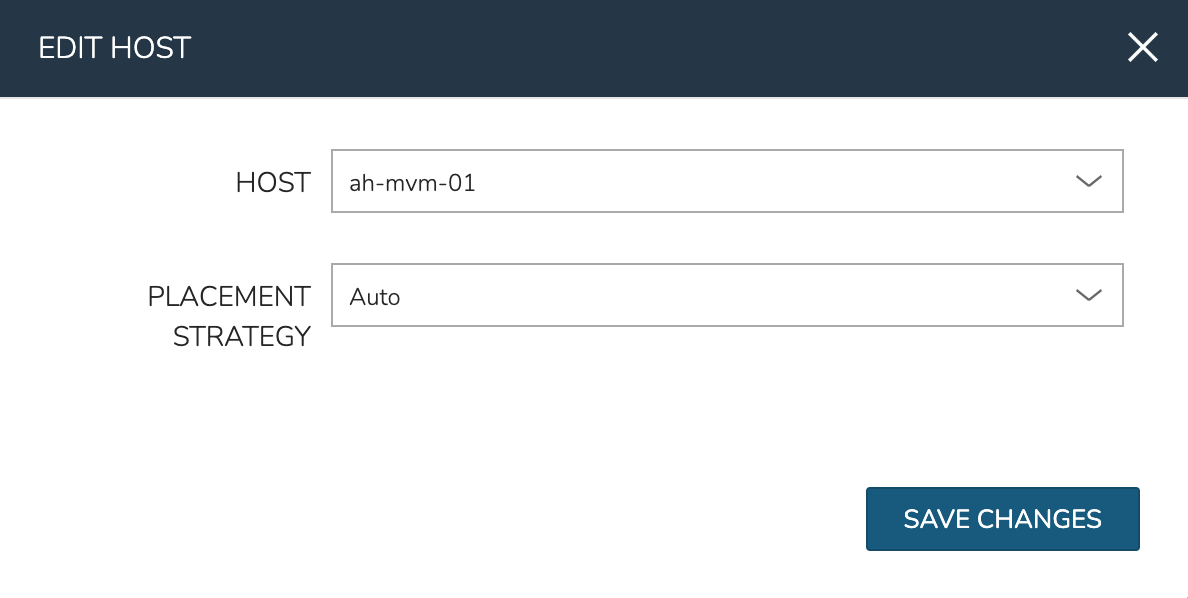

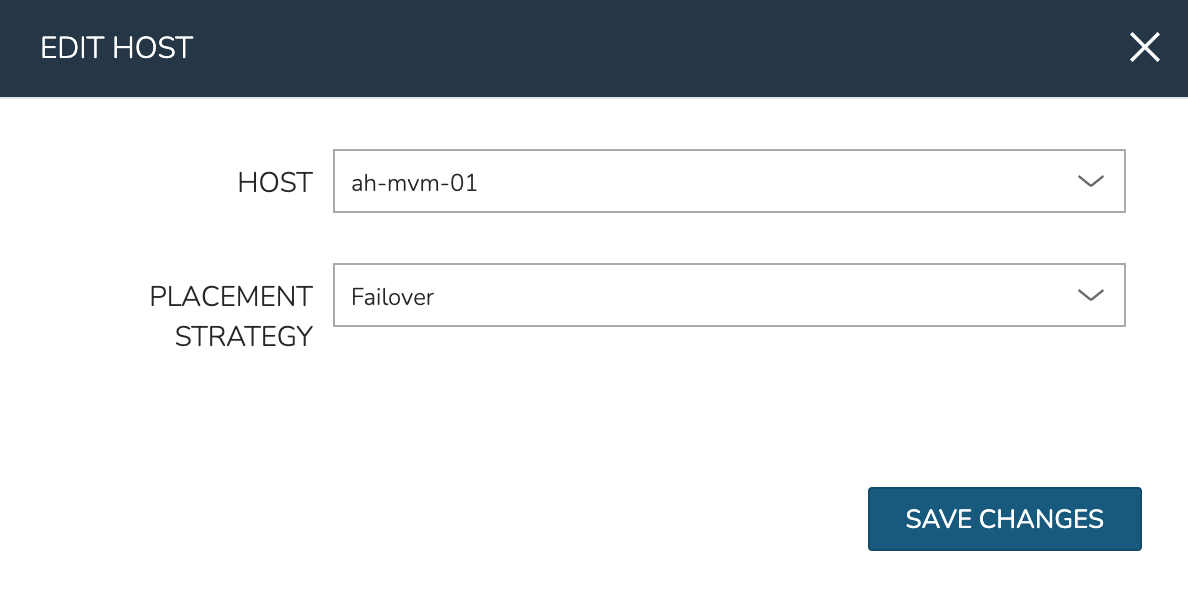

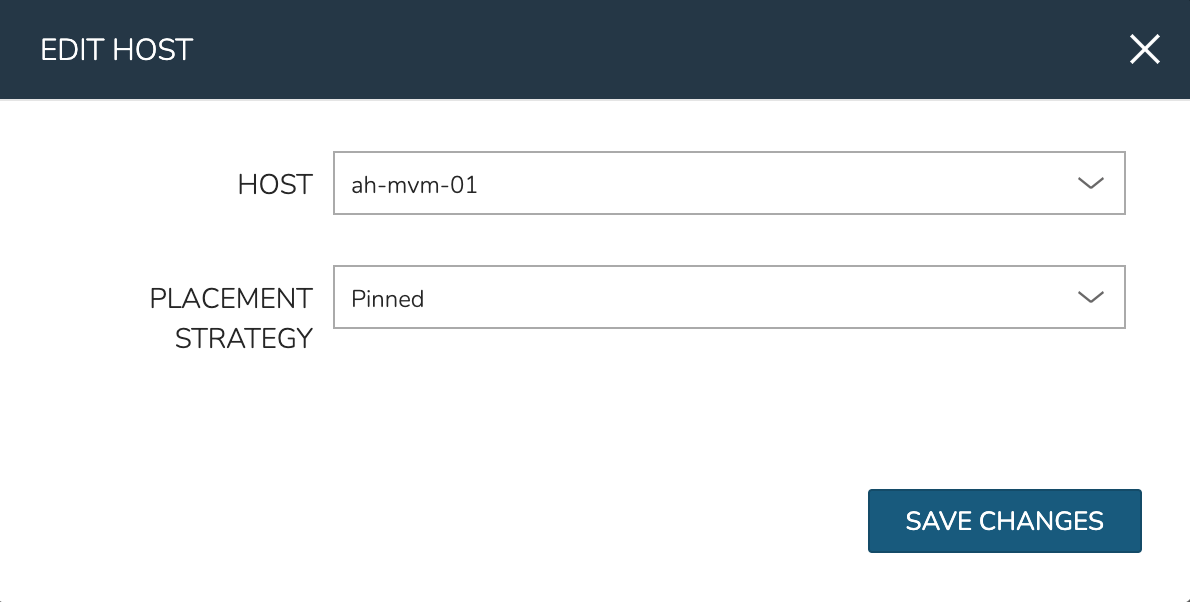

To manually move workloads between hosts, drill into the detail page for the VM (from the VMs tab of the cluster detail page). Click ACTIONS and select “Manage Placement”. Choose a different host and select from the following placement strategies:

Auto: Manages VM placement based on load

Failover: Moves VMs only when failover is necessary

Pinned: Will not move this workload from the selected host

Within a short time, the workload is moved to the new host.

Adding hosts

The process of adding hosts to a pre-existing cluster is very similar to the process of provisioning the cluster initially. The requirements for the new worker node will be identical to the nodes initially added when the cluster was first provisioned. See the earlier sections in this guide for additional details on configuring the worker nodes.

To add the host, begin from the MVM Cluster detail page (selected from the list at Infrastructure > Clusters). From the Cluster detail page, click ACTIONS and select “Add Worker”. Configurations required are the same as those given when the cluster was first created. Refer to the section above on “Provisioning the Cluster” for a detailed description of each configuration.

Once Morpheus has completed its configuration scripts and joined the new worker node to the cluster, it will appear in a ready state within the Hosts tab of the Cluster detail page. When provisioning workloads to this Cluster in the future, the new node will be selectable as a target host for new Instances. It will also be an available target for managing placement of existing VMs running on the cluster.

Note

It’s useful to confirm that all scripts related to creating the new host and joining it to the cluster completed successfully. To confirm, navigate to the detail page for the new host (Infrastructure > Clusters > Selected Cluster > Hosts Tab > Selected Host) and click on the History tab. Confirm that all scripts, including those run on the pre-existing hosts, completed successfully, as it’s possible the new host was added successfully (green status) but failed to join the cluster. In that situation, adding the new host may appear to have succeeded, but it will not be possible to provision workloads onto it because it did not join the cluster.

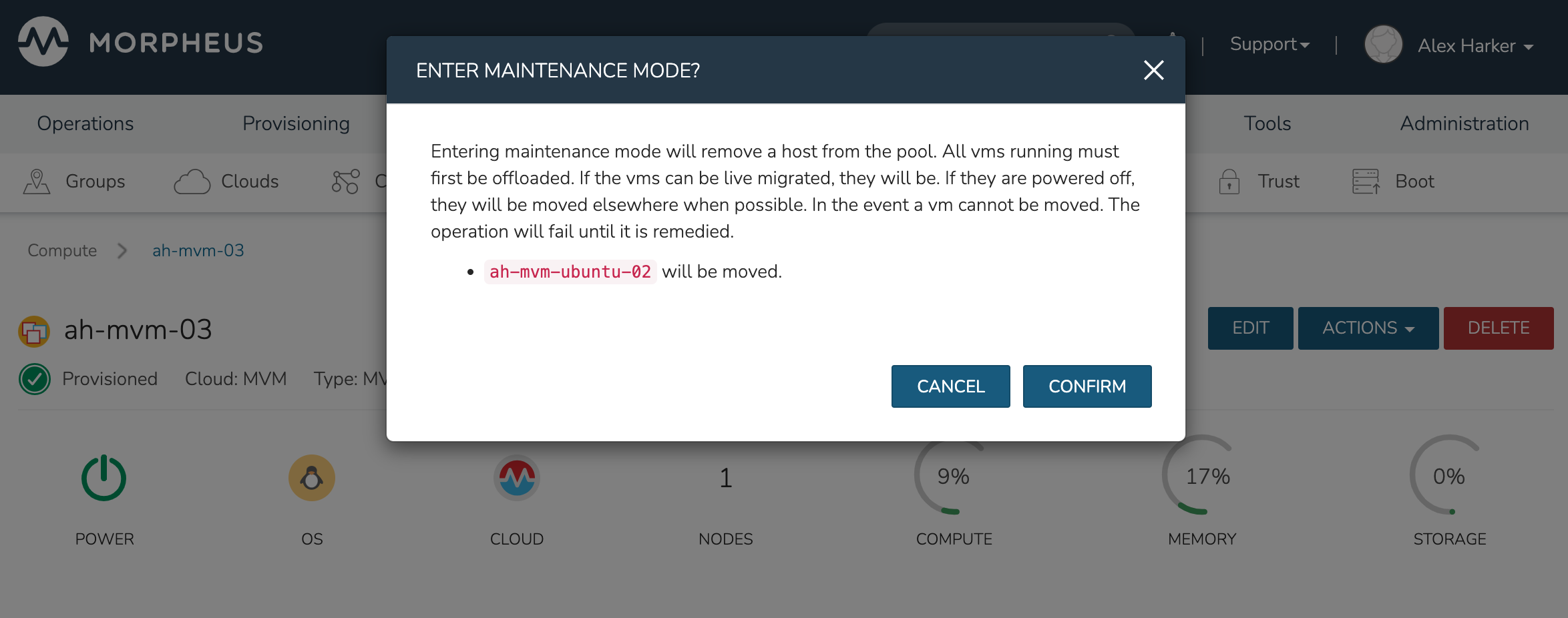

Maintenance Mode

MVM cluster hosts can be easily taken out of service for maintenance when needed. From the host detail page, click ACTIONS and then click “Enter Maintenance.” When entering maintenance mode, the host is removed from the pool. Live VMs that can be migrated are moved to other hosts. VMs that are powered off are also moved when possible. When a live VM cannot be moved (for example, if it’s “pinned” to the host), the host will not go into maintenance mode until that situation is cleared. You could manually move the VM to a new host, or power it down if it’s non-essential. After taking that action, attempt to put the host into maintenance mode once again. Morpheus UI provides a helpful dialog showing which VMs on the host will be moved as the host goes into maintenance mode. When maintenance has finished, go back to the ACTIONS menu and select “Leave Maintenance.”

Failover

MVM supports automatic failover of running workloads in the event of the loss of a host. Administrators can control the failover behavior through the “Manage Placement” action on any running VM. From the VM detail page, click ACTIONS and select “Manage Placement”. Any VM with a placement strategy of “Auto” or “Failover” will be eligible for an automatic move in the event its host is lost. When a host is lost, a workload configured to be moved during an automatic failover event will be up and running on a different cluster host within a short time. Any VMs pinned to a lost host will not be moved and will be inaccessible while the host is down. When the host is restored, those VMs will be in a stopped state and may be restarted if needed.

This three-node cluster has three VMs running on the first host:

Each of these VMs is configured for a different failover strategy. When the host is lost, we should expect to see the first two VMs moved to an available host (since they have the “Auto” and “Failover” placement strategies, respectively). We should not see the third VM moved.

After loss of the host these three VMs were running on, we can see the lost host still has one associated VM in a stopped state. The other two VMs are running on a second host which is still available.

When the lost host returns, the moved VMs will come back to their original host. The third VM is associated with this host as well and is in a stopped state until it is manually restarted.
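The outcomes in this example amount to a small decision table. The function below is purely illustrative: it is not Morpheus code, its name is hypothetical, and it simply maps a VM's placement strategy to what happens when its host is lost, according to the behavior described above.

```shell
#!/usr/bin/env bash
# Illustrative decision table (not Morpheus code): what happens to a VM when
# its host is lost, based on its placement strategy (case-insensitive).
failover_outcome() {
  case ${1,,} in
    auto|failover) echo "restarted on another host" ;;
    pinned)        echo "left stopped on the lost host" ;;
    *)             echo "unknown placement strategy: $1" >&2; return 1 ;;
  esac
}
```

For the three VMs in this example, `failover_outcome Auto` and `failover_outcome Failover` print the "restarted" result, while `failover_outcome Pinned` prints the "left stopped" result observed above.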

Decommissioning a Ceph-backed Host

Morpheus MVM clusters utilize global pools, so the object storage daemons (OSDs) on a host must be removed manually before decommissioning the host and removing it from the cluster.

First, put the host into maintenance mode and allow time for any running VMs to be migrated to other hosts. See the section above, if needed, for additional details on maintenance mode.

Outing the OSDs

Begin by checking the cluster health. If the cluster is not in a healthy state, an OSD should not be removed:

ceph -s

You should see something similar to the following:

$ ceph -s

cluster:

id: bxxxx-bxxxxx-4xxx...

health: HEALTH_OK

Important

Do not remove an OSD if the cluster health does not return HEALTH_OK.
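When scripting this procedure, the health gate can be made explicit. The sketch below (hypothetical helper name) reads the output of `ceph health`, whose first word is the overall status such as HEALTH_OK or HEALTH_WARN, and succeeds only for HEALTH_OK.

```shell
#!/usr/bin/env bash
# Succeed only when `ceph health` output (read on stdin) reports HEALTH_OK.
health_is_ok() {
  local status=""
  # The first word is the overall status; any detail text after it is ignored.
  read -r status _ || [ -n "$status" ] || return 1
  [ "$status" = "HEALTH_OK" ]
}
```

`ceph health | health_is_ok || { echo "cluster not healthy; not removing OSDs" >&2; exit 1; }` stops a script before any OSD is touched.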

Get the OSD IDs. The following command will return a map of OSDs and their ID values:

ceph osd df tree

We’re now ready to mark the OSD out. Do so with the following command:

ceph osd out osd.<osd-id>

Wait for the cluster to rebalance. Do not remove any additional OSDs until the cluster has rebalanced. As above, you can use ceph -s to check cluster status. Wait until output like this:

data:

volumes: 1/1 healthy

pools: 5 pools, 593 pgs

objects: 6.69k objects, 19 GiB

usage: 48 GiB used, 2.9 TiB / 2.9 TiB avail

pgs: 677/20079 objects degraded (3.372%)

1115/20079 objects misplaced (5.553%)

567 active+clean

13 active+recovery_wait+degraded

6 active+remapped+backfill_wait

6 active+recovery_wait+undersized+degraded+remapped

1 active+recovering+undersized+degraded+remapped

…becomes something like this:

data:

volumes: 1/1 healthy

pools: 5 pools, 593 pgs

objects: 6.69k objects, 19 GiB

usage: 53 GiB used, 2.9 TiB / 2.9 TiB avail

pgs: 593 active+clean

This process must be completed for each OSD that is to be removed. Once again, wait for the cluster to rebalance between each OSD removal.
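Waiting for the rebalance can be automated by comparing the total placement group (PG) count with the number of clean PGs in `ceph -s` output, as in the two samples above. The sketch below (hypothetical helper name) parses the human-readable output, so its format assumptions may not hold across Ceph releases. It counts active+clean PGs, including ones that are only scrubbing, and succeeds when they account for every PG.

```shell
#!/usr/bin/env bash
# Read `ceph -s` output on stdin; succeed when every PG is active+clean
# (PGs that are active+clean and merely scrubbing also count as clean).
all_pgs_clean() {
  awk '
    # "pools: 5 pools, 593 pgs": the total PG count is the field before "pgs".
    /pools:/ { for (i = 2; i <= NF; i++) if ($i == "pgs") total = $(i - 1) + 0 }
    # "567 active+clean" or "pgs: 593 active+clean": add the count.
    $NF ~ /^active\+clean(\+scrubbing(\+deep)?)?$/ { clean += $(NF - 1) }
    END { exit !(total > 0 && clean == total) }
  '
}
```

`until ceph -s | all_pgs_clean; do sleep 30; done` polls every 30 seconds until the rebalance finishes.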

Stopping OSD service

We can now stop and disable the OSD service for each OSD that should be removed. On the host being decommissioned, stop the OSD service:

systemctl stop ceph-osd@<osd-id>.service

Disable the OSD service so it does not start again at boot:

systemctl disable ceph-osd@<osd-id>.service

Removing OSDs from the CRUSH map

Remove the OSDs from the CRUSH map:

ceph osd crush remove osd.<osd-id>

This must be repeated for each OSD that should be removed. Next, validate the removal:

ceph osd crush tree

Once again, wait for the cluster rebalance to complete. Run ceph -s and look for a healthy state similar to the following:

data:

volumes: 1/1 healthy

pools: 5 pools, 593 pgs

objects: 6.69k objects, 19 GiB

usage: 53 GiB used, 2.9 TiB / 2.9 TiB avail

pgs: 593 active+clean

Remove the Ceph Monitor (ceph-mon) service

First find the service:

systemctl --type=service --state=running | grep ceph-mon

The service should look something like: ceph-mon@<hostname provided at cluster provision time>.service

Stop the service:

systemctl stop ceph-mon@<hostname>.service

Remove the monitor by its ID. The ID is the part between “ceph-mon@” and “.service”. Generally, this is the hostname.

ceph mon remove <hostname>
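The monitor ID can also be derived from the unit name with shell parameter expansion. The helper below is a hypothetical sketch: it strips the `ceph-mon@` prefix and `.service` suffix and refuses names of any other shape.

```shell
#!/usr/bin/env bash
# Print the monitor ID from a unit name such as ceph-mon@node1.service
# (the text between "ceph-mon@" and ".service").
mon_id_from_unit() {
  local unit=$1
  case $unit in
    ceph-mon@?*.service) ;;
    *) echo "unexpected unit name: $unit" >&2; return 1 ;;
  esac
  unit=${unit#ceph-mon@}
  echo "${unit%.service}"
}
```

`mon_id_from_unit ceph-mon@node1.service` prints node1, the value to pass to `ceph mon remove`.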

Remove the host from the CRUSH map:

ceph osd crush rm <hostname>

Check the cluster health once again to confirm the cluster is in a healthy state:

ceph -s

Final Steps

Clean up the OSD authentication keys. Repeat this step for each OSD that must be removed.

ceph auth del osd.<osd-id>

Validate the removal:

ceph auth list

Remove the OSD from the OSD map. Repeat this step for each OSD that should be removed:

ceph osd rm <osd-id>

Important

Note that the above command does not prepend “osd.” before the OSD ID.

At this point, you can delete the host from the cluster in Morpheus.
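Because each removal step must be repeated per OSD, and the final step drops the osd. prefix, it can help to print the per-OSD commands before running them. The sketch below (hypothetical helper name) only prints the commands used in this section for one numeric OSD ID; it executes nothing, and the rebalance waits and ceph-mon steps still have to be carried out as described above.

```shell
#!/usr/bin/env bash
# Dry run: print the per-OSD removal commands from this section, in order,
# for one numeric OSD ID. Nothing is executed.
osd_removal_plan() {
  local id=$1
  if ! [[ $id =~ ^[0-9]+$ ]]; then
    echo "OSD ID must be a number, got: $id" >&2
    return 1
  fi
  cat <<EOF
ceph osd out osd.${id}
# wait for the rebalance (all PGs active+clean) before continuing
systemctl stop ceph-osd@${id}.service
systemctl disable ceph-osd@${id}.service
ceph osd crush remove osd.${id}
# wait for the rebalance again
ceph auth del osd.${id}
ceph osd rm ${id}
EOF
}
```

`osd_removal_plan 3` prints the sequence for osd.3, ending with `ceph osd rm 3` without the prefix.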

Kubernetes Clusters

Requirements

Agent installation is required for Master and Worker Nodes. Refer to the Morpheus Agent section for additional information.

- Access to CloudFront, plus image copy access and permissions for System and Uploaded Images used in Cluster Layouts

Image(s) used in Cluster Layouts must either exist in the destination Cloud/resource or be copyable to the destination by Morpheus (typically applicable to non-public Clouds). For the initial provision, Morpheus System Images are streamed from CloudFront through Morpheus to the target destination. Subsequent provisions clone the local Image.

System Kubernetes Layouts require Master and Worker nodes to access the following over port 443 during K8s installation and configuration:

Morpheus Role permissions:

- Infrastructure: Clusters > Full is required for viewing, creating, editing and deleting Clusters.
- Infrastructure: Clusters > Read is required for viewing the Cluster list and detail pages.

Creating Kubernetes Clusters

Provisions a new Kubernetes Cluster in selected target Cloud using selected Layout.

Note

When deploying a highly-available Kubernetes cluster, note that Morpheus does not currently auto-deploy a load balancer. Additionally, when Morpheus runs the kubeadm init command in the background during cluster provisioning, it sets the --control-plane-endpoint flag to the first control plane node. This behavior is hard-coded. To achieve a highly-available cluster, users may wish to update the configured control plane endpoint, for example to a DNS name pointing to a load balancer. We are currently investigating product updates that would allow the user to specify such a DNS name before cluster provisioning begins. Alternatively, users can work around this issue by configuring and deploying their own custom Cluster Layouts.
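To see which control plane endpoint a provisioned cluster actually recorded, kubeadm's ClusterConfiguration can be read from the kubeadm-config ConfigMap in the kube-system namespace. The helper below is a hypothetical sketch that extracts the controlPlaneEndpoint field from that YAML.

```shell
#!/usr/bin/env bash
# Print the controlPlaneEndpoint from a kubeadm ClusterConfiguration read on
# stdin, with surrounding quotes removed; prints nothing if the field is absent.
control_plane_endpoint() {
  awk '$1 == "controlPlaneEndpoint:" { gsub(/"/, "", $2); print $2; exit }'
}
```

`kubectl -n kube-system get configmap kubeadm-config -o jsonpath='{.data.ClusterConfiguration}' | control_plane_endpoint` prints the recorded endpoint, which on a Morpheus-provisioned cluster would be expected to point at the first control plane node.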

Morpheus maintains a number of default Kubernetes Cluster Layouts, which are updated frequently to support current versions. AKS and GKE Kubernetes versions update dynamically to the providers’ supported versions. Morpheus also supports creation of custom Kubernetes Cluster Layouts, a process which is described in detail in a later section.

To create a new Kubernetes Cluster:

Navigate to Infrastructure > Clusters

Select + ADD CLUSTER

Select Kubernetes Cluster

Select a Group for the Cluster

Select NEXT

Populate the following:

- CLOUD

Select target Cloud

- CLUSTER NAME

Name for the Kubernetes Cluster

- RESOURCE NAME

Name for Kubernetes Cluster resources

- DESCRIPTION

Description of the Cluster

- VISIBILITY

- Public

Available to all Tenants

- Private

Available to Master Tenant

- LABELS

Internal label(s)

Select NEXT

Populate the following:

Note

VMware sample fields provided. Actual options depend on Target Cloud

- LAYOUT

Select from available layouts. System provided layouts include Single Master and Cluster Layouts.

- PLAN

Select plan for Kubernetes Master

- VOLUMES

Configure volumes for Kubernetes Master

- NETWORKS

Select the network for Kubernetes Master & Worker VMs

- CUSTOM CONFIG

Add custom Kubernetes annotations and config hash

- CLUSTER HOSTNAME

Cluster address Hostname (cluster layouts only)

- POD CIDR

Pod network range in CIDR format, e.g. 192.168.0.0/24 (cluster layouts only)

- WORKER PLAN

Plan for Worker Nodes (cluster layouts only)

- NUMBER OF WORKERS

Specify the number of workers to provision

- LOAD BALANCER

Select an available Load Balancer (cluster layouts only)

- User Config

- CREATE YOUR USER

Select to create your user on provisioned hosts (requires Linux user config in Morpheus User Profile)

- USER GROUP

Select User group to create users for all User Group members on provisioned hosts (requires Linux user config in Morpheus User Profile for all members of User Group)

- Advanced Options

- DOMAIN

Specify Domain override for DNS records

- HOSTNAME

Set hostname override (defaults to Instance name unless an Active Hostname Policy applies)
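The POD CIDR should not overlap networks the nodes already use, such as the network selected under NETWORKS, or pod and host traffic may be routed incorrectly. The hypothetical helper below checks whether two IPv4 CIDR blocks overlap using 32-bit integer arithmetic: two blocks overlap exactly when their addresses agree on the bits of the shorter prefix.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if two IPv4 CIDR blocks (e.g. 192.168.0.0/24) overlap.
cidr_overlap() {
  local net1=${1%/*} len1=${1#*/} net2=${2%/*} len2=${2#*/}
  local len=$(( len1 < len2 ? len1 : len2 ))
  # Network mask for the shorter prefix; for /0 every bit is shifted out.
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$net1") & mask) == ($(ip_to_int "$net2") & mask) ))
}
```

For example, `cidr_overlap 192.168.0.0/24 192.168.0.0/16 && echo "choose another POD CIDR"` reports a conflict if the nodes sit on 192.168.0.0/16.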

Select NEXT

Select optional Workflow to execute

Select NEXT

Review and select COMPLETE

The Master Node(s) will provision first.

- Upon successful completion of VM provision, Kubernetes scripts will be executed to install and configure Kubernetes on the Masters.

Note

Access to the sites listed in the Requirements section is required from Master and Worker nodes over port 443.

After Master or Masters are successfully provisioned and Kubernetes is successfully installed and configured, the Worker Nodes will provision in parallel.

- Provision status can be viewed:

  - From the Status next to the Cluster in Infrastructure > Clusters
  - From the status bar (with ETA and current step) on the Cluster detail page, accessible by selecting the Cluster name from Infrastructure > Clusters

- All process status and history is available:

  - From the Cluster detail page History tab, accessible by selecting the Cluster name from Infrastructure > Clusters and then the History tab
  - From Operations > Activity > History
  - Individual process output is available by clicking i on the target process

Once all Master and Worker Nodes are successfully provisioned and Kubernetes is installed and configured, the Cluster status will turn green.

Important

Cluster provisioning requires successful creation of VMs, Agent installation, and execution of Kubernetes workflows. Consult the process output from Infrastructure > Clusters > Details and the current morpheus-ui logs at Administration > Health > Morpheus Logs for information on failed Clusters.

Intra-Kubernetes Cluster Port Requirements

The table below includes port requirements for the machines within the cluster (not for the Morpheus appliance itself). Check that the following ports are open on Control-plane and Worker nodes:

Control-plane nodes:

| Protocol | Direction | Port Range | Purpose | Used By |
|---|---|---|---|---|
| TCP | Inbound | 6443 | Kubernetes API Server | All |
| TCP | Inbound | 6783 | Weaveworks | |
| TCP | Inbound | 2379-2380 | etcd server client API | kube-apiserver, etcd |
| TCP | Inbound | 10250 | kubelet API | Self, Control plane |
| TCP | Inbound | 10251 | kube-scheduler | Self |
| TCP | Inbound | 10252 | kube-controller-manager | Self |

Worker nodes:

| Protocol | Direction | Port Range | Purpose | Used By |
|---|---|---|---|---|
| TCP | Inbound | 10250 | kubelet API | Self, Control plane |
| TCP | Inbound | 30000-32767 | NodePort Services | All |
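For scripted checks, the port tables can be encoded per node role. The hypothetical helper below prints each required port or range with its protocol, mirroring the tables; single ports can then be probed from a peer node with a tool such as `nc -zv <node-ip> <port>`.

```shell
#!/usr/bin/env bash
# Print the inbound ports from the tables for a node role.
# Hypothetical helper; entries are "port/protocol" or "first-last/protocol".
k8s_required_ports() {
  case $1 in
    control-plane)
      printf '%s\n' 6443/tcp 6783/tcp 2379-2380/tcp 10250/tcp 10251/tcp 10252/tcp ;;
    worker)
      printf '%s\n' 10250/tcp 30000-32767/tcp ;;
    *)
      echo "role must be control-plane or worker" >&2
      return 1 ;;
  esac
}
```

`k8s_required_ports worker` prints `10250/tcp` and `30000-32767/tcp`.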

Adding Worker Nodes

Navigate to Infrastructure > Clusters

Select MORE for the target cluster

Select ADD (type) Kubernetes Worker

Populate the following:

- NAME

Name of the Worker Node. Auto-populated with ${cluster.resourceName}-worker-${seq}

- DESCRIPTION

Description of the Worker Node, displayed in Worker tab on Cluster Detail pages, and on Worker Host Detail page

- CLOUD

Target Cloud for the Worker Node.

Select NEXT

Populate the following:

Note

VMware sample fields provided. Actual options depend on Target Cloud

- SERVICE PLAN

Service Plan for the new Worker Node

- NETWORK

Configure network options for the Worker node.

- HOST

If Host selection is enabled, optionally specify target host for new Worker node

- FOLDER

Optionally specify target folder for new Worker node

- Advanced Options

- DOMAIN

Specify Domain override for DNS records

- HOSTNAME

Set hostname override (defaults to Instance name unless an Active Hostname Policy applies)

Select NEXT

Select optional Workflow to execute

Select NEXT

Review and select COMPLETE

Note

Ensure a default StorageClass is available when using a Morpheus Kubernetes cluster with OpenEBS, so that Kubernetes specs or Helm templates that rely on the default StorageClass for Persistent Volume Claims can be used.
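Kubernetes marks the default StorageClass with the storageclass.kubernetes.io/is-default-class annotation, and `kubectl get storageclass` shows "(default)" next to it. The hypothetical helper below prints the patch body for that annotation. The StorageClass name in the usage line, openebs-hostpath, is an assumption; use a name listed by `kubectl get storageclass` on your cluster.

```shell
#!/usr/bin/env bash
# Print a kubectl patch body that sets the annotation Kubernetes uses to mark
# the default StorageClass ("true" by default; pass "false" to unset it).
default_sc_patch() {
  local value=${1:-true}
  printf '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"%s"}}}\n' "$value"
}
```

`kubectl patch storageclass openebs-hostpath -p "$(default_sc_patch)"` sets the annotation; passing false instead removes default status from a class.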

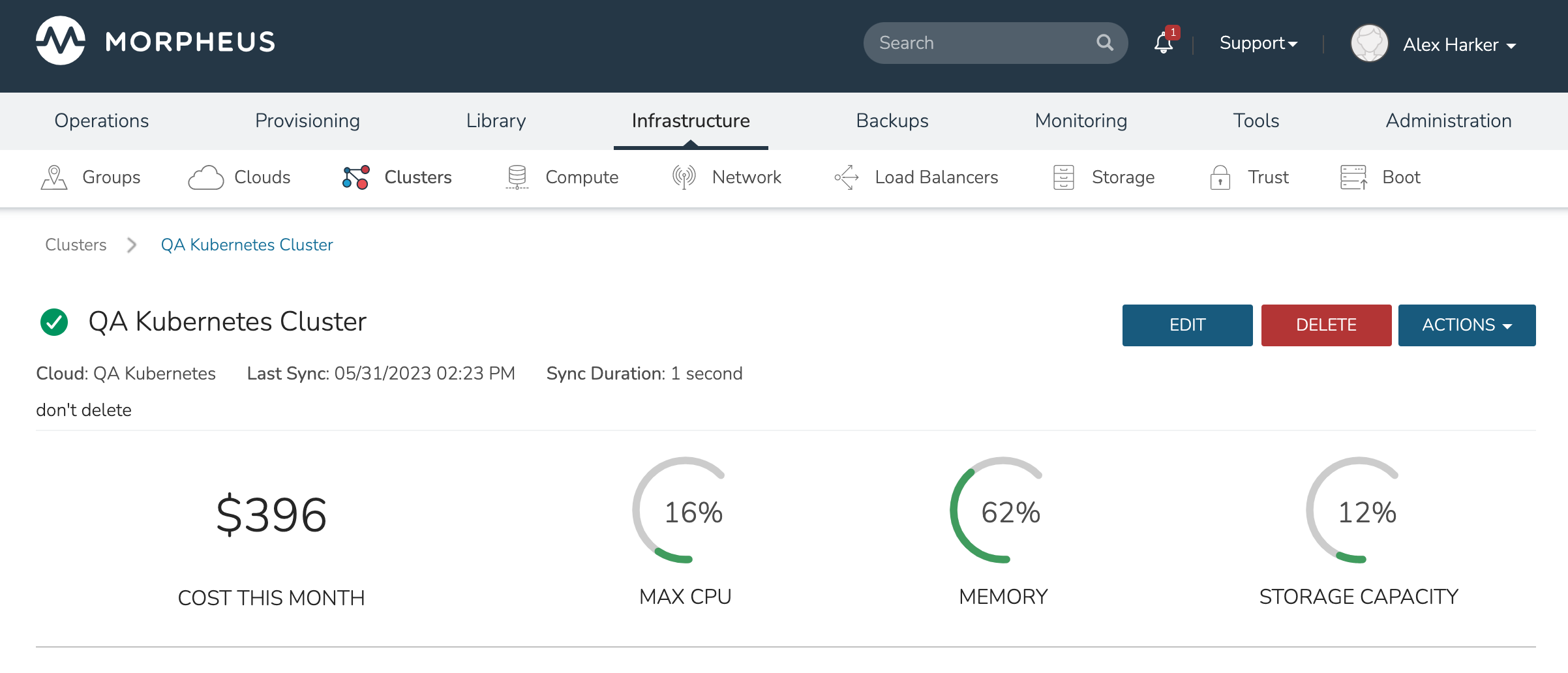

Kubernetes Cluster Detail Pages

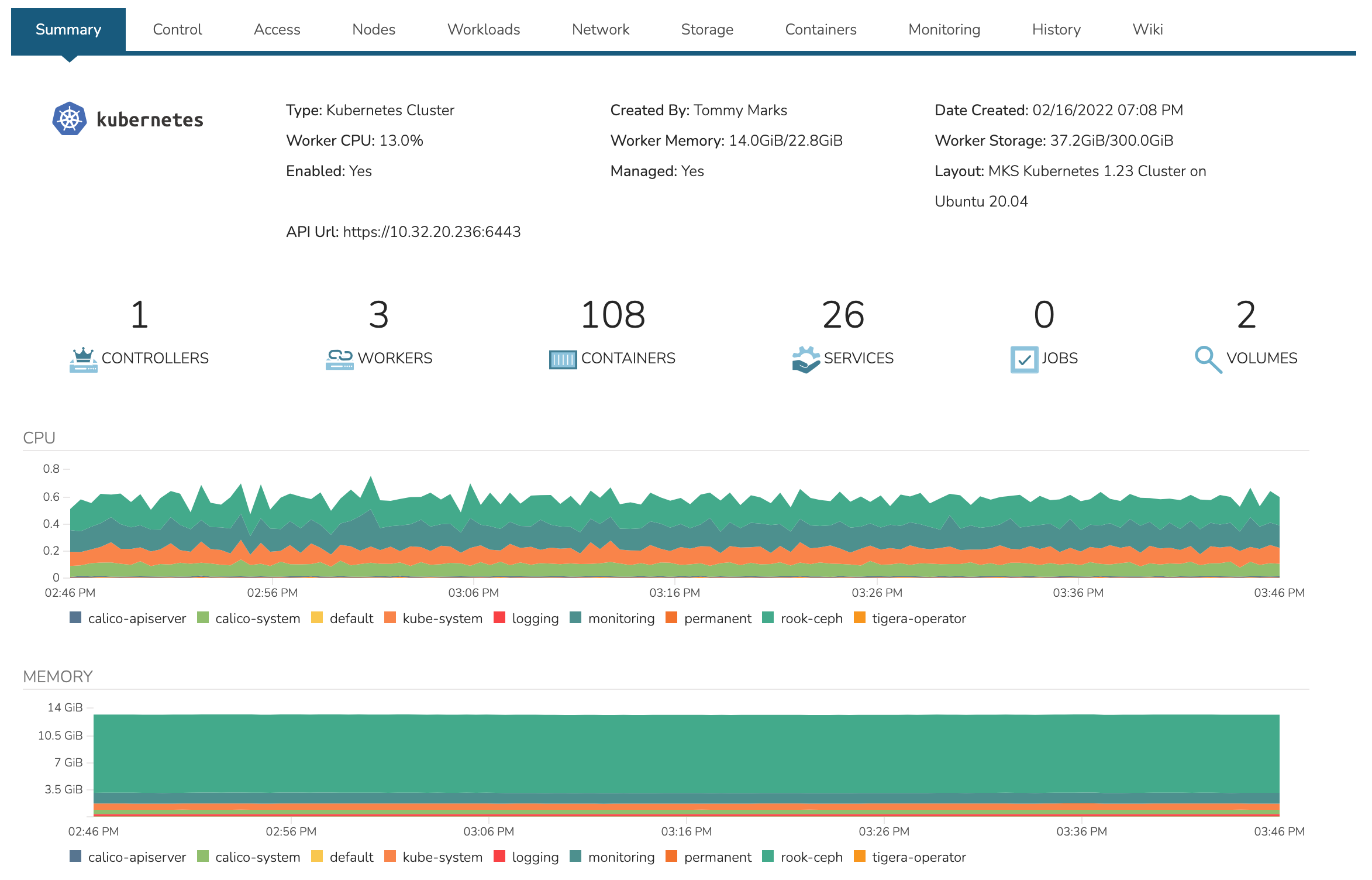

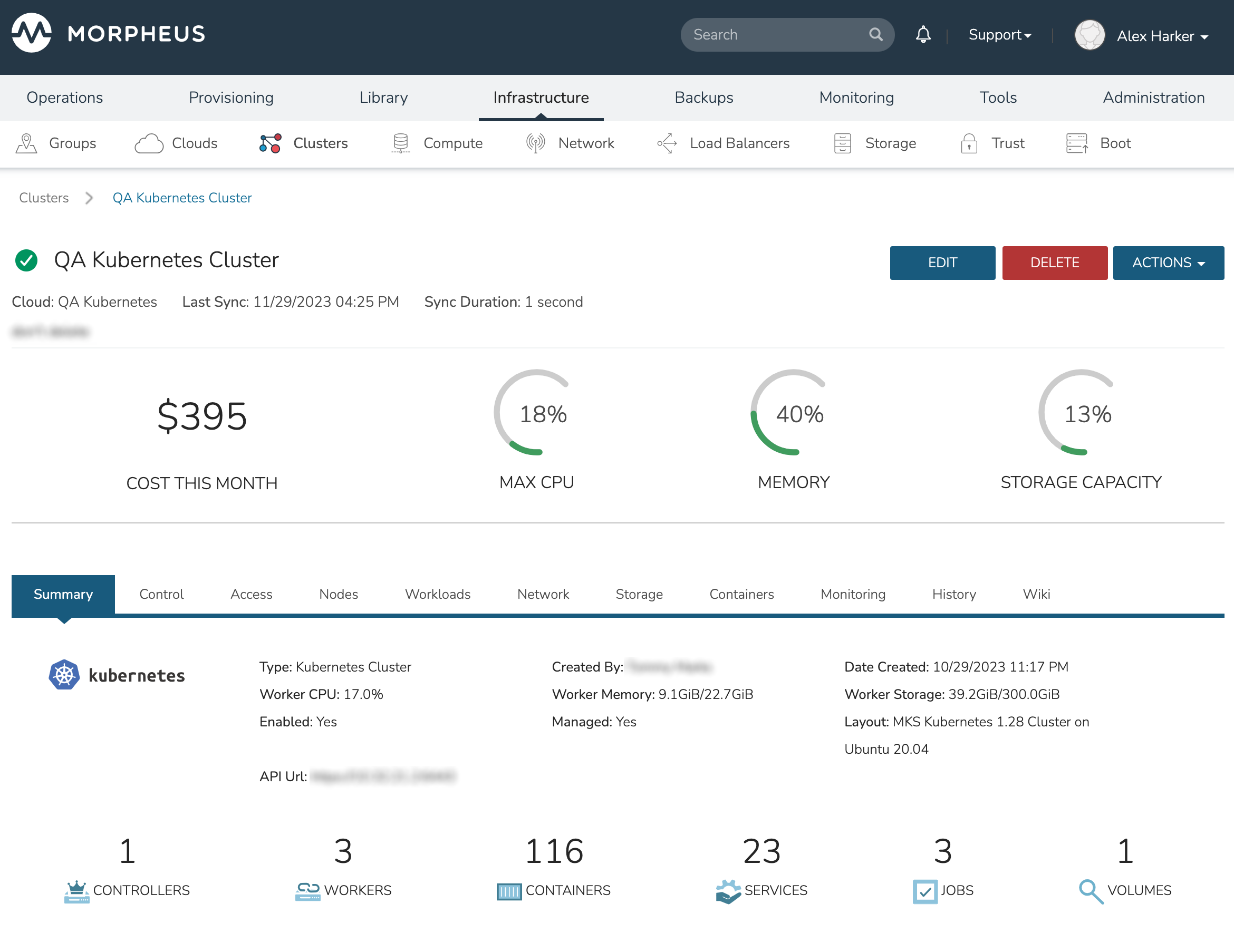

The Kubernetes Cluster Detail page provides a high degree of monitoring and control over Kubernetes Clusters. This includes monitoring of all nodes in the Cluster, kubectl command line, account and role control, workload management, and more. The upper section of the page (which is persistent regardless of the currently-selected tab) provides high level costing and monitoring information, including a current aggregate metric for the CPU, memory and storage use.

The upper section also includes the ACTIONS menu which includes the following functions:

REFRESH: Forces a routine sync of the cluster status

PERMISSIONS: View and edit the Group, Service Plan, and Tenant access permissions for the cluster

VIEW API TOKEN: Displays the API token for the cluster

VIEW KUBE CONFIG: Displays the cluster configuration

RUN WORKLOAD: Run deployments, stateful sets, daemon sets, or jobs and target them to a specific namespace

UPGRADE CLUSTER: Upgrade the cluster to a higher version of Kubernetes

ADD KUBERNETES WORKER: Launches a wizard which allows users to configure a new worker for the cluster

Additional monitoring and control panes are located within tabs, some of which contain subtabs.

The summary tab contains high-level details on health and makeup of the cluster.

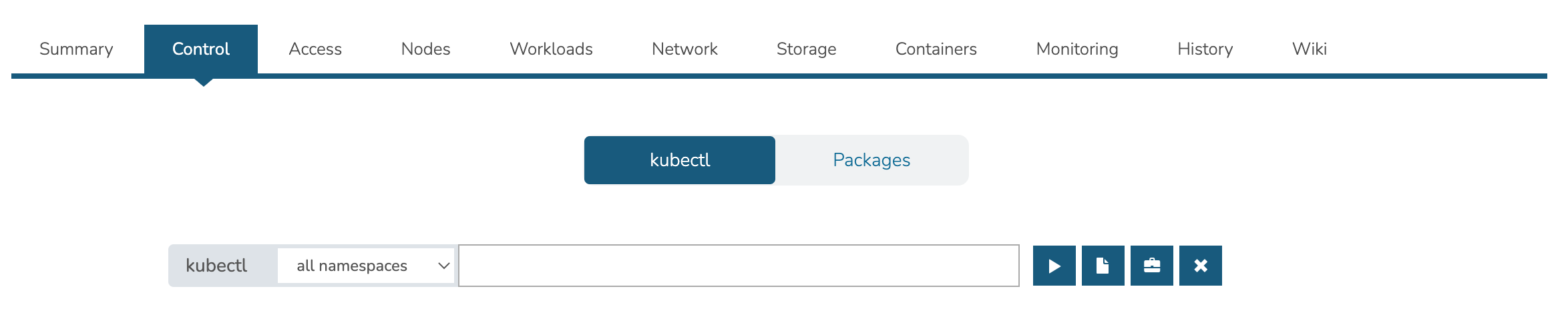

Contains the kubectl command line with ability to target commands to specific namespaces. The Control tab also contains the Packages subtab which displays the list of packages and their versions.

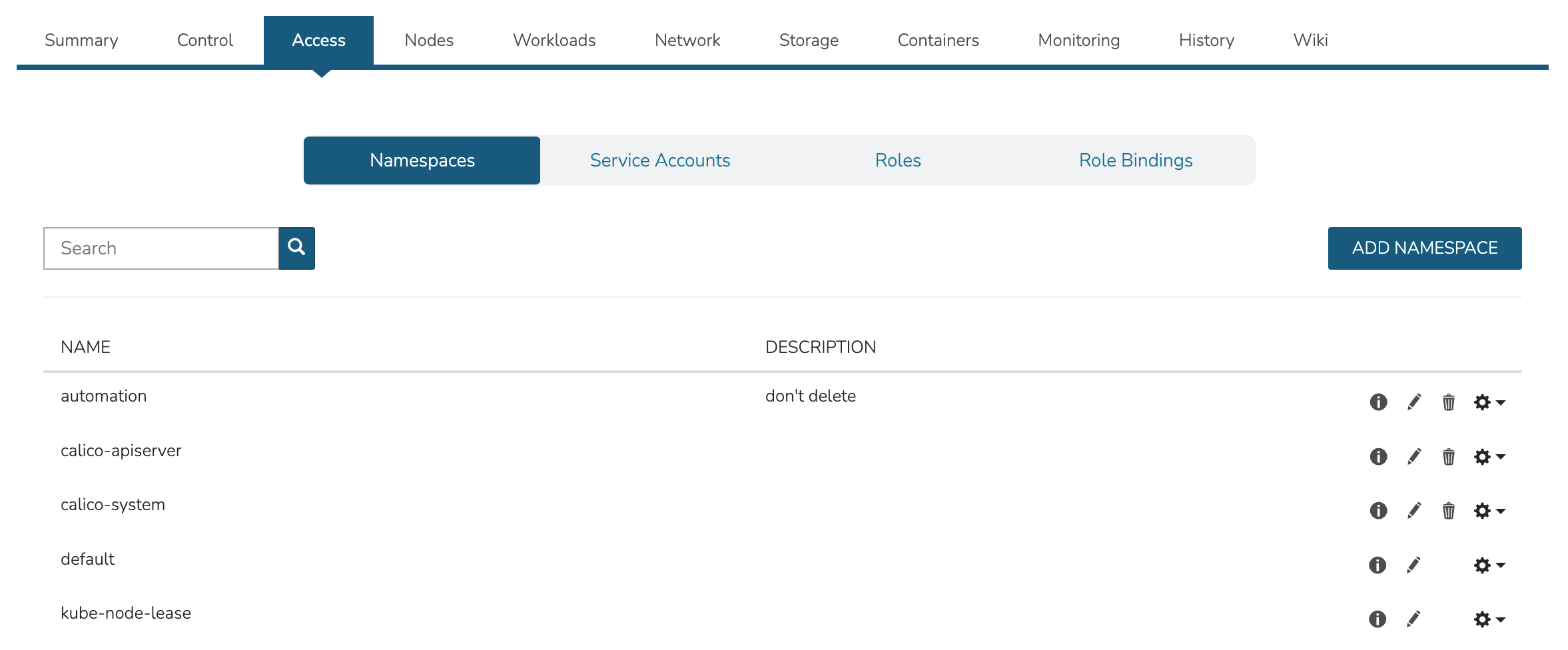

The Access Tab contains view and edit tools for Namespaces, accounts, roles, and role bindings.

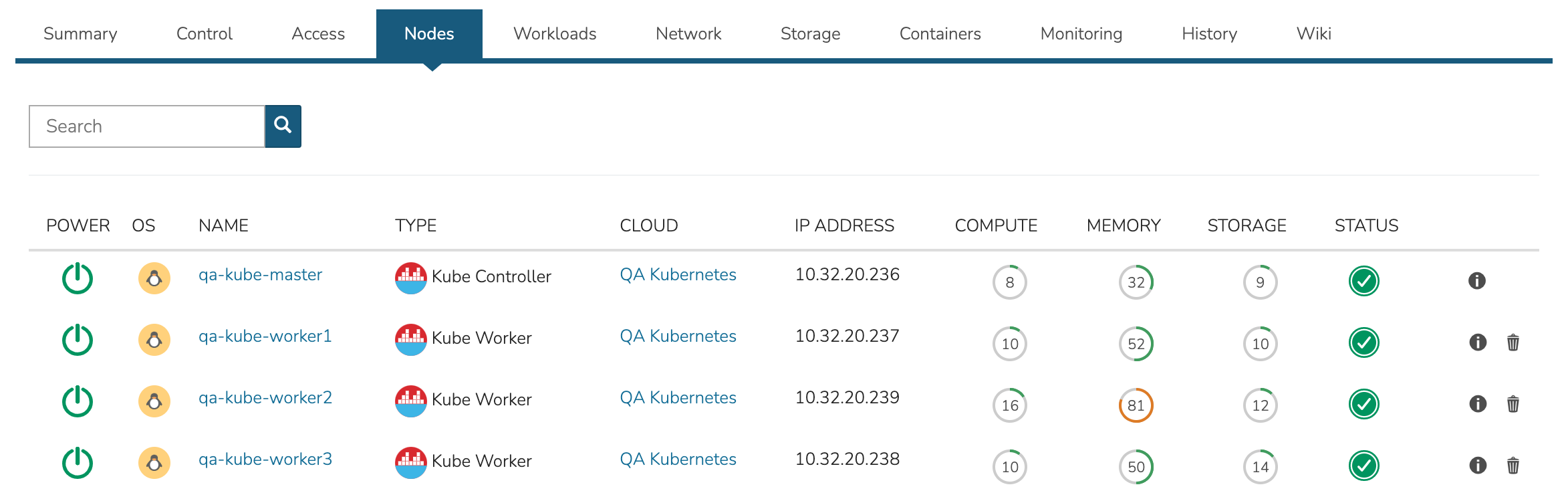

The nodes tab includes a list of master and worker nodes in the cluster, their statuses, and the current compute, memory, and storage pressure on each node.

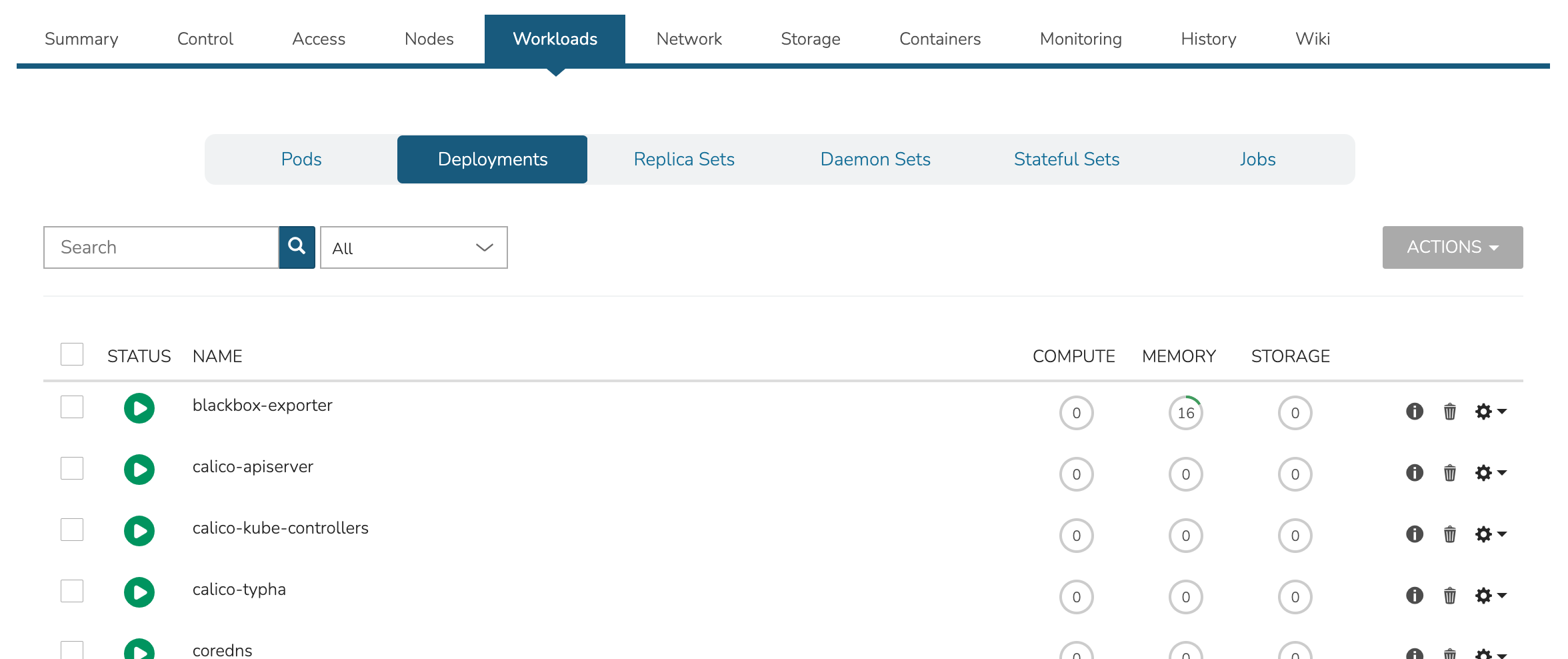

View and edit existing Pods, Deployments, Replica Sets, Daemon Sets, Stateful Sets, and Jobs. Add new Deployments, Stateful Sets, Daemon Sets, and Jobs through the ACTIONS menu near the top of the Cluster Detail Page.

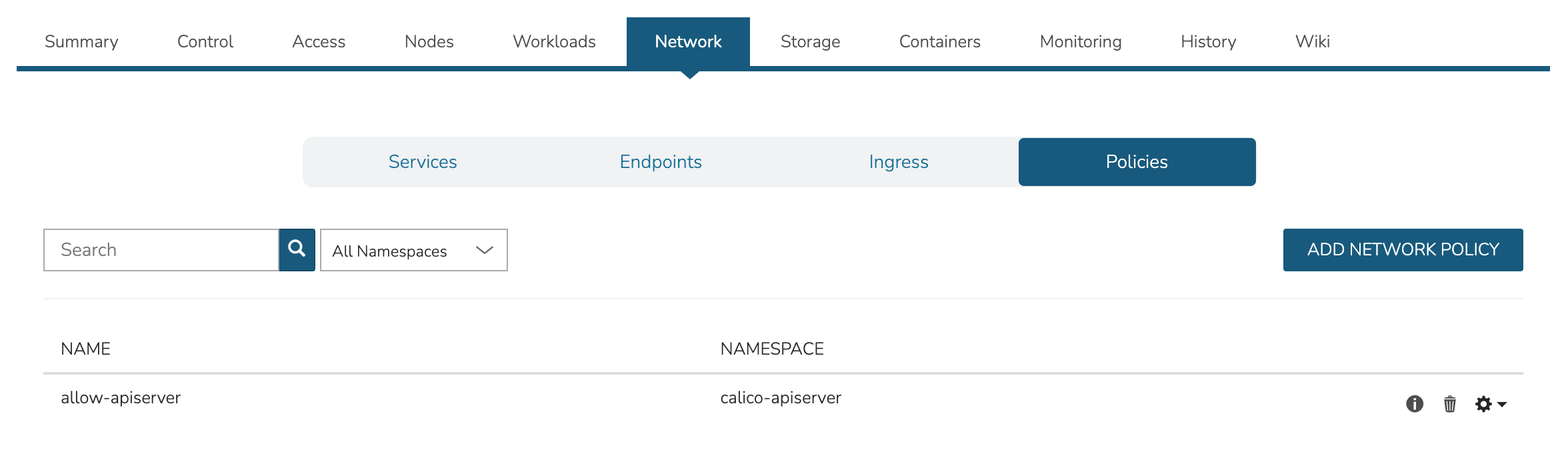

View, add, and edit Services, Endpoints, Ingress and Network Policies

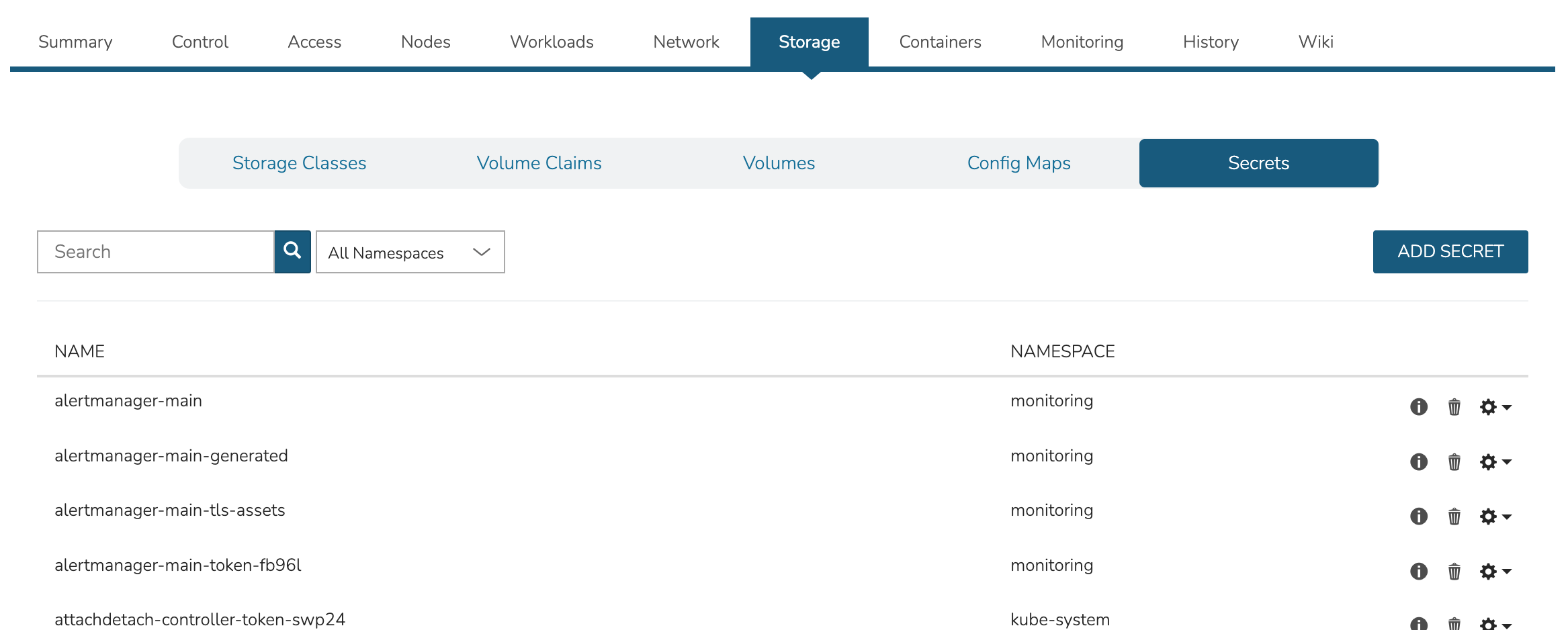

View, add, and edit Storage classes, Volume claims, Volumes, Config maps, and Secrets

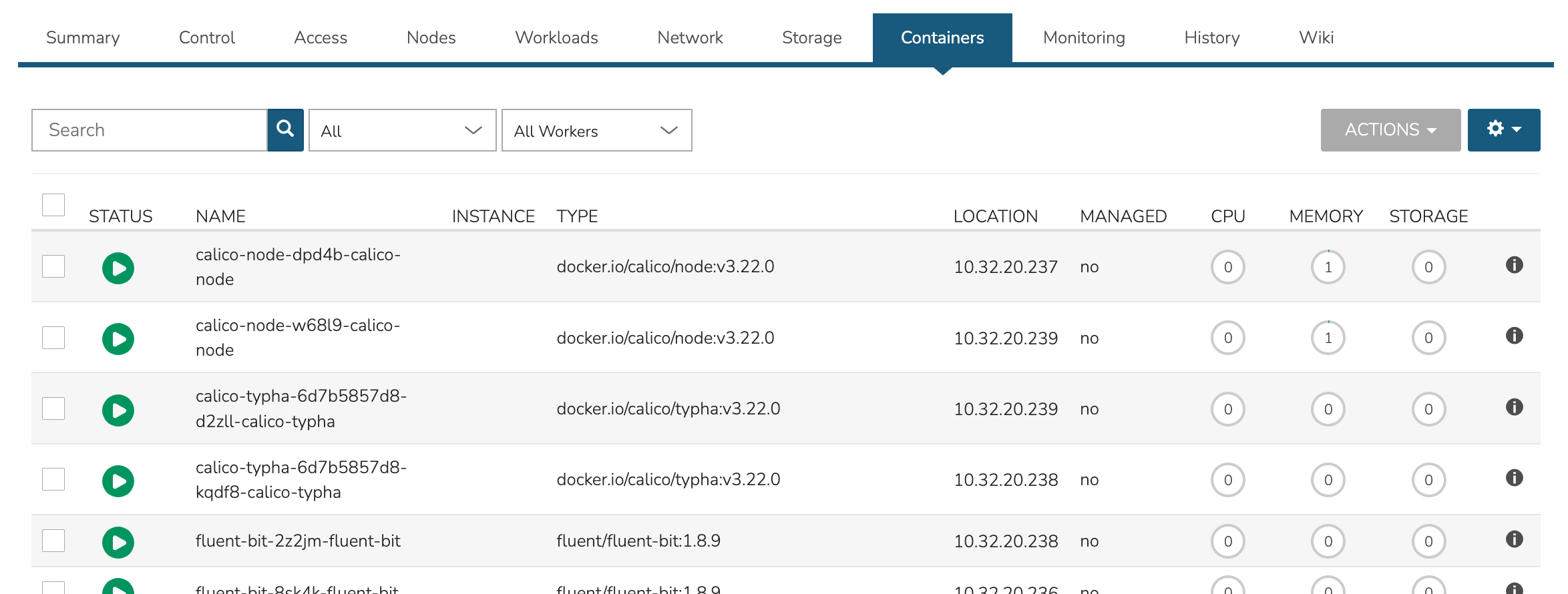

View a list of containers running on the cluster and restart or delete them if needed. This list can be filtered by Namespace or a specific Worker if desired.

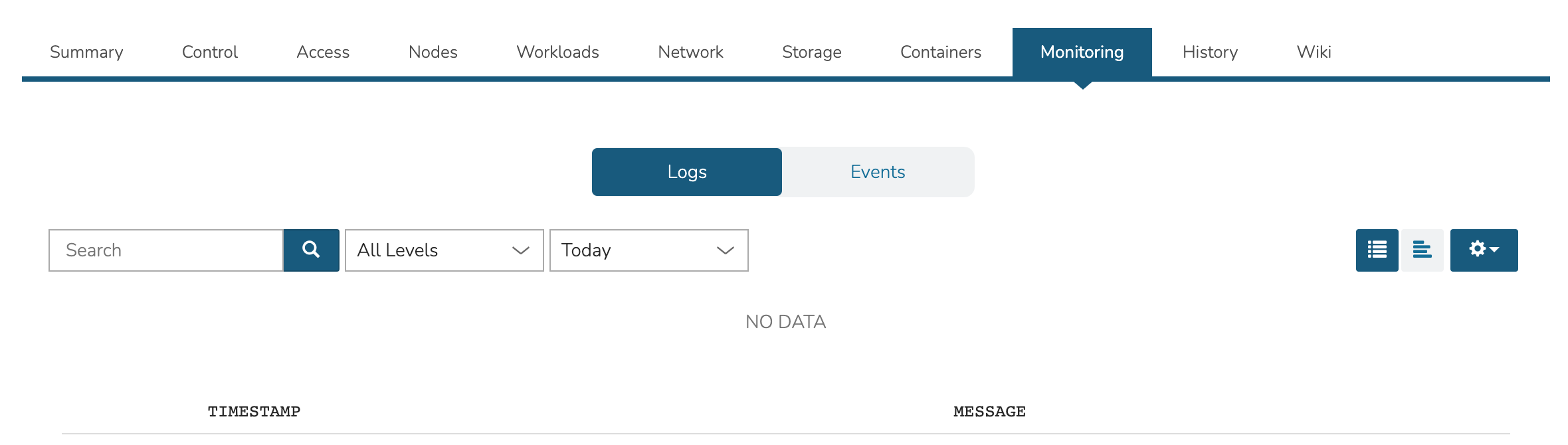

View logs and events with filtering tools and search functionality available.

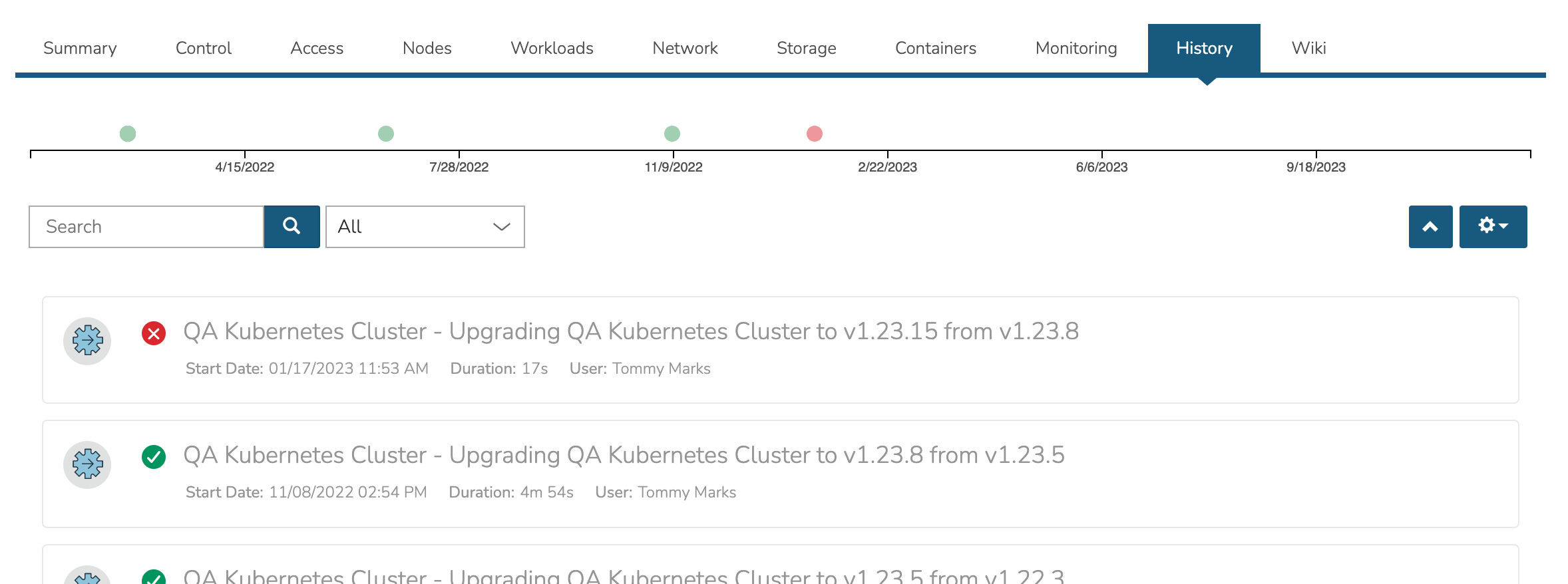

View the Cluster lifecycle history. This includes lists of automation packages (Tasks and Workflows) run against the cluster or its nodes, whether each run succeeded, and its output.

View the Morpheus Wiki entry for this Cluster. This Wiki page can also be viewed in the Wiki section (Operations > Wiki). Edit the Wiki as desired. Most standard Markdown syntax is honored, so you can use headings, links, embedded images, and more.

Adding External Kubernetes Clusters¶

Morpheus supports the management and consumption of Kubernetes clusters provisioned outside of Morpheus. These are called External Kubernetes Clusters in the Morpheus UI. You can use this, for example, to onboard and manage OpenShift clusters. To fully integrate the Kubernetes cluster with the Morpheus feature set, you may need to create a service account for Morpheus. Without that step, some features may not work fully, such as listing all namespaces. The process for creating a service account and integrating the Cluster with Morpheus is described below.

First, create the Service Account within the Kubernetes cluster:

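```shell
# Create the account in the "default" namespace, which the role binding below references
kubectl create serviceaccount morpheus --namespace=default
```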

Next, create the ClusterRoleBinding that grants the service account the cluster-admin role:

```shell
kubectl create clusterrolebinding morpheus-admin \
  --clusterrole=cluster-admin --serviceaccount=default:morpheus \
  --namespace=default
```
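Before you gather credentials, you can confirm that the binding took effect. The sketch below uses `kubectl auth can-i` with impersonation (`--as`) to check whether the `morpheus` service account can list namespaces, one of the capabilities mentioned above. The function name `check_morpheus_access` is hypothetical. The sketch assumes the account lives in the `default` namespace and that your own kubeconfig user is allowed to impersonate service accounts.

```shell
# Hypothetical helper: check whether a service account can list namespaces.
# Assumes the account was created in the "default" namespace as shown above
# and that the caller's kubeconfig user is permitted to impersonate it.
check_morpheus_access() {
  local sa="${1:-morpheus}" ns="${2:-default}" answer
  # kubectl auth can-i prints "yes" or "no" (and exits non-zero on "no");
  # --as impersonates the service account for this single check
  answer=$(kubectl auth can-i list namespaces \
    --as="system:serviceaccount:${ns}:${sa}" 2>/dev/null) || true
  if [ "$answer" = "yes" ]; then
    echo "ok: ${ns}/${sa} can list namespaces"
    return 0
  fi
  echo "missing: ${ns}/${sa} cannot list namespaces" >&2
  return 1
}

# Usage against a live cluster:
#   check_morpheus_access morpheus default
```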

With those items created, gather the API URL and the API token, which you will use to add the existing cluster to Morpheus in the next step:

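```shell
# API URL of the cluster in the current kubeconfig context
kubectl config view --minify | grep server | cut -f 2- -d ":" | tr -d " "

# Name of the token Secret for the morpheus service account
SECRET_NAME=$(kubectl get secrets --namespace=default | grep ^morpheus | cut -f1 -d ' ')

# API token stored in that Secret
kubectl describe secret "$SECRET_NAME" --namespace=default | grep -E '^token' | cut -f2 -d':' | tr -d " "
```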
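On Kubernetes 1.24 and later, creating a service account no longer creates a long-lived token Secret automatically. As a result, the `kubectl get secrets | grep ^morpheus` step above may return nothing. One way to get a long-lived token is to create a Secret of type `kubernetes.io/service-account-token` annotated with the service account name, after which Kubernetes fills in the token. The helper below is a hypothetical sketch that prints such a manifest. The Secret name `morpheus-token` is an assumption, chosen so that it still starts with `morpheus` and is picked up by the token commands above.

```shell
# Hypothetical helper: print a Secret manifest that asks Kubernetes to issue a
# long-lived token for an existing service account (needed on Kubernetes 1.24+).
sa_token_secret_manifest() {
  local sa="${1:-morpheus}" ns="${2:-default}"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${sa}-token
  namespace: ${ns}
  annotations:
    kubernetes.io/service-account.name: ${sa}
type: kubernetes.io/service-account-token
EOF
}

# Usage against a live cluster:
#   sa_token_secret_manifest morpheus default | kubectl apply -f -
```

After applying the manifest, run the token commands above again.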

After finishing those steps, create the external cluster in Morpheus. Navigate to Infrastructure > Clusters, click + ADD CLUSTER, and then select “External Kubernetes Cluster”. Set the following fields. You will need to advance through the pages of the wizard to see all of them:

GROUP: A previously created Morpheus Group

CLOUD: A previously integrated Cloud

CLUSTER NAME: A friendly name for the onboarded cluster in the Morpheus UI

RESOURCE NAME: The resource name is prepended to Kubernetes hosts associated with this cluster when they are shown in the Morpheus UI

LAYOUT: Set an associated Layout

API URL: Enter the API URL gathered in the previous step

API TOKEN: Enter the API token gathered in the previous step

KUBE CONFIG: Enter the kubeconfig YAML used to authenticate to the cluster

These are the required fields. Others can be configured optionally, depending on the situation. Complete the wizard, and Morpheus will begin onboarding the existing cluster into management within the Morpheus UI. Once onboarding finishes and statuses are green, the cluster can be monitored and consumed like any other cluster provisioned from Morpheus.
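If you do not already have a kubeconfig to paste into KUBE CONFIG, one option is to assemble a minimal token-based kubeconfig from the API URL and token gathered earlier. The sketch below is hypothetical. The name `morpheus` used for the cluster, user, and context is a placeholder, and `API_URL`, `TOKEN`, and `CA_DATA` are illustrative variable names. It expects the cluster CA certificate as base64 data, which is the format `certificate-authority-data` uses. This page does not state whether Morpheus needs any fields beyond these.

```shell
# Hypothetical helper: print a minimal kubeconfig that authenticates with a
# bearer token. Arguments: API URL, token, base64-encoded CA certificate.
# "morpheus" as the cluster/user/context name is a placeholder.
build_kubeconfig() {
  local server="$1" token="$2" ca_data="$3"
  if [ -z "$ca_data" ]; then
    echo "build_kubeconfig: CA data is required" >&2
    return 1
  fi
  cat <<EOF
apiVersion: v1
kind: Config
clusters:
- name: morpheus
  cluster:
    server: ${server}
    certificate-authority-data: ${ca_data}
users:
- name: morpheus
  user:
    token: ${token}
contexts:
- name: morpheus
  context:
    cluster: morpheus
    user: morpheus
current-context: morpheus
EOF
}

# Usage (assumes your own kubeconfig embeds the CA as data, not a file path):
#   CA_DATA=$(kubectl config view --minify --raw \
#     -o jsonpath='{.clusters[0].cluster.certificate-authority-data}')
#   build_kubeconfig "$API_URL" "$TOKEN" "$CA_DATA" > morpheus-kubeconfig.yaml
```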

Docker Clusters¶

Provisions a new Docker Cluster managed by Morpheus.

To create a new Docker Cluster:

Navigate to Infrastructure > Clusters

Select + ADD CLUSTER

Select Docker Cluster

Populate the following:

- CLOUD

Select target Cloud

- CLUSTER NAME

Name for the Docker Cluster

- RESOURCE NAME

Name for Docker Cluster resources

- DESCRIPTION

Description of the Cluster

- VISIBILITY

- Public

Available to all Tenants

- Private

Available to Master Tenant

- LABELS

Internal label(s)

Select NEXT

Populate the following (options depend on Cloud Selection and will vary):

- LAYOUT

Select from available layouts.

- PLAN

Select plan for Docker Host

- VOLUMES

Configure volumes for Docker Host

- NETWORKS

Select the network for Docker Master & Worker VMs

- NUMBER OF HOSTS

Specify the number of hosts to be created

- User Config

- CREATE YOUR USER

Select to create your user on provisioned hosts (requires Linux user config in Morpheus User Profile)

- USER GROUP

Select User group to create users for all User Group members on provisioned hosts (requires Linux user config in Morpheus User Profile for all members of User Group)

- Advanced Options

- DOMAIN

Specify Domain for DNS records

- HOSTNAME

Set hostname (defaults to Instance name)

Select NEXT

Select optional Workflow to execute

Select NEXT

Review and select COMPLETE

EKS Clusters¶

Provisions a new Elastic Kubernetes Service (EKS) Cluster in target AWS Cloud.

Note

EKS Cluster provisioning differs from creating a Kubernetes Cluster type in AWS EC2. That option creates EC2 instances and configures Kubernetes on them outside of EKS.

Morpheus currently supports EKS in the following regions: us-east-2, us-east-1, us-west-1, us-west-2, af-south-1, ap-east-1, ap-south-1, ap-northeast-3, ap-northeast-2, ap-southeast-1, ap-southeast-2, ap-northeast-1, ca-central-1, eu-central-1, eu-west-1, eu-west-2, eu-south-1, eu-west-3, eu-north-1, me-south-1, sa-east-1, us-gov-east-1, us-gov-west-1
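If you automate cluster requests, a simple pre-flight check can catch an unsupported region before provisioning starts. The function below is a hypothetical sketch that hard-codes the region list above. It will go stale if the documented list changes.

```shell
# Regions listed on this page as supported for EKS in Morpheus
# (copied verbatim; update if the documented list changes).
EKS_SUPPORTED_REGIONS="us-east-2 us-east-1 us-west-1 us-west-2 af-south-1 ap-east-1
ap-south-1 ap-northeast-3 ap-northeast-2 ap-southeast-1 ap-southeast-2
ap-northeast-1 ca-central-1 eu-central-1 eu-west-1 eu-west-2 eu-south-1
eu-west-3 eu-north-1 me-south-1 sa-east-1 us-gov-east-1 us-gov-west-1"

# Hypothetical helper: succeed (exit 0) if the region is in the list above.
eks_region_supported() {
  local region="$1" r
  # Unquoted expansion splits the list on spaces and newlines
  for r in $EKS_SUPPORTED_REGIONS; do
    [ "$r" = "$region" ] && return 0
  done
  return 1
}

# Usage:
#   eks_region_supported "$AWS_REGION" || echo "EKS not supported in $AWS_REGION"
```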

Create an EKS Cluster¶

Navigate to Infrastructure > Clusters

Select + ADD CLUSTER

Select EKS Cluster

Populate the following:

- LAYOUT

Select server layout for EKS Cluster

- PUBLIC IP

- Subnet Default

Use AWS configured Subnet setting for Public IP assignment

- Assigned EIP

Assigns an Elastic IP to Controller and Worker Nodes. Requires available EIPs

- CONTROLLER ROLE

Select Role for EKS Controller from synced role list

- CONTROLLER SUBNET

Select subnet placement for EKS Controller

- CONTROLLER SECURITY GROUP

Select Security Group assignment for EKS Controller

- WORKER SUBNET

Select Subnet placement for Worker Nodes

- WORKER SECURITY GROUP

Select Security Group assignment for Worker Nodes

- WORKER PLAN

Select Service Plan (EC2 Instance Type) for Worker Nodes

- User Config

- CREATE YOUR USER

Select to create your user on provisioned hosts (requires Linux user config in Morpheus User Profile)

- USER GROUP

Select User group to create users for all User Group members on provisioned hosts (requires Linux user config in Morpheus User Profile for all members of User Group)

- Advanced Options

- DOMAIN

Specify Domain for DNS records

- HOSTNAME

Set hostname (defaults to Instance name)

Select NEXT

Select optional Workflow to execute

Select NEXT

Review and select COMPLETE

GKE Clusters¶

Provisions a new Google Kubernetes Engine (GKE) Cluster in target Google Cloud.

Note

Ensure that the service account used by the Google Cloud integration has the permissions needed to create, inventory, and manage GKE clusters.
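To see which project-level IAM roles that service account currently holds, you can use the `gcloud projects get-iam-policy` filter pattern shown below. This page does not specify which roles are sufficient. Kubernetes Engine Admin (`roles/container.admin`) is a common choice, but treat that as an assumption and check your organization's policy. The function names and arguments are hypothetical.

```shell
# Hypothetical helper: list the project-level IAM roles granted to a service
# account. Arguments: project ID, service account email.
gke_sa_roles() {
  local project="$1" sa_email="$2"
  gcloud projects get-iam-policy "$project" \
    --flatten="bindings[].members" \
    --filter="bindings.members:${sa_email}" \
    --format="value(bindings.role)"
}

# Hypothetical helper: succeed if the account holds the given role exactly.
gke_sa_has_role() {
  gke_sa_roles "$1" "$2" | grep -qxF "$3"
}

# Usage:
#   gke_sa_has_role my-project morpheus@my-project.iam.gserviceaccount.com roles/container.admin
```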

Create a GKE Cluster¶

Navigate to Infrastructure > Clusters

Select + ADD CLUSTER

Select GKE Cluster

Populate the following:

- CLOUD

Select target Cloud

- CLUSTER NAME

Name for the GKE Cluster

- RESOURCE NAME

Name for GKE Cluster resources/hosts

- DESCRIPTION

Description of the Cluster

- VISIBILITY

- Public

Available to all Tenants

- Private

Available to Master Tenant

- LABELS

Internal label(s)

- LAYOUT

Select cluster layout for GKE Cluster

- RESOURCE POOL

Specify an available Resource Pool from the selected Cloud

- GOOGLE ZONE

Specify Region for the cluster

- VOLUMES

Cluster hosts volume size and type

- NETWORKS

Select GCP subnet(s) and config

- WORKER PLAN

Service Plan for GKE worker nodes

- RELEASE CHANNEL

Regular, Rapid, Stable or Static

- CONTROL PLANE VERSION

Select from the available synced GKE Kubernetes versions

- NUMBER OF WORKERS

Number of worker nodes to be provisioned

Select NEXT

Select optional Workflow to execute

Select NEXT

Review and select COMPLETE