Distributed Workers

Overview

The HPE Morpheus Enterprise distributed worker is installed using the same package as the VDI Gateway worker. Organizations that have already deployed one or more VDI Gateways can use the same worker for both purposes: update the configuration in /etc/morpheus/morpheus-worker.rb and run a reconfigure. When a distributed worker or VDI Gateway object is created in the HPE Morpheus Enterprise UI, an API key is generated. Adding one or both types of API key to the worker configuration file determines whether the worker runs in VDI Gateway mode, distributed worker mode, or both.

Supported Cloud Types

The following Cloud/Zone types support Distributed Workers:

vmware

vmwareCloudAws

nutanix

openstack

xenserver

macstadium

Installation

A distributed worker VM is installed and configured much like an HPE Morpheus Enterprise appliance, via an rpm or deb package.

Note

Package URLs for the distributed worker are available at https://app.morpheushub.com in the downloads section.

Note

The distributed worker requires that the HPE Morpheus Enterprise appliance present a trusted SSL certificate. This can be accomplished either by configuring a publicly trusted SSL certificate on the HPE Morpheus Enterprise appliance (or load balancer), or by adding the certificate and its chain to the Java Keystore of the Distributed Worker so that the worker trusts the certificate.

Requirements

| OS | Version(s) |
|---|---|
| Amazon Linux | 2 |
| CentOS | 7.x, 8.x |
| Debian | 10, 11 |
| RHEL | 7.x, 8.x |
| SUSE SLES | 12 |
| Ubuntu | 18.04, 20.04, 22.04 |

Memory: 4 GB RAM minimum recommended

Storage: 10 GB storage minimum recommended. Storage is required for installation packages and log files

CPU: 4-core minimum recommended

Network connectivity to the HPE Morpheus Enterprise appliance over TCP 443 (HTTPS)

Superuser privileges via the sudo command for the user installing the HPE Morpheus Enterprise worker package

Access to base yum or apt repos. Access to Optional RPM repos may be required for RPM distros
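The minimums above can be checked on a candidate VM before installing. The following is a minimal sketch rather than an official tool: the function names, the default check path of `/`, and the 10% memory allowance are assumptions made for illustration, and it relies on Linux-specific sources (`nproc`, `/proc/meminfo`).

```shell
# Minimums taken from the requirements list above.
MIN_CPUS=4
MIN_DISK_KB=$((10 * 1024 * 1024))             # 10 GB
# Assumption: allow roughly 10% slack, because MemTotal excludes memory
# reserved by the kernel and so reads slightly under the provisioned size.
MIN_MEM_KB=$((4 * 1024 * 1024 * 90 / 100))

# meets_minimum ACTUAL REQUIRED: succeeds when ACTUAL >= REQUIRED (integers).
meets_minimum() {
  [ "${1:-0}" -ge "$2" ]
}

# preflight [PATH]: report which minimums the local host misses.
# PATH is the filesystem to measure free space on (assumed default: /).
preflight() {
  local path="${1:-/}" status=0 cpus mem_kb disk_kb
  cpus="$(nproc)"
  mem_kb="$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"
  disk_kb="$(df -Pk "$path" | awk 'NR==2 {print $4}')"
  meets_minimum "$cpus" "$MIN_CPUS" || { echo "CPU: $cpus cores, want $MIN_CPUS"; status=1; }
  meets_minimum "$mem_kb" "$MIN_MEM_KB" || { echo "Memory: ${mem_kb} kB, want about 4 GB"; status=1; }
  meets_minimum "$disk_kb" "$MIN_DISK_KB" || { echo "Disk: ${disk_kb} kB free on $path, want 10 GB"; status=1; }
  return "$status"
}

# can_reach_appliance URL: succeeds when an HTTPS request to the appliance
# completes. curl validates against the system CA bundle, not the worker's
# Java Keystore, so treat a pass here as indicative only.
can_reach_appliance() {
  curl -sS -o /dev/null --connect-timeout 5 "$1"
}
```

For example, `preflight / && can_reach_appliance https://morpheus_appliance_url` would exit non-zero if any minimum is missed or the appliance cannot be reached over HTTPS.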

Important

To proxy VMware vCenter Cloud traffic through a Distributed Worker, you must have a static public DNS entry for the internal IP address of the vCenter appliance. Without it, everything may appear to work when configuring the Cloud, but problems will arise at provision time. This is not an HPE Morpheus Enterprise limitation; it is a limitation of the VMware SDK client, which does not natively support proxies.

Download the appropriate package from HPE Morpheus Enterprise Hub based on your target Linux distribution and version for installation in a directory of your choosing. The package can be removed after successful installation.

wget https://downloads.morpheusdata.com/path/to/morpheus-worker-$version.distro

Validate the package checksum as compared with the values indicated on Hub. For example:

sha256sum morpheus-worker-$version.distro
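Comparing digests by eye is error-prone, so the comparison can be scripted. This is a small sketch; `verify_checksum` is a hypothetical helper name, and the expected digest is whatever value Hub lists for your package.

```shell
# verify_checksum FILE EXPECTED_SHA256
# Returns 0 on a match, 1 on a mismatch, 2 if the file cannot be read.
verify_checksum() {
  local file="$1" expected="$2" actual
  # sha256sum prints "<digest>  <filename>"; keep only the digest.
  actual="$(sha256sum "$file" 2>/dev/null | awk '{print $1}')"
  [ -n "$actual" ] || return 2
  # Compare case-insensitively, since digests may be published in either case.
  [ "$(printf '%s' "$actual" | tr 'A-F' 'a-f')" = "$(printf '%s' "$expected" | tr 'A-F' 'a-f')" ]
}
```

A typical call would be `verify_checksum morpheus-worker-$version.distro "<digest from Hub>" && echo "checksum OK"`, installing only when it succeeds.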

Next, install the package using your distribution’s package installation command and your preferred options. For example, with rpm:

$ sudo rpm -ihv morpheus-worker-$version.$distro

Preparing... ################################# [100%]

Updating / installing...

1:morpheus-worker-x.x.x-1.$distro ################################# [100%]

Thank you for installing Morpheus Worker!

Configure and start the Worker by running the following command:

sudo morpheus-worker-ctl reconfigure

Configuration

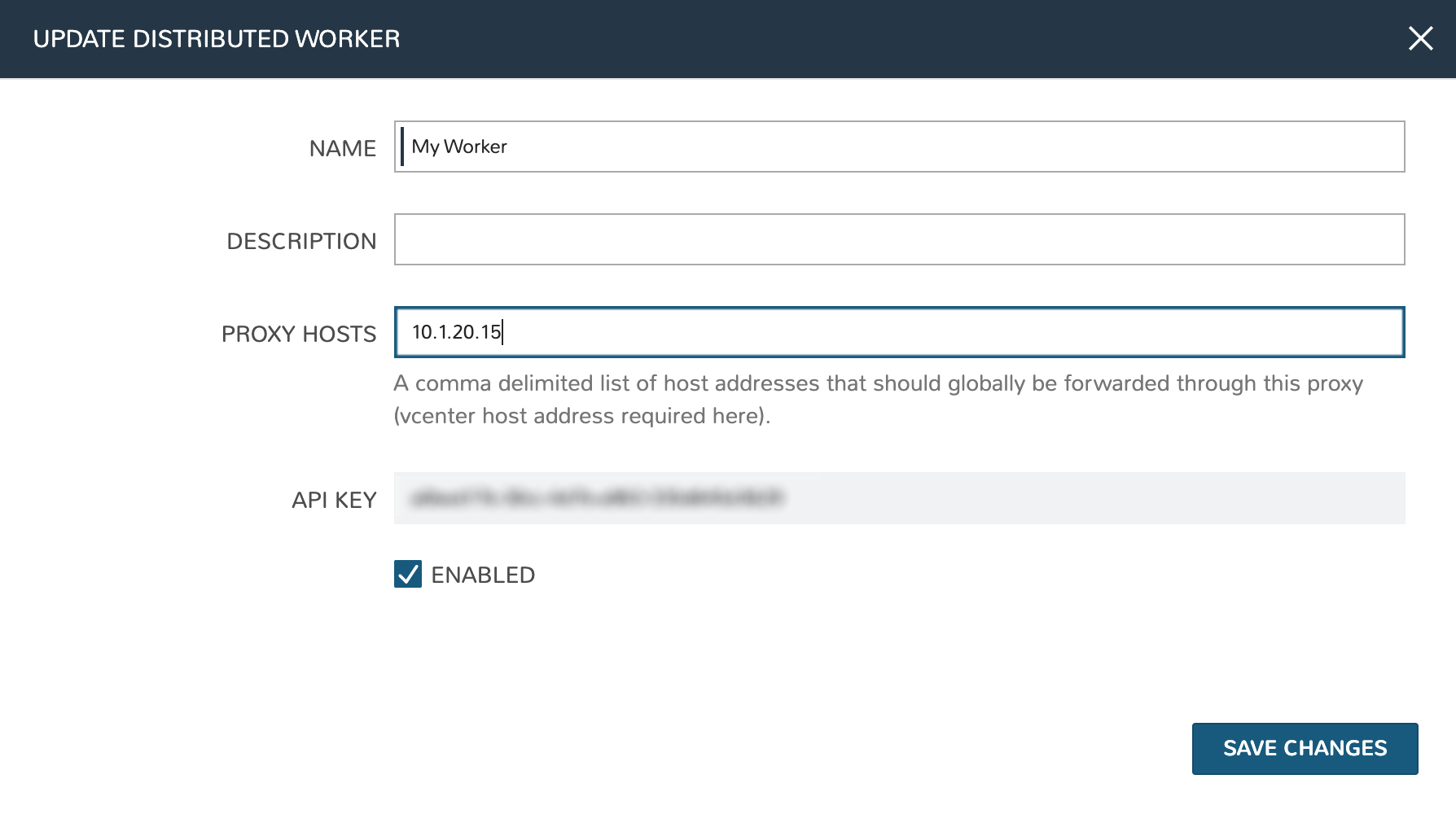

With the package installed, the next step is to add a new distributed worker in the HPE Morpheus Enterprise UI. Distributed workers are added in Administration > Integrations > Distributed Workers. To create one, populate the following fields:

NAME: A name for the distributed worker in HPE Morpheus Enterprise

DESCRIPTION: An optional description for the distributed worker

PROXY HOSTS: A comma-delimited list of global proxy hosts. Any endpoint listed here will be proxied through the HPE Morpheus Enterprise worker. For VMware, you must list the host addresses for any vCenter you wish to proxy through the worker. Xen hosts and PowerVC hosts must be listed here as well. Other Cloud types supported by the HPE Morpheus Enterprise worker only need the worker selected on the Edit Cloud modal (Infrastructure > Clouds > Selected Cloud > Edit button)

ENABLED: When marked, the selected worker is available for use

Important

The proxy host URL entered in the Worker configuration must match the URL set in the Cloud configuration. That is, if a hostname URL is used in the Cloud configuration, the same URL must be used in the Worker configuration. The reverse is also true: if an IP address is used in the Cloud configuration, that IP address should be used in the Worker configuration as well. There are also configuration considerations for proxying vCenter Cloud traffic through a Distributed Worker. See the “IMPORTANT” box in the “Requirements” section for additional details.
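The matching rule can be checked mechanically before saving. The sketch below assumes the comparison that matters is on the host portion of the Cloud URL; `url_host` and `host_in_proxy_list` are hypothetical helper names, and IPv6 literals are not handled.

```shell
# url_host URL: print the lowercase host, with scheme, credentials,
# port and path stripped. A bare host or IP passes through unchanged.
url_host() {
  local h="${1#*://}"   # drop the scheme, if present
  h="${h%%/*}"          # drop the path
  h="${h##*@}"          # drop any user info
  h="${h%%:*}"          # drop the port
  printf '%s\n' "$h" | tr 'A-Z' 'a-z'
}

# host_in_proxy_list HOST "entry1,entry2,...": succeeds when HOST appears
# in the comma-delimited PROXY HOSTS value (entries may be hosts or URLs).
host_in_proxy_list() {
  local want entry
  want="$(url_host "$1")"
  local IFS=','
  for entry in $2; do
    entry="$(url_host "$(printf '%s' "$entry" | tr -d '[:space:]')")"
    [ "$entry" = "$want" ] && return 0
  done
  return 1
}
```

With a Cloud URL of `https://vcenter.example.com/sdk`, a PROXY HOSTS value that lists only the vCenter IP address would fail this check, which is the mismatch described above.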

After clicking SAVE CHANGES, an API key is generated and displayed. Make note of this as it will be needed in a later configuration step.

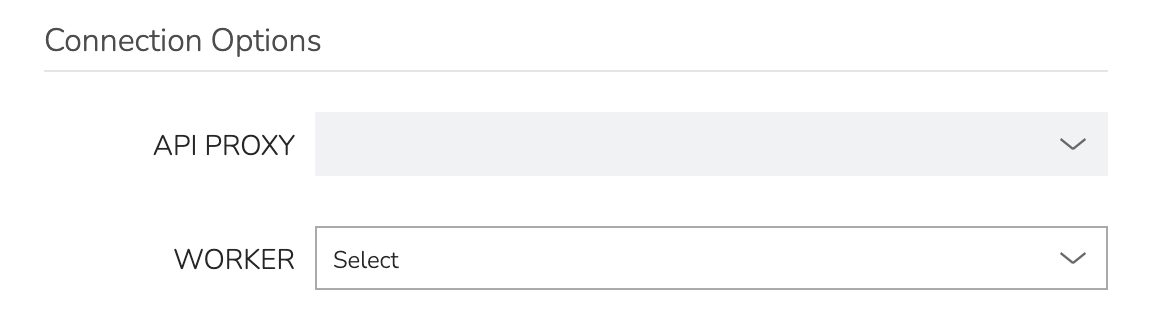

With the worker configured in HPE Morpheus Enterprise, the next step is to update supported Cloud integrations which should be proxied through the worker. Select the desired Cloud from the Clouds List Page (Infrastructure > Clouds) and click EDIT from the chosen Cloud’s Detail Page. Within the Connection Options section, choose a configured worker from the WORKER dropdown menu. Click SAVE CHANGES.

With the API key in hand and configuration complete in the HPE Morpheus Enterprise UI, head back to the worker box. Configure the worker by editing /etc/morpheus/morpheus-worker.rb and updating the following:

worker_url = 'https://gateway_worker_url' # This is the worker URL the Morpheus appliance can resolve and reach on 443

worker['appliance_url'] = 'https://morpheus_appliance_url' # The resolvable URL or IP address of Morpheus appliance which the worker can reach on port 443

worker['apikey'] = 'API KEY FOR THIS GATEWAY' # VDI Gateway API Key generated from Morpheus Appliance VDI Pools > VDI Gateways configuration. For worker only mode, a value is still required but can be any value, including the 'API KEY FOR THIS GATEWAY' default template value

worker['worker_key'] = 'DISTRIBUTED WORKER KEY' # Distributed Worker API Key from Administration > Integrations > Distributed Workers configuration

worker['proxy_address'] = 'http://proxy.address:1234' # For environments in which the worker must go through a proxy to communicate with the Morpheus appliance or other resources, configure the address

worker['no_proxy'] = 'vcenter.example.com,192.168.xx.xx' # A comma-separated list of resources that should be accessed directly and not through the proxy
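Before running a reconfigure, it can help to confirm that the settings needed for distributed worker mode are present. The following is a sketch only: `config_value` and `check_worker_config` are hypothetical helpers, and they assume each setting is written on one line as a single-quoted value, as in the template above.

```shell
# config_value FILE KEY: print the single-quoted value assigned to
# worker['KEY']. Commented-out lines are ignored; the last assignment wins.
config_value() {
  sed -n "s/^[[:space:]]*worker\['$2'][[:space:]]*=[[:space:]]*'\([^']*\)'.*/\1/p" "$1" | tail -n 1
}

# check_worker_config FILE: succeeds when the settings needed for
# distributed worker mode are present and worker_key is not the placeholder.
check_worker_config() {
  local file="$1" key status=0
  # apikey must hold some value even in worker-only mode.
  for key in appliance_url apikey worker_key; do
    if [ -z "$(config_value "$file" "$key")" ]; then
      echo "missing or empty: worker['$key']" >&2
      status=1
    fi
  done
  if [ "$(config_value "$file" worker_key)" = 'DISTRIBUTED WORKER KEY' ]; then
    echo "worker['worker_key'] still holds the template placeholder" >&2
    status=1
  fi
  return "$status"
}
```

One way to use it is `check_worker_config /etc/morpheus/morpheus-worker.rb && sudo morpheus-worker-ctl reconfigure`, so the reconfigure only runs when the check passes.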

Note

By default, the worker_url uses the machine’s hostname, i.e. https://your_machine_name. The default worker_url value can be changed by editing /etc/morpheus/morpheus-worker.rb and changing the value of worker_url. Additional appliance configuration options are available below.

After all configuration options have been set, run the reconfigure command:

sudo morpheus-worker-ctl reconfigure

The worker reconfigure process will install and configure the worker, nginx and guacd services and dependencies.

Tip

If the reconfigure process fails due to a missing dependency, add the repo in which the missing dependency can be found and run sudo morpheus-worker-ctl reconfigure again.

Note

Configuration options can be updated after the initial reconfigure by editing /etc/morpheus/morpheus-worker.rb and running sudo morpheus-worker-ctl reconfigure again.

Once the installation is complete, the Morpheus worker service will automatically start and open a web socket with the specified HPE Morpheus Enterprise appliance. To monitor the startup process, run morpheus-worker-ctl tail to tail the logs of the worker, nginx and guacd services. Individual services can be tailed by specifying the service, for example morpheus-worker-ctl tail worker.

Highly-Available (HA) Deployment

If desired, multiple distributed worker nodes may be associated with the same HPE Morpheus Enterprise appliance to eliminate a single point of failure should a distributed worker node go down. Configure each distributed worker node using the same worker key (the process is described in the prior section) and add redundancy using as many additional worker nodes as needed. When multiple worker nodes use the same worker key, proxy calls always go through the primary worker node when possible. The primary node is the first worker node configured using a specific worker key. When necessary, automatic failover takes place and another active worker node is used. While proxy calls always try to use the primary node when available, HPE Morpheus Enterprise Agent communications can be balanced equally across worker nodes by placing a VIP in front of your distributed workers.
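Because an HA group depends on every node sharing one worker key, a quick consistency check across the nodes' configuration files can catch a mistyped key. This is a sketch under assumptions: `same_worker_key` is a hypothetical helper, the file names in the usage note are placeholders, and the files are assumed to be local copies of each node's /etc/morpheus/morpheus-worker.rb.

```shell
# same_worker_key FILE...: succeeds when every file sets worker['worker_key']
# and all of them hold the same value.
same_worker_key() {
  local first='' key f
  for f in "$@"; do
    # Read the last uncommented, single-quoted worker_key assignment.
    key="$(sed -n "s/^[[:space:]]*worker\['worker_key'][[:space:]]*=[[:space:]]*'\([^']*\)'.*/\1/p" "$f" | tail -n 1)"
    if [ -z "$key" ]; then
      echo "no worker_key found in $f" >&2
      return 1
    fi
    if [ -z "$first" ]; then
      first="$key"
    elif [ "$key" != "$first" ]; then
      echo "worker_key in $f differs from the first file" >&2
      return 1
    fi
  done
  # Fail when no files were given at all.
  [ -n "$first" ]
}
```

For instance, `same_worker_key node1-worker.rb node2-worker.rb` exits non-zero if either copy is missing the key or the two values differ.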