Automation¶

Library > Automation

The Automation section is composed of Tasks and Workflows. Tasks can be scripts added directly, scripts and blueprints from the Library section, recipes, playbooks, Puppet agent installs, or HTTP (API) calls. These Tasks are combined into Workflows, which can be selected to run at provision time or executed on existing Instances via Actions > Run Workflow.

Tasks¶

Overview¶

There are many Task Types available, including scripts added directly, scripts and templates from the Library section, recipes, playbooks, Puppet agent installs, and HTTP (API) calls. Tasks are primarily created for use in Workflows, but a single Task can be executed on an existing Instance via Actions > Run Task.

Role Permissions¶

The User Role Permission ‘Provisioning: Tasks FULL’ is required to create, edit and delete tasks.

Task Types that can execute locally against the HPE Morpheus Enterprise Appliance have an additional Role Permission: Tasks - Script Engines. Script Engine Task Types will be hidden for users without the Tasks - Script Engines role permission.

Common Options¶

When creating a Task, the required and optional inputs will vary significantly by the Task type. However, there are options which are common to Tasks of all types.

Target Options¶

When creating a Task, users can select a target to perform the execution. Some Task types allow for any of the three execution targets listed below and some will limit the user to two or just one. The table in the next section lists the available execution targets for each Task type.

Resource: An HPE Morpheus Enterprise-managed Instance or server is selected to execute the Task

Local: The Task is executed by the HPE Morpheus Enterprise appliance node

Remote: The user specifies a remote box which will execute the Task

Execute Options¶

Continue on Error: When marked, Workflows containing this Task will continue and will remain in a successful state if this Task fails

Retryable: When marked, this Task can be configured to be retried in the event of failure

Retry Count: The maximum number of times the Task will be retried when there is a failure

Retry Delay: The length of time (in seconds) HPE Morpheus Enterprise will wait to retry the Task

Allow Custom Config: When marked, a text area is provided at Task execution time to allow the user to pass extra variables or specify extra configuration. See the next section for an example.
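The interaction between Retry Count and Retry Delay can be pictured with a short Python sketch. It only illustrates the semantics described above and is not HPE Morpheus Enterprise's implementation. The names `run_with_retries`, `task` and `sleep` are hypothetical, and the sketch assumes the first run does not count against the retry budget.

```python
import time
from typing import Callable, Tuple


def run_with_retries(
    task: Callable[[], bool],
    retry_count: int,
    retry_delay: float,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[bool, int]:
    """Run `task` once, then retry up to `retry_count` times on failure.

    `task` returns True on success. Returns (succeeded, attempts_made).
    """
    attempts = 0
    for attempt in range(1 + retry_count):  # initial run plus retries (assumption)
        if attempt > 0:
            sleep(retry_delay)  # wait before each retry, never before the first run
        attempts += 1
        if task():
            return True, attempts
    return False, attempts
```

Under these assumptions, a Task with a Retry Count of 2 that fails twice and then succeeds is reported as successful after three attempts, with two delays in between.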

Source Options¶

Task configuration code may be entered in a number of ways depending on the Task type. Changing the SOURCE type will often update the available fields in the Task modal to accommodate the selected sourcing. Not every Task type supports every sourcing type listed here.

Local: The Task configuration code is written directly in HPE Morpheus Enterprise in a large text area. HPE Morpheus Enterprise includes syntax highlighting for supported Task languages for easier debugging and script writing

Repository: Source the Task configuration code from an integrated Git or GitHub repository. This requires a pre-existing integration with a GitHub or other Git-based repository. See the relevant integration guides for full details on creating such an integration. Specify the path to the appropriate file through the WORKING PATH field. The appropriate branch may also be specified (if a branch other than ‘main’ is required) in the BRANCH/TAG field. To reference a tag from this field, use the syntax refs/tags/<tag-name-here>. Unless otherwise specified, Task config is sourced fresh from the repository each time the Task is invoked, which ensures the latest code is always used

URL: For Task configuration that can be sourced via an outside URL, specify the address in the URL field

Allow Custom Config¶

When “Allow Custom Config” is marked on a Task, the user is shown a text area for custom configuration when the Task is executed manually from the Tasks List Page. If the Task is to be part of an Operational Workflow, mark the same box on the Workflow rather than on the Task to see the text area at execution time. This text area is inside the “Advanced Options” section, which must be expanded in order to reveal the text area. Within the text area, add a JSON map of key-value pairs which can be resolved within your automation scripts. This could be used to pass extra variables that aren’t always needed in the script or for specifying extra configuration.

Example JSON Map:

{"key1": "value1",

"key2": "value2",

"os": "linux",

"foo": "bar"}

When the Task is executed, these extra variables would be resolved where called into the script such as in the following simple BASH script example:

echo "<%=customOptions.os%>"

echo "<%=customOptions.foo%>"

The above example would result in the following output:

linux

bar
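To make the substitution step concrete, the following Python sketch mimics how the placeholders in the example above resolve against the JSON map. It is an illustration only: HPE Morpheus Enterprise uses its own template engine, and the function `resolve_custom_options` and its regular expression are hypothetical helpers that handle nothing beyond simple `customOptions.<key>` lookups.

```python
import json
import re

# Matches simple placeholders such as <%=customOptions.os%> (optional inner spaces).
PLACEHOLDER = re.compile(r"<%=\s*customOptions\.(\w+)\s*%>")


def resolve_custom_options(script: str, custom_config_json: str) -> str:
    """Substitute customOptions placeholders with values from a JSON map."""
    options = json.loads(custom_config_json)
    # A missing key raises KeyError here; real behavior may differ (assumption).
    return PLACEHOLDER.sub(lambda m: str(options[m.group(1)]), script)


custom_config = '{"key1": "value1", "key2": "value2", "os": "linux", "foo": "bar"}'
script = 'echo "<%=customOptions.os%>"\necho "<%=customOptions.foo%>"'
print(resolve_custom_options(script, custom_config))
```

The printed script contains `echo "linux"` and `echo "bar"`, which is why running it produces the two output lines shown above.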

Task Types¶

| Task Type | Task Description | Source Options | Execute Target Options | Configuration Requirements | Role Permissions Requirements |
|---|---|---|---|---|---|
| Ansible | Runs an Ansible playbook. Ansible Integration required | Ansible Repo (Git) | Local, Resource | Existing Ansible Integration | Library: Tasks |
| Ansible Tower | Relays Ansible calls to Ansible Tower | Tower Integration | Local, Remote, Resource | Existing Ansible Tower Integration | Library: Tasks |
| Chef bootstrap | Executes Chef bootstrap and run list. Chef Integration required | Chef Server | Resource | Existing Chef Integration | Library: Tasks |
| Conditional Workflow | Allows the user to set JavaScript logic. If it resolves to true, the “If” Workflow is run and if it resolves to false the “Else” Workflow is run | N/A (JavaScript logic must be locally sourced; Tasks housed within the associated Workflows may have different sourcing options depending on their types) | Local | Existing Operational Workflows | Library: Tasks |
| Email | Send an email from a Workflow | Task Content | Local | SMTP Configured | Library: Tasks |
| Groovy script | Executes Groovy Script locally (on HPE Morpheus Enterprise app node) | Local, Repository, Url | Local | None | Library: Tasks, Tasks - Script Engines |
| HTTP | Executes REST call for targeting external APIs | Local | Local | None | Library: Tasks |
| Javascript | Executes Javascript locally (on HPE Morpheus Enterprise app node) | Local | Local | None | Library: Tasks, Tasks - Script Engines |
| jRuby Script | Executes Ruby script locally (on HPE Morpheus Enterprise app node) | Local, Repository, Url | Local | None | Library: Tasks, Tasks - Script Engines |
| Library Script | Creates a Task from an existing Library Script (Library > Templates > Script Templates) | Library Script | Resource | Existing Library Script | Library: Tasks |
| Library Template | Creates a Task from an existing Library Template (Library > Templates > Spec Templates) | Library Template | Resource | Existing Library Templates | Library: Tasks |
| Nested Workflow | Embeds a Workflow into a Task which allows the Workflow to be nested within other Workflows for situations when common Task sets are frequently used in Workflows | N/A | Local | N/A | Library: Tasks |
| PowerShell Script | Executes PowerShell Script on the Target Resource | Local, Repository, Url | Remote, Resource, Local | None | Library: Tasks |
| Puppet Agent Install | Executes Puppet Agent bootstrap, writes … | Puppet Master | Resource | Existing Puppet Integration | Library: Tasks |
| Python Script | Executes Python Script locally | Local, Repository, Url | Local | virtualenv installed on the appliance | Library: Tasks, Tasks - Script Engines |
| Restart | Restarts target VM/Host/Container and confirms startup status before executing next task in Workflow | System | Resource | None | Library: Tasks |
| Shell Script | Executes Bash script on the target resource | Local, Repository, Url | Local, Remote, Resource | None | Library: Tasks |
| vRealize Orchestrator Workflow | Executes vRO Workflow on the Target Resource | vRO Integration | Local, Resource | Existing vRO Integration | Library: Tasks |
| Write Attributes | Add arbitrary values to the Attributes map of the target resource | N/A | Local | Provide map of values as valid JSON | Library: Tasks |

Task Configuration¶

Ansible Playbook

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

ANSIBLE REPO: Select existing Ansible Integration

GIT REF: Specify tag or branch (optional; blank assumes default)

PLAYBOOK: Name of the playbook to execute; both playbook and playbook.yml formats are supported

TAGS: Enter comma-separated tags to filter executed tasks by (i.e. --tags)

SKIP TAGS: Enter comma-separated tags to run the playbook without matching tagged tasks (i.e. --skip-tags)

Important

Using different Git Refs for multiple Ansible Tasks in the same Workflow is not supported. Git Refs can vary between Workflows, but all Ansible Tasks within a given Workflow must use the same Git Ref.

Ansible Tower Job

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

TOWER INTEGRATION: Select an existing Ansible Tower integration

INVENTORY: Select an existing Inventory. When bootstrapping an Instance, HPE Morpheus Enterprise will add the Instance to the Inventory

GROUP: Enter a group name. When bootstrapping an Instance, HPE Morpheus Enterprise will add the Instance to the Group if it exists. If it does not exist, HPE Morpheus Enterprise will create the Group

JOB TEMPLATE: Select an existing job template to associate with the Task

SCM OVERRIDE: If needed, specify an SCM branch other than that specified on the template

EXECUTE MODE: Select Limit to Instance (template is executed only on Instance provisioned), Limit to Group (template is executed on all hosts in the Group), Run for all (template is executed on all hosts in the Inventory), or Skip Execution (to skip execution of the template on the Instance provisioned)
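The four EXECUTE MODE choices differ only in which hosts the job template is run against. The Python sketch below restates that mapping as described in the list above. It is a model for reasoning about the options and not an Ansible Tower or HPE Morpheus Enterprise API; the function name and the `inventory` structure (group name mapped to host names) are assumptions made for illustration.

```python
from typing import Dict, List


def hosts_for_execute_mode(
    mode: str,
    provisioned_instance: str,
    group: str,
    inventory: Dict[str, List[str]],
) -> List[str]:
    """Return the hosts a job template would run against for a given mode."""
    if mode == "Limit to Instance":
        return [provisioned_instance]
    if mode == "Limit to Group":
        return list(inventory.get(group, []))
    if mode == "Run for all":
        # De-duplicate hosts that belong to more than one group, keeping order.
        seen: Dict[str, None] = {}
        for hosts in inventory.values():
            for host in hosts:
                seen.setdefault(host, None)
        return list(seen)
    if mode == "Skip Execution":
        return []
    raise ValueError(f"unknown execute mode: {mode!r}")
```

For example, with groups `web` and `db` in the Inventory, "Limit to Group" with the group `web` returns only the hosts in `web`, while "Run for all" returns every host in the Inventory once.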

Chef bootstrap

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

CHEF SERVER: Select existing Chef integration

ENVIRONMENT: Populate Chef environment, or leave as _default

RUN LIST: Enter Run List, e.g. role[web]

DATA BAG KEY: Enter data bag key (will be masked upon save)

DATA BAG KEY PATH: Enter data bag key path, e.g. /etc/chef/databag_secret

NODE NAME: Defaults to Instance name, configurable

NODE ATTRIBUTES: Specify attributes inside the {}

Conditional Workflow

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

LABELS: A comma separated list of Labels for organizational purposes. See elsewhere in HPE Morpheus Enterprise docs for additional details on utilizing Labels

CONDITIONAL (JS): JavaScript logic which determines the Operational Workflow which is ultimately run. If it resolves to true, the “If” Workflow is run and if it resolves to false the “Else” Workflow is run

IF OPERATIONAL WORKFLOW: Set the Operational Workflow which should be run if the JavaScript conditional resolves to true

ELSE OPERATIONAL WORKFLOW: Set the Operational Workflow which should be run if the JavaScript conditional resolves to false

Groovy script

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

RESULT TYPE: Single Value, Key/Value Pairs, or JSON

CONTENT: Contents of the Groovy script if not sourcing it from a repository

Email

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

SOURCE: Choose local to draft or paste the email directly into the Task. Choose Repository or URL to bring in a template from a Git repository or another outside source

EMAIL ADDRESS: Email addresses can be entered literally or HPE Morpheus Enterprise automation variables can be injected, such as <%=instance.createdByEmail%>

SUBJECT: The subject line of the email. HPE Morpheus Enterprise automation variables can be injected into the subject field

CONTENT: The body of the email is HTML. HPE Morpheus Enterprise automation variables can be injected into the email body when needed

SKIP WRAPPED EMAIL TEMPLATE: The HPE Morpheus Enterprise-styled email template is ignored and only HTML in the Content field is used

Tip

To whitelabel email sent from Tasks, select SKIP WRAPPED EMAIL TEMPLATE and use an HTML template with your own CSS styling

HTTP (API)

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

RESULT TYPE: Single Value, Key/Value Pairs, or JSON

URL: An HTTP or HTTPS URL as the HTTP Task target

HTTP METHOD: GET (default), POST, PUT, PATCH, HEAD, or DELETE

AUTH USER: Username for username/password authentication

PASSWORD: Password for username/password authentication

BODY: Request Body

HTTP HEADERS: Enter request headers as key/value pairs, for example:

Authorization: Bearer token

Content-Type: application/json

IGNORE SSL ERRORS: Mark when making REST calls to systems without a trusted SSL certificate
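For readers who want to see how the fields above correspond to an actual HTTP request, here is a rough equivalent using Python's standard library. It is a sketch of the request such a Task describes, not of how HPE Morpheus Enterprise issues it. The endpoint URL, the JSON body and the helper names `build_request` and `ssl_context` are hypothetical.

```python
import json
import ssl
import urllib.request


def build_request(url, method="GET", headers=None, body=None):
    """Build (but do not send) a request from HTTP Task style fields."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    return urllib.request.Request(url, data=data, headers=headers or {}, method=method)


def ssl_context(ignore_ssl_errors=False):
    """Return a TLS context; optionally skip certificate verification."""
    context = ssl.create_default_context()
    if ignore_ssl_errors:
        # Mirrors the intent of IGNORE SSL ERRORS; only for endpoints you trust.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    return context


request = build_request(
    "https://api.example.com/v1/hosts",  # hypothetical endpoint
    method="POST",
    headers={"Authorization": "Bearer token", "Content-Type": "application/json"},
    body={"name": "web-01"},
)
# To send it: urllib.request.urlopen(request, context=ssl_context())
```

Nothing is sent until `urlopen` is called, so the request can be inspected first, for instance with `request.get_method()`.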

Javascript

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

RESULT TYPE: Single Value, Key/Value Pairs, or JSON

SCRIPT: Javascript contents to execute

jRuby Script

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

RESULT TYPE: Single Value, Key/Value Pairs, or JSON

CONTENT: Contents of the jRuby script are entered here if the script is not being called in from an outside source

Library Script

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

RESULT TYPE: Single Value, Key/Value Pairs, or JSON

SCRIPT: Search for an existing script in the typeahead field

Library Template

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

TEMPLATE: Search for an existing template in the typeahead field

Nested Workflow

PowerShell Script

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

RESULT TYPE: Single Value, Key/Value Pairs, or JSON

VERSION: Select the version of PowerShell this Task should run in. PowerShell 5 is the default selection; PowerShell 6 or 7 must be installed on the target to select those versions

ELEVATED SHELL: Run script with administrator privileges

IP ADDRESS: IP address of the PowerShell Task target

PORT: WinRM port for PowerShell Task target (5985 default)

USERNAME: Username for PowerShell Task target

PASSWORD: Password for PowerShell Task target

CONTENT: Enter script to execute if not calling the script in from an outside source

Note

Setting the execution target to Local requires PowerShell to be installed on the HPE Morpheus Enterprise appliance box(es). Microsoft documentation contains installation instructions for all major Linux distributions and versions.

Puppet Agent Install

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

PUPPET MASTER: Select Puppet Master from an existing Puppet integration

PUPPET NODE NAME: Enter Puppet node name. Variables supported, e.g. <%= instance.name %>

PUPPET ENVIRONMENT: Enter Puppet environment, e.g. production

Python Script

Important

Python Tasks use virtual environments. For this reason, virtualenv must be installed on your appliances in order to work with Python Tasks. See the information below for more detailed steps to install virtualenv on your HPE Morpheus Enterprise appliance node(s).

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

RESULT TYPE: Single Value, Key/Value Pairs, or JSON

CONTENT: Python script to execute is entered here if not pulled in from an outside repository

COMMAND ARGUMENTS: Optional arguments passed into the Python script. Variables supported, e.g. <%= instance.name %>

ADDITIONAL PACKAGES: Additional packages to be installed after requirements.txt (if detected). Expected format for additional packages: ‘packageName==x.x.x packageName2==x.x.x’. The version must be specified

PYTHON BINARY: Optional binary to override the default Python binary
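Because the ADDITIONAL PACKAGES field expects space-separated entries with pinned versions, it can help to check a value before saving the Task. The Python sketch below is one way to do that. The function `parse_additional_packages` and its regular expression are hypothetical and deliberately stricter than pip itself; they are not part of HPE Morpheus Enterprise.

```python
import re
from typing import List, Tuple

# One "name==version" requirement, e.g. requests==2.31.0
PINNED = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)==([A-Za-z0-9][A-Za-z0-9.+!_-]*)$")


def parse_additional_packages(field_value: str) -> List[Tuple[str, str]]:
    """Split a space-separated ADDITIONAL PACKAGES value into (name, version) pairs."""
    packages = []
    for entry in field_value.split():
        match = PINNED.match(entry)
        if match is None:
            # Unpinned or malformed entries are rejected, since the version is required.
            raise ValueError(f"expected packageName==x.x.x, got {entry!r}")
        packages.append((match.group(1), match.group(2)))
    return packages
```

A value such as `requests==2.31.0 PyYAML==6.0.1` parses into two pairs, while an unpinned entry such as `requests` raises `ValueError`.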

Note

When troubleshooting errors in Python scripts using line numbers reported in HPE Morpheus Enterprise UI (such as in Task execution histories), you must subtract four line numbers to arrive at the correct line in the Python document. This is because HPE Morpheus Enterprise injects four lines at execution time to add HPE Morpheus Enterprise environment variables.
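The line-number adjustment in the note above is simple arithmetic, sketched here as a small helper. The name `source_line` is hypothetical, and the guard assumes the four injected lines sit at the top of the executed script, which the note implies but does not state outright.

```python
INJECTED_LINES = 4  # lines added at execution time, per the note above


def source_line(reported_line: int) -> int:
    """Map a line number reported in the UI back to the original script."""
    if reported_line <= INJECTED_LINES:
        # Assumption: the injected lines are prepended, so these are not your code.
        raise ValueError("reported line falls inside the injected lines")
    return reported_line - INJECTED_LINES
```

An error reported at line 12 therefore points at line 8 of the script as written.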

Enterprise Proxy Considerations

Additional considerations must be made in enterprise proxy environments where Python Tasks are run with additional package download requirements. These additional packages are downloaded using pip and may not obey global HPE Morpheus Enterprise proxy rules. To deal with this, create or edit the pip configuration file at /etc/pip.conf. Your configuration should include something like the following:

[global]

proxy = http://some-proxy-ip.com:8087

For more information, review the pip documentation on using proxy servers.

CentOS 7 / Python 2.7 (RHEL system Python)

With a fresh install of HPE Morpheus Enterprise on a default build of CentOS 7, Python Tasks will not function due to the missing requirement of virtualenv.

If you attempt to run a Python Task, you will get an error similar to the following:

Task Execution Failed on Attempt 1

sudo: /tmp/py-8ae51ebf-749c-4354-b6e4-11ce541afad5/bin/python: command not found

In order to run HPE Morpheus Enterprise Python Tasks in CentOS 7, install virtualenv:

yum install python-virtualenv

If you require python3, you can specify the binary to be used while building the virtual environment. In a default install, do the following:

yum install python3

Then, in your HPE Morpheus Enterprise Python Task, specify the binary in the PYTHON BINARY field as “/bin/python3”. This will build a virtual environment in /tmp using the python3 binary, which is equivalent to making a virtual environment like so:

virtualenv ~/venv -p /bin/python3

If you wish to install additional Python packages into the virtual environment, put them in pip format and space-separated into the ADDITIONAL PACKAGES field on the Python Task. Use the help text below the field to ensure correct formatting.

CentOS 8 and Python

In CentOS 8, Python is not installed by default. There is a platform-python but that should not be used for anything in userland. The error message with a default install of CentOS 8 will be similar to this:

Task Execution Failed on Attempt 1

sudo: /tmp/py-cffc9a8f-c40d-451d-956e-d6e9185ade33/bin/python: command not found

The default virtualenv for CentOS 8 is the python3 variety. For HPE Morpheus Enterprise to use Python Tasks, do the following:

yum install python3-virtualenv

If Python2 is required, do the following:

yum install python2

Then specify /bin/python2 as the PYTHON BINARY in your HPE Morpheus Enterprise Task. This will build a virtualenv in /tmp using the python2 binary, which is equivalent to making a virtualenv like so:

virtualenv ~/venv -p /bin/python2

If you wish to install additional Python packages into the virtual environment, put them in pip format and space-separated into the ADDITIONAL PACKAGES field on the Python Task. Use the help text below the field to ensure correct formatting.

Restart

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

Shell Script

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

RESULT TYPE: Single Value, Key/Value Pairs, or JSON

SUDO: Mark the box to run the script as sudo

CONTENT: Script to execute is entered here if not pulled in from an outside repository

Tip

When the EXECUTE TARGET option is set to “Local” (in other words, the Task is run on the appliance itself), two additional fields are revealed: GIT REPO and GIT REF. Use GIT REPO to set the PWD shell variable (identifies the current working directory) to the locally cached repository (ex. /var/opt/morpheus-node/morpheus-local/repo/git/76fecffdf1fe96516e90becdab9de) and GIT REF to identify the Git branch the Task should be run from if the default (typically main or master) shouldn’t be used. If these options are not set, the working folder will be /opt/morpheus/lib/tomcat/temp which would not allow scripts to reference file paths relative to the repository (if needed).

vRealize Orchestrator Workflow

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

RESULT TYPE: Single Value, Key/Value Pairs, or JSON

vRO INTEGRATION: Select an existing vRO integration

WORKFLOW: Select a vRO workflow from the list synced from the selected integration

PARAMETER BODY (JSON):

Write Attributes

NAME: Name of the Task

CODE: Unique code name for API, CLI, and variable references

ATTRIBUTES: A JSON map of arbitrary values to write to the attributes property of the target resource

Tip

This is often useful for storing values from one phase of a Provisioning Workflow for access in another phase. See the video demo below for a complete example.

There are a number of ways that a JSON payload can be statically drafted within a Write Attributes Task or called into the Task as a result from a prior Task. Consider the following examples:

To pass in a static JSON map with static values, use the format shown below.

{ "my_key1": "my_value1", "my_key2": "my_value2" }

To pass in a static JSON map with dynamic values seeded from prior Task results, ensure the RESULT TYPE value of one or more of the prior Tasks in the Workflow phase is set to “Single Value” and refer to the values within the JSON map as shown in the next example. Note that “taskCode1” and “taskCode2” refer to the CODE field value for the Task whose output you wish to reference.

{ "my_key1": "<%=results.taskCode1%>", "my_key2": "<%=results.taskCode2%>" }

To pass in a dynamic JSON map returned from a prior Task, format your Write Attributes Task as shown in the next example. Ensure that the RESULT TYPE value for the Task returning a JSON map is set to “JSON”. Note that “taskCode” in the example refers to the CODE field value for the Task being referenced. In order for the JSON map to be set correctly and able to be referenced from future Tasks, you must set the “instances” key and call the encodeAsJSON() Groovy method as shown in the example.

{ "instances": <%=results.taskCode?.encodeAsJSON()%> }
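The reason for the explicit encoding step can be shown with a Python analogy: interpolating a map directly into a string uses the language's own display format, which is generally not valid JSON, whereas serializing it first is. This sketch is an analogy only and does not reproduce Groovy or HPE Morpheus Enterprise behavior. `prior_result` stands in for `results.taskCode`, and `is_valid_json` is a hypothetical helper.

```python
import json

prior_result = {"ping": "pong", "flash": "bang"}  # stand-in for results.taskCode

# Plain string interpolation of a map is not valid JSON ...
naive = '{ "instances": %s }' % prior_result
# ... whereas serializing it first yields a payload any JSON parser accepts.
encoded = '{ "instances": %s }' % json.dumps(prior_result)


def is_valid_json(text: str) -> bool:
    """Return True if `text` parses as a JSON document."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False
```

Here `is_valid_json(naive)` is false because the interpolated map uses single quotes, while `is_valid_json(encoded)` is true.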

Task Management¶

Adding Tasks¶

Select Automation from within the Library menu

On the Tasks tab, click the Add button

From the New Task Wizard input a name for the task

Select the type of task from the type dropdown

Input the appropriate configuration details. These will vary significantly based on the selected Task type. More details on each Task type are contained in the preceding sections.

Once done, click SAVE CHANGES

Tip

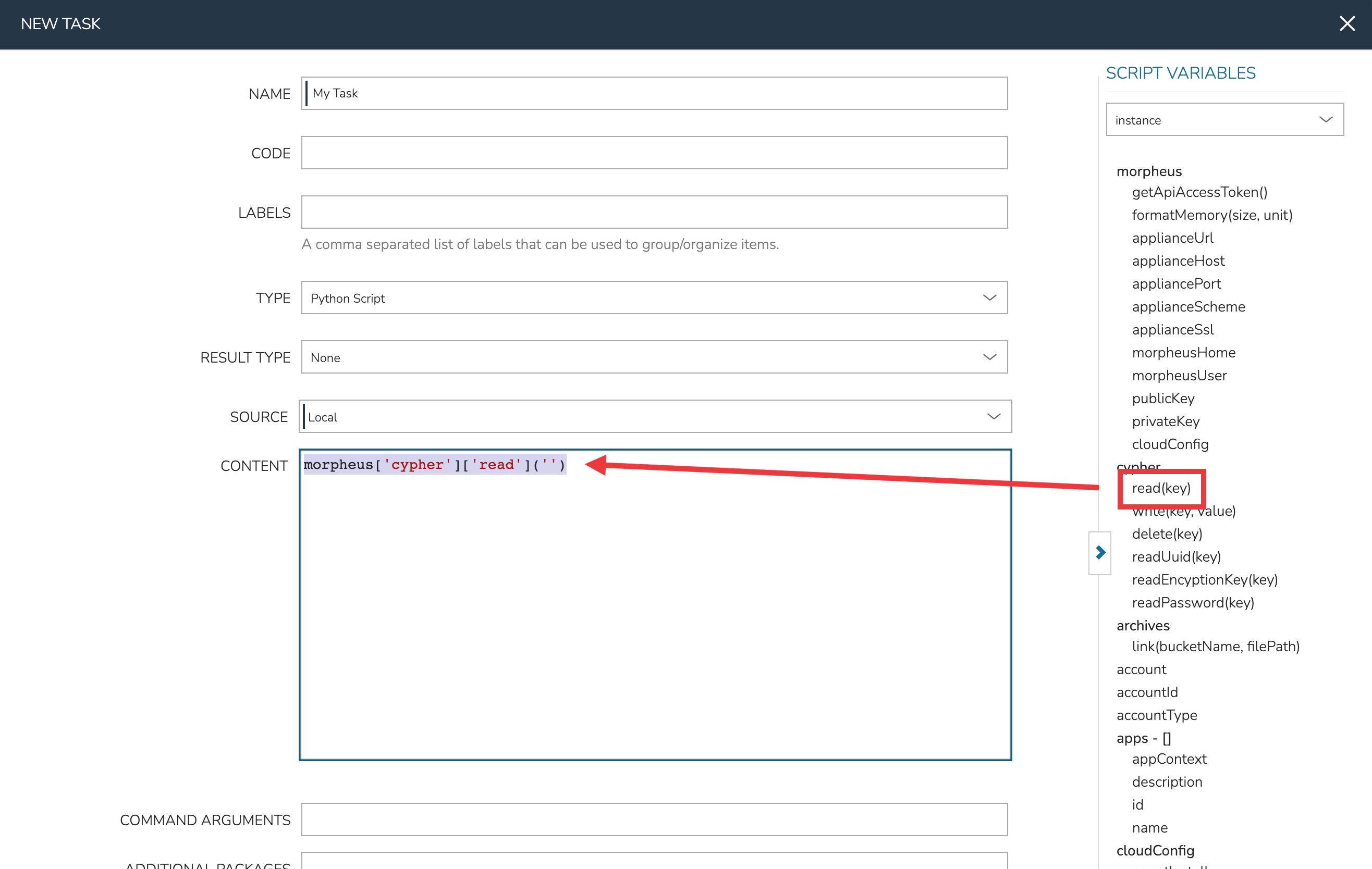

When writing a Task config, it’s often necessary to reference HPE Morpheus Enterprise variables which pertain to the specific Instance the Task is being run against. HPE Morpheus Enterprise includes a pop-out column along the right side of the Add/Edit Task modal which lists available variables. Click and drag the relevant variable into the config area and HPE Morpheus Enterprise will automatically fill in the variable call formatted for the currently chosen Task type. See the screenshot below.

Editing Tasks¶

Select Automation from within the Library menu

Click the pencil icon (✎) on the row of the task you wish to edit

Modify Task as needed

Once done, click SAVE CHANGES

Deleting Tasks¶

Select Automation from within the Library menu

Click the trash icon (🗑) on the row of the Task you wish to delete

Task Results¶

Overview¶

Using Task results, the output from any preceding Tasks within the same Workflow phase is available to be called into additional Tasks. The results are stored on the results variable. Since results are available to all Tasks, we can use results from any or all prior Tasks so long as they are executed within the same provision phase.

In script type tasks, if a RESULT TYPE is set, HPE Morpheus Enterprise will store the output on the results variable. It’s important to understand that the result type indicates the format of the Task output HPE Morpheus Enterprise should expect. HPE Morpheus Enterprise will parse that output into a Groovy map which can be retrieved and further parsed by resolving the results variable. If the RESULT TYPE is incorrectly set, HPE Morpheus Enterprise may not be able to store the Task results correctly. Jump to the section on Script Config Examples to see how script results are processed in various example cases.

Results Types¶

- Single Value

Entire task output is stored in the <%=results.taskCode%> or <%=results["Task Name"]%> variable.

- Key/Value pairs

Expects key=value,key=value output. Entire task output is available with the <%=results.taskCode%> or <%=results["Task Name"]%> variable (output inside []). Individual values are available with <%=results.taskCode.key%> variables.

- JSON

Expects key:value,key:value JSON-formatted output. Entire task output is available with the <%=results.taskCode%> or <%=results["Task Name"]%> variable (output inside []). Individual values are available with <%=results.taskCode.key%> variables.

Important

The entire output of a script is treated as results, not just the last line. Ensure formatting is correct for the appropriate result type. For example, if the RESULT TYPE is JSON and the output is not fully JSON-compatible, the result will not be returned properly.

Important

Task results are not supported for Library Script task types
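The three result types can be thought of as three ways of interpreting the same raw output. The Python sketch below models that interpretation to show why the entire output must match the chosen type. It is not the parser HPE Morpheus Enterprise uses: `parse_task_output` is a hypothetical function, and details such as whitespace trimming are assumptions.

```python
import json
from typing import Any, Dict, Union


def parse_task_output(output: str, result_type: str) -> Union[str, Dict[str, Any]]:
    """Interpret raw Task output according to its RESULT TYPE."""
    text = output.strip()  # assumption: surrounding whitespace is ignored
    if result_type == "Single Value":
        return text
    if result_type == "Key/Value pairs":
        pairs = (item.split("=", 1) for item in text.split(",") if item)
        return {key.strip(): value.strip() for key, value in pairs}
    if result_type == "JSON":
        # The whole output must be one JSON document; stray lines make this fail.
        return json.loads(text)
    raise ValueError(f"unknown result type: {result_type!r}")
```

With this model, `flash=bang,ping=pong` becomes a two-entry map under "Key/Value pairs", whereas a script that prints a log line before its JSON document fails to parse under "JSON".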

Script Config Examples¶

- Single Value using Task Code

- Source Task Config

NAME: Var Code (single)

CODE: singleExample

RESULT TYPE: Single Value

SCRIPT:

echo "string value"

- Source Task Output

string value

- Results Task Config (using task code in variable)

NAME: N/A

CODE: N/A

RESULT TYPE: N/A

SCRIPT:

echo "single: <%=results.singleExample%>"

- Results Task Output

single: string value

- Single Value using Task Name

- Source Task Config

NAME: Var Code

CODE: none

RESULT TYPE: Single Value

SCRIPT:

echo "string value"

- Source Task Output

string value

- Results Task Config (using task name in variable)

SCRIPT:

echo "task name: <%=results["Var Code"]%>"

- Results Task Output

task name: string value

- Key/Value Pairs

- Source Task Config

NAME: Var Code (keyval)

CODE: keyvalExample

RESULT TYPE: Key/Value pairs

SCRIPT:

echo "flash=bang,ping=pong"

- Source Task Output

flash=bang,ping=pong

- Results Task for all results

SCRIPT:

echo "keyval: <%=results.keyvalExample%>"

- Results Task Output

keyval: [flash:bang, ping:pong]

- Results Task for a single value

SCRIPT:

echo "keyval value: <%=results.keyvalExample.flash%>"

- Results Task Output

keyval value: bang

- JSON

- Source Task Config

NAME: Var Code (json)

CODE: jsonExample

RESULT TYPE: JSON

SCRIPT:

echo "{\"ping\":\"pong\",\"flash\":\"bang\"}"

- Source Task Output

{"ping":"pong","flash":"bang"}

- Results Task for all results

SCRIPT:

echo "json: <%=results.jsonExample%>"

- Results Task Output

json: [ping:pong, flash:bang]

- Results Task for a single value

SCRIPT:

echo "json value: <%=results.jsonExample.ping%>"

- Results Task Output

json value: pong

- Multiple Task Results

- Results Task Script

echo "single: <%=results.singleExample%>"

echo "task name: <%=results["Var Code"]%>"

echo "keyval: <%=results.keyvalExample%>"

echo "keyval value: <%=results.keyvalExample.flash%>"

echo "json: <%=results.jsonExample%>"

echo "json value: <%=results.jsonExample.ping%>"

- Results Task Output

single: string value

task name: string value

keyval: [flash:bang, ping:pong]

keyval value: bang

json: [ping:pong, flash:bang]

json value: pong
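The JSON example above escapes its quotes by hand inside `echo`. When the source Task is a Python script, one option is to let a JSON library produce the output instead. The sketch below assumes a Python Task whose RESULT TYPE is set to JSON; `emit_result` is a hypothetical helper name.

```python
import json


def emit_result(payload: dict) -> str:
    """Serialize `payload` as compact, single-line JSON."""
    return json.dumps(payload, separators=(",", ":"))


# Print exactly one JSON document and nothing else, since the
# entire output of the script is treated as the result.
print(emit_result({"ping": "pong", "flash": "bang"}))
```

This prints `{"ping":"pong","flash":"bang"}`, the same output as the hand-escaped `echo` in the JSON example.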

Workflow Config¶

Add one or more Tasks with a RESULT TYPE configured to a Workflow, and their results will be available to all Tasks in the same phase of the Workflow through the results variable (for example, <%=results.taskCode%>) during Workflow execution.

Task Results are only available to tasks in the same workflow phase

Task Results are only available during workflow execution

Workflows¶

Workflows are groups of Tasks, which are described in detail in the preceding section. Operational Workflows can be run on-demand against an existing Instance or server from the Actions menu on the Instance or server detail page. Additionally, they can be scheduled to run on a recurring basis through Morpheus Jobs (Provisioning > Jobs).

Provisioning Workflows are associated with Instances at provision time (in the Automation tab of the Add Instance wizard) or after provisioning through the Actions menu on the Instance detail page. Provisioning Workflows assign Tasks to various stages of the Instance lifecycle, such as Provision, Post Provision, and Teardown. When the Instance reaches a given stage, the appropriate Tasks are run. Task results and output can be viewed from the History tab of the Instance or server detail page.

Provisioning Workflow Execution Phases¶

Phase |

Description |

Usage Example |

Notes |

|---|---|---|---|

Configuration |

Tasks are run prior to initial calls to the specified cloud API to initiate provisioning |

Call to an external platform to dynamically generate a hostname prior to kicking off provisioning or dynamically altering configuration of a Catalog Item prior to provisioning |

|

Price |

Price Phase Tasks are only invoked when the Workflow is tied to a Layout. Like the Configuration Phase, these Tasks are run prior to any calls made to the target Cloud API and allow pricing data to be overridden for the Workload being provisioned. A “spec” variable containing Instance config is passed into the Task and a specific return payload is expected in order to work properly. Any other pricing (such as on the Service Plan) is overridden. See the section below for a detailed example of this Phase being used. |

An MSP customer calling out to a custom pricing API to deliver Instance pricing to their own customers. |

See the section below on Price Phase implementation for a detailed setup example. |

Pre Provision |

For VMs, Tasks are run after the VM is running and prior to any Tasks in the Provision phase. For containers, Tasks in this phase are run on the Docker host and prior to |

Prepare a Docker host to run containers |

Pre Provision can be used for a Blueprint so it is added before a script which is set at the Provision phase executes. Pre Provision for scripts is mainly for Docker as you can execute on the host before the container is running. |

Provision |

Like pre-provision, Tasks for VMs are run after the VM is running. For containers, these Tasks are run on the containers once they are running on the host. For many users, this is the most commonly-used phase. |

Join the server to a domain |

Tasks included with in the Provision phase are considered to be vital to the health of the Instance. If a Task in the Provision phase fails, the Workflow will fail and the Instance provisioning will also fail. Tasks not considered to be vital to the existence of the Instance should go in the Post Provision phase where their failure will not constitute failure of the Instance. |

Post Provision |

Tasks are run after the entire provisioning process has completed |

Disable UAC or Windows Firewall on a Windows box or join Active Directory |

When adding a node to an Instance, Tasks in this phase will be run on all nodes in the Instance after the new node is provisioned. This is because Post Provision operations may need to affect all nodes, such as when joining a new node to a cluster. Tasks in Pre Provision and Provision phases would only be run on the new node in this scenario. |

Start Service |

Tasks in this phase are intended to start the service associated with the Instance type. |

Include a script to start the service associated with the Instance (such as MySQL) which will execute when the Start Service action is selected from the Instance detail page |

Start services is manually run from the Instance detail page and is designed to refer to the service the Instance provides. |

Stop Service |

Tasks in this phase intended to stop the service associated with the Instance type. |

Include a script to stop the service associated with the Instance (such as MySQL) which will execute when the Stop Service action is selected from the Instance detail page |

The Stop Service action is run manually from the Instance detail page and refers to the service the Instance provides. |

Pre Deploy |

Tasks in this phase are run when a new deploy is triggered from the Deploy tab of the Instance detail page, prior to the deploy taking place. |

Extract files from a deploy folder and move them to their final positions prior to deploy |

Deployments are manually triggered from the Instance detail page and are designed to refer to deployment of services, like a website or database. |

Deploy |

Tasks in this phase are run when a new deploy is triggered from the Deploy tab of the Instance detail page, after the deploy has completed |

Update configuration files or inject connection details from the environment at completion of the deploy process |

Deployments are manually triggered from the Instance detail page and are designed to refer to deployment of services, like a website or database. |

Reconfigure |

Tasks in this phase are run when the reconfigure action is made against an Instance or host |

Rescan or restart the Instance after a disk is added |

|

Teardown |

Tasks are run during VM or container destroy |

Remove Active Directory objects prior to tearing down the Instance |

|

Scale Down |

Tasks are run when a node is removed from an Instance. This does not apply to Clusters when a worker node is removed. |

||

Shutdown |

Tasks are run immediately before the target is shut down |

Send an update on Instance power state to a CMDB |

|

Startup |

Tasks are run immediately before the target is started |

Send an update on Instance power state to a CMDB |
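The node-targeting and failure rules described for the Provision and Post Provision phases can be summarized in a short Python sketch. This only models the behavior documented above and is not product code; the function names and the phase strings ("preProvision", "provision", "postProvision") are hypothetical labels chosen for the illustration.

```python
# Illustrative model of the documented phase rules; names are hypothetical.

def nodes_for_phase(phase, existing_nodes, new_node):
    """Return the nodes a phase's Tasks run on when a node is added to an Instance."""
    if phase == "postProvision":
        # Post Provision Tasks run on all nodes after the new node is provisioned.
        return list(existing_nodes) + [new_node]
    if phase in ("preProvision", "provision"):
        # Pre Provision and Provision Tasks run only on the new node.
        return [new_node]
    raise ValueError(f"phase not covered by this sketch: {phase}")


def provisioning_fails(phase, task_failed):
    """A failed Provision Task fails the Instance; a failed Post Provision Task does not."""
    if phase not in ("provision", "postProvision"):
        raise ValueError(f"phase not covered by this sketch: {phase}")
    return task_failed and phase == "provision"


print(nodes_for_phase("postProvision", ["node-1", "node-2"], "node-3"))
print(provisioning_fails("postProvision", True))
```

The sketch makes the practical consequence visible: a cluster-join script belongs in Post Provision because it must reach every node, while a Task that must succeed for the Instance to be usable belongs in Provision.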

Add Workflow¶

Select the Library link in the navigation bar

Select Automation from the sub-navigation menu

Click the Workflows tab to show the Workflows tab panel

Click the + Add dropdown and select a Workflow type (Operational or Provisioning, see the section above for more on Workflow type differences)

In the New Workflow Wizard, input a name for the Workflow

Optionally input a description and a target platform

Add Tasks and Inputs using the typeahead fields. For Provisioning Workflows, Tasks must be added to the appropriate phases

If multiple tasks are added to the same execution phase, their execution order can be changed by selecting the grip icon and dragging the task to the desired execution order

For multi-Tenant environments, select Public or Private visibility for the Workflow

For Operational Workflows, optionally mark “Allow Custom Config” from the Advanced Options section if needed. See the next section for more on this selection

Click the SAVE CHANGES button to save

Note

When setting Workflow visibility to Public in a multi-Tenant environment, Tenants will be able to see the Workflow and also execute it directly from the Workflows list (if it’s an Operational Workflow). They will not be able to edit or delete the Workflow.

Allow Custom Config¶

When “Allow Custom Config” is marked on Operational Workflows, the user is shown a text area for custom configuration at execution time. This text area is inside the “Advanced Options” section, which must be expanded in order to reveal the text area. Within the text area, add a JSON map of key-value pairs which can be resolved within your automation scripts. This could be used to pass extra variables that aren’t always needed in the script or for specifying extra configuration.

Example JSON Map:

{
  "key1": "value1",
  "key2": "value2",
  "os": "linux",
  "foo": "bar"
}

When the Workflow is executed, these extra variables would be resolved where called into the script such as in the following simple BASH script example:

echo "<%=customOptions.os%>"

echo "<%=customOptions.foo%>"

The above example would result in the following output:

linux

bar
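To make the substitution step concrete, the following Python sketch mimics how a JSON map of custom options could be resolved into `<%=customOptions.key%>` placeholders. It is a stand-in written for illustration and does not reproduce the product's template engine; the function name `resolve_custom_options` and the choice to leave unknown keys untouched are assumptions.

```python
import json
import re

# Hypothetical stand-in for the variable resolution step (not the real engine).
PLACEHOLDER = re.compile(r"<%=\s*customOptions\.(\w+)\s*%>")


def resolve_custom_options(script, custom_config_json):
    """Replace <%=customOptions.key%> placeholders with values from a JSON map."""
    options = json.loads(custom_config_json)
    # Assumption: unknown keys are left as-is so that missing values are easy to spot.
    return PLACEHOLDER.sub(
        lambda match: str(options.get(match.group(1), match.group(0))), script
    )


config = '{"key1": "value1", "key2": "value2", "os": "linux", "foo": "bar"}'
script = 'echo "<%=customOptions.os%>"\necho "<%=customOptions.foo%>"'
print(resolve_custom_options(script, config))
```

Running the sketch against the example map and script yields the two `echo` commands with `linux` and `bar` filled in, which matches the output shown above once the script executes.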

Retrying Workflows¶

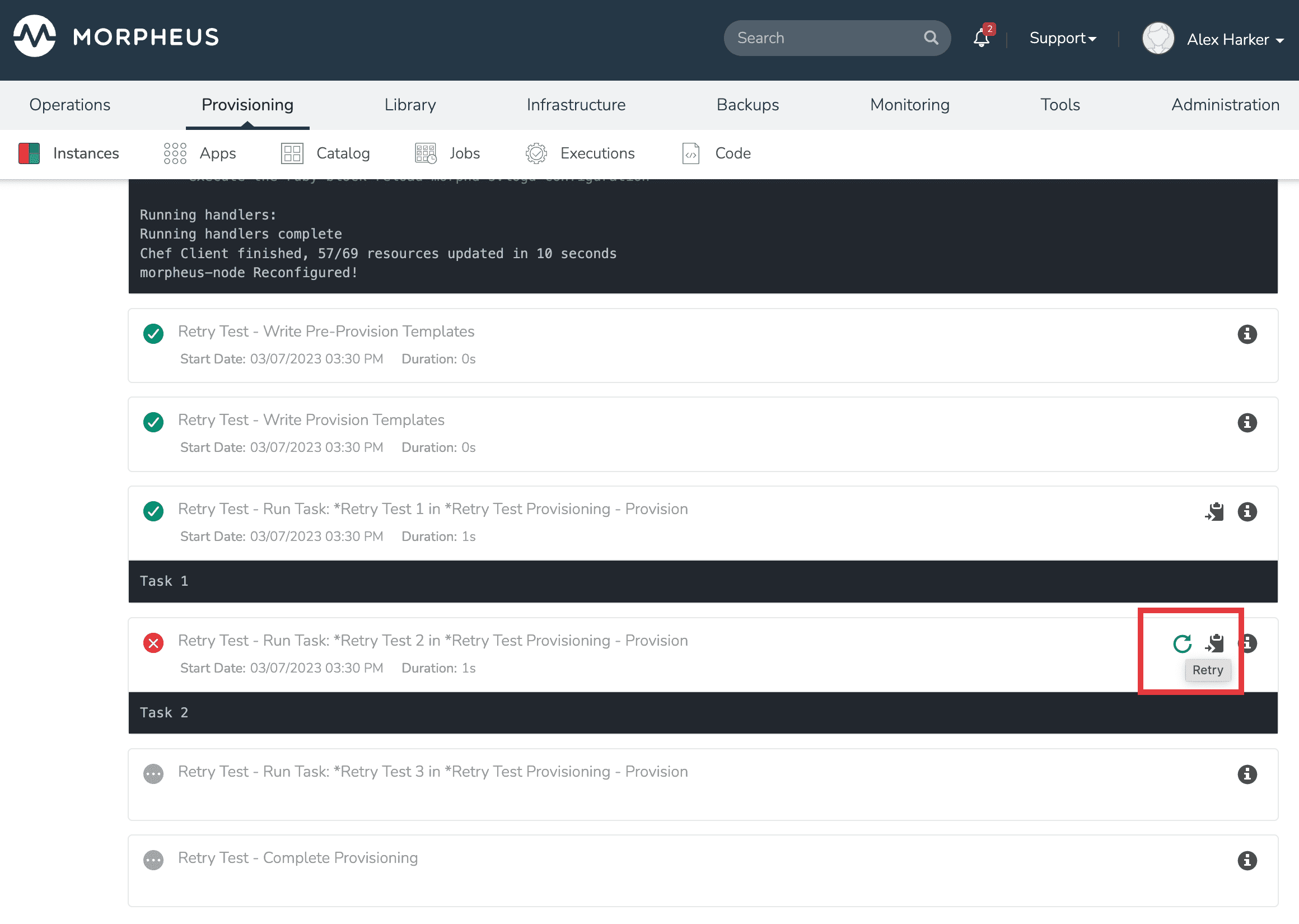

When a Workflow fails, HPE Morpheus Enterprise allows users to retry from the failed Task. Access the Workflow execution from the executions list page (Provisioning > Executions), from the Executions tab of the Workflow detail page (Library > Automation > Workflows > Selected Workflow), or from the History tab on the Instance detail page (Provisioning > Instances > Selected Instance). From the execution, select the Retry button which looks like a clockwise circular arrow and is highlighted in the screenshot below. This can be very useful as it allows you to resume what could potentially be a very long running Workflow from a point of failure without needing to start from the beginning. Similarly, if a provisioned Instance is in a failed state due to a failure in an attached Workflow (such as a failed Task in the Provision phase of an attached Provisioning Workflow), the user can opt to resume the Tasks from the failure point after making a correction and restore the Instance to a successfully-provisioned state.

Retry Workflow Tasks Video Demo

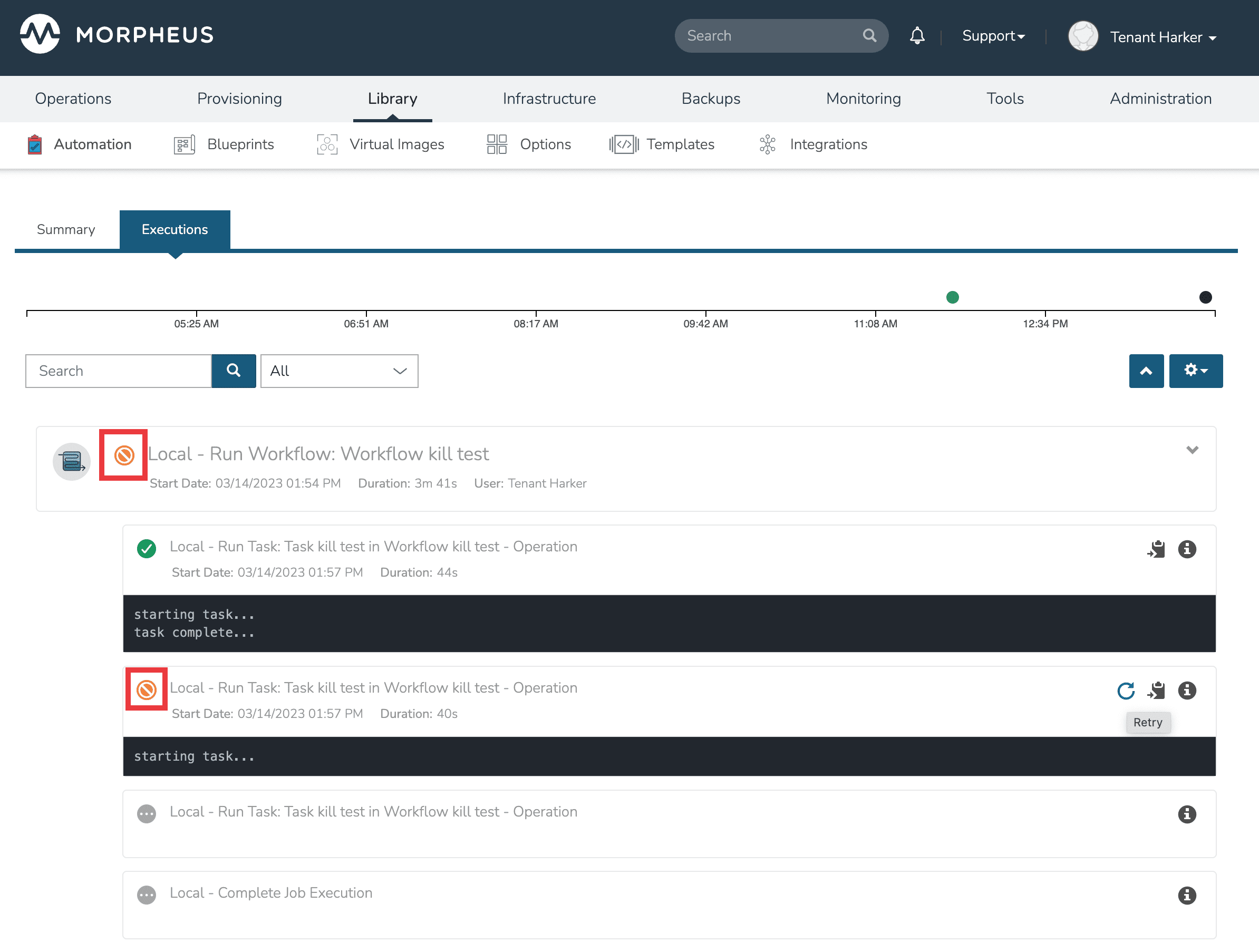
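The idea of resuming from the point of failure can be sketched in a few lines of Python. This is a simplified model, not the product's execution engine: the `run_workflow` function, the task names, and the `dns_ready` flag are all hypothetical.

```python
def run_workflow(tasks, start=0):
    """Run tasks in order from index `start`; return the index of the first failure, or None."""
    for index in range(start, len(tasks)):
        name, action = tasks[index]
        try:
            action()
        except Exception as error:
            print(f"Task '{name}' failed: {error}")
            return index
    return None


calls = []
dns_ready = {"value": False}  # hypothetical external condition that causes the failure


def install():
    calls.append("install")


def configure():
    calls.append("configure")
    if not dns_ready["value"]:
        raise RuntimeError("DNS record missing")


def verify():
    calls.append("verify")


tasks = [("install", install), ("configure", configure), ("verify", verify)]

failed_at = run_workflow(tasks)                 # stops at "configure"
dns_ready["value"] = True                       # the operator corrects the problem
result = run_workflow(tasks, start=failed_at)   # resumes without re-running "install"
print(calls)
```

Because the second run starts at the failed index, the long-running `install` step is executed only once, which is the benefit the Retry button provides for lengthy Workflows.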

Cancelling Workflow Tasks¶

When a Workflow is running, HPE Morpheus Enterprise offers the capability of cancelling a Task and stopping any subsequent Tasks from starting. When viewed later in History or Executions, this leaves the Task and Workflow in a cancelled state. This is useful if you have a very long-running Task that you know will fail and wish to cancel or if you want to prevent a “retryable” Task from running again.

To cancel a Workflow, open the execution. The running Task displays a cancel button; click it to interrupt the Workflow. Once cancelled, the Workflow and Task are shown in the UI in a cancelled state. At this point the cancel button becomes a retry button, and the Task can be resumed if desired.

Note

Cancelling a Task doesn’t actually interrupt the process already running on the workloads themselves. It simply interrupts the Workflow and stops it from continuing. Behind the scenes, HPE Morpheus Enterprise allows the running process to complete or time out rather than risk corrupting data with a non-graceful interrupt.

Price Phase Task Utilization¶

Price Phase Tasks Video Demo

Price Phase Tasks allow computed pricing for any workload in any Cloud (even public Clouds) to be overridden based on custom logic designed by the user. The variable “spec”, which represents the Instance configuration, is fed into the Task. The Task can be designed to use the Instance config data and compute an appropriate price for the Instance. HPE Morpheus Enterprise expects a return payload in the format below for the price override to work correctly. If used, pricing computed via Task replaces any other costing data which would have been applied to the workload (such as pricing based on the Service Plan). The user will see price estimates based on the Price Phase Task in the Instance provisioning wizard where the Service Plan pricing would otherwise be shown. Additionally, since Workflows which invoke Price Phase Tasks are tied to the Layout, the user can see different pricing depending on which Instance Type Layout is selected.

Note

Price Phase Tasks are only invoked if the Workflow is tied to a Layout.

The return payload should be a JSON array of “priceData” objects. priceData objects should contain values for each of the keys in the table below:

| Key | Description | Data Type | Possible Values |
|---|---|---|---|
| incurCharges | Indicates the Instance state when this charge should be applied | String | Running: Charge is incurred while the workload is in a running state; Stopped: Charge is incurred while the workload is in a stopped or shutdown state; Always: This charge is always applied. Some charges may apply simultaneously, for example, “Always” and “Running” states will apply while the workload is running. |
| currency | Indicates the currency in which the charge will be applied | String | Enter any three-letter currency code which HPE Morpheus Enterprise supports for its pricing, such as “USD”, “CAD”, or “GBP” |
| unit | Indicates the time interval at which the charge is applied | String | Enter “minute”, “hour”, “day”, “month”, or “year” |
| cost | Indicates the amount applied as cost for each configured time unit interval that passes. This is the cost to you, not the price with markup which the customer would see. | Number | A numerical amount such as “3.00” or “34.23” |
| price | Indicates the amount applied as price for each configured time unit interval that passes. This is the price to the customer with any built-in markup you need to apply. | Number | A numerical amount such as “3.00” or “34.23” |

A number of different Task types could be used in this phase. As long as the Task returns the required JSON array, it will work correctly. Below is an example using a Groovy Task. This example simply outputs a static payload; in a real-world scenario you’d likely use Task logic to output a dynamic array based on the Instance configuration.

def rtn = [
  priceData: [
    [
      incurCharges: 'always',
      currency: 'USD',
      unit: 'hour',
      cost: 2.0,
      price: 2.0
    ],
    [
      incurCharges: 'running',
      currency: 'USD',
      unit: 'hour',
      cost: 3.0,
      price: 3.0
    ],
    [
      incurCharges: 'stopped',
      currency: 'USD',
      unit: 'hour',
      cost: 1.0,
      price: 1.0
    ]
  ]
]
return rtn
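As a contrast to the static Groovy payload, here is a minimal Python sketch of how a price could be derived from the Instance configuration. The field names read from `spec` ("cores", "memoryGb"), the hourly rates, and the markup factor are all hypothetical; inspect the actual “spec” variable passed to your Task to find the real structure. How the payload is handed back also depends on the Task type, so the sketch simply prints the JSON.

```python
import json

# Hypothetical hourly rates and markup; real values come from your own pricing rules.
RATE_PER_CORE = 0.02
RATE_PER_GB_MEMORY = 0.01
MARKUP = 1.25


def build_price_data(spec):
    """Build a price payload shaped like the Groovy example from an Instance config.

    The keys "cores" and "memoryGb" are assumptions made for this sketch.
    """
    running_cost = round(
        spec["cores"] * RATE_PER_CORE + spec["memoryGb"] * RATE_PER_GB_MEMORY, 4
    )
    return {
        "priceData": [
            {
                "incurCharges": "running",
                "currency": "USD",
                "unit": "hour",
                "cost": running_cost,                       # cost to the provider
                "price": round(running_cost * MARKUP, 4),   # price shown to the customer
            }
        ]
    }


print(json.dumps(build_price_data({"cores": 4, "memoryGb": 16}), indent=2))
```

Keeping cost and price as separate computed values mirrors the table above, where cost is the provider's expense and price includes any markup.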

Nesting Workflows¶

HPE Morpheus Enterprise allows Workflows to be nested, which simplifies Workflow creation in environments where many Workflows differ only slightly or are made up of common pieces. Nestable Workflows are created like any other Operational Workflow. Once the Workflow is saved, it can be embedded into a special Task type called “Nested Workflow.” A Nested Workflow-type Task simply references an Operational Workflow which may need to be used within other Workflows. Once Nested Workflow Tasks are created, they can be used in any new Operational or Provisioning Workflows created thereafter, or added to existing Workflows. For more on creating Tasks, see HPE Morpheus Enterprise Task documentation.

Note

Results from prior Tasks are still accessed using the same syntax even when a prior Task is embedded in a Nested Workflow. Additional syntax to reference the Nested Workflow Task or the Workflow itself is not needed. See HPE Morpheus Enterprise Task documentation for more on chaining Task results.

Nested Workflows Video Demo

Edit Workflow¶

Select the Library link in the navigation bar.

Select Automation from the sub-navigation menu.

Click the Workflows tab to show the workflows tab panel.

Click the Edit icon on the row of the workflow you wish to edit.

Modify information as needed.

Click the Save Changes button to save.

Delete Workflow¶

Select the Library link in the navigation bar.

Select Automation from the sub-navigation menu.

Click the Workflows tab to show the workflows tab panel.

Click the Delete icon on the row of the workflow you wish to delete.

Scale Thresholds¶

Scale Thresholds are pre-configured settings for auto-scaling Instances. When adding auto-scaling to an instance, existing Scale Thresholds can be selected to determine auto-scaling rules.

Creating Scale Thresholds¶

Navigate to Library > Automation > Scale Thresholds

Select + ADD

Populate the following:

- NAME

Name of the Scale Threshold

- AUTO UPSCALE

Enable to automatically upscale per Scale Threshold specifications

- AUTO DOWNSCALE

Enable to automatically downscale per Scale Threshold specifications

- MIN COUNT

Minimum node count for Instance. Auto-scaling will not downscale below MIN COUNT, and will auto upscale if the MIN COUNT is not met.

- MAX COUNT

Maximum node count for Instance. Auto-scaling will not upscale past MAX COUNT, and will auto downscale if MAX COUNT is exceeded.

- ENABLE MEMORY THRESHOLD

Check to set auto-scaling by specified memory utilization threshold (%)

- MIN MEMORY

Enter MIN MEMORY % for triggering downscaling.

- MAX MEMORY

Enter MAX MEMORY % for triggering upscaling.

- ENABLE DISK THRESHOLD

Check to set auto-scaling by specified disk utilization threshold (%)

- MIN DISK

Enter MIN DISK % for triggering downscaling.

- MAX DISK

Enter MAX DISK % for triggering upscaling.

- ENABLE CPU THRESHOLD

Check to set auto-scaling by specified overall CPU utilization threshold (%)

- MIN CPU

Enter MIN CPU % for triggering downscaling.

- MAX CPU

Enter MAX CPU % for triggering upscaling.

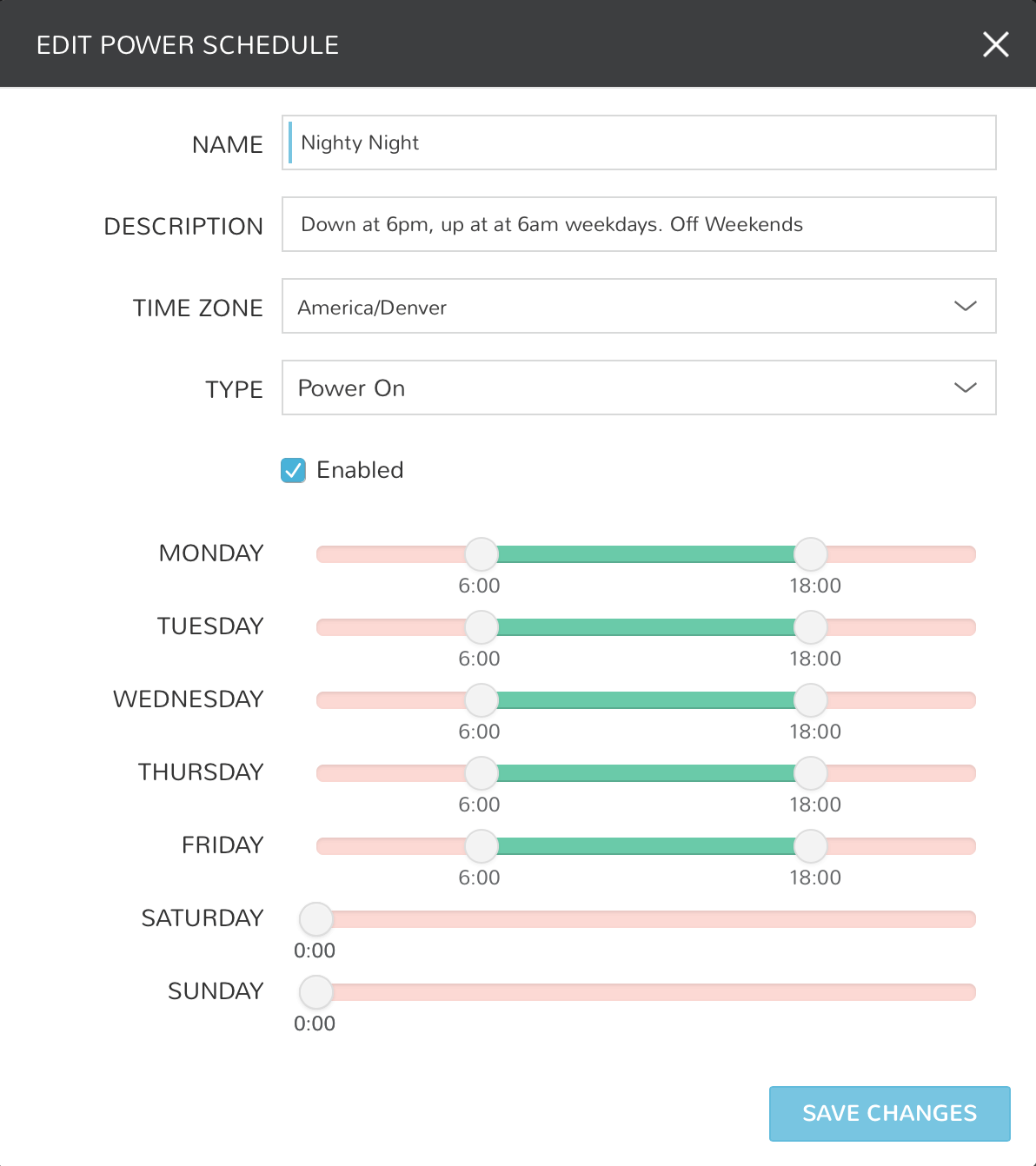
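The interaction between the count limits and a utilization threshold can be sketched as a small decision function. This is a simplified Python model for a single metric, not the product's scaling logic. The function name, the strict comparisons, the order in which the rules are checked, and the way the AUTO UPSCALE and AUTO DOWNSCALE flags gate each rule are assumptions, and the sketch does not cover how several enabled thresholds are combined.

```python
def scale_decision(node_count, usage_pct, min_count, max_count, min_pct, max_pct,
                   auto_upscale=True, auto_downscale=True):
    """Return "up", "down", or "none" for one utilization metric (memory, disk, or CPU)."""
    # Count limits are enforced first (assumption): upscale if MIN COUNT is not met,
    # downscale if MAX COUNT is exceeded.
    if auto_upscale and node_count < min_count:
        return "up"
    if auto_downscale and node_count > max_count:
        return "down"
    # Utilization above the MAX % triggers upscaling, but never past MAX COUNT.
    if auto_upscale and usage_pct > max_pct and node_count < max_count:
        return "up"
    # Utilization below the MIN % triggers downscaling, but never below MIN COUNT.
    if auto_downscale and usage_pct < min_pct and node_count > min_count:
        return "down"
    return "none"


# Example: 3 nodes at 90% CPU with limits of 2-5 nodes and thresholds of 20%-80%.
print(scale_decision(3, 90, min_count=2, max_count=5, min_pct=20, max_pct=80))
```

With these example values the decision is to upscale; the same load at five nodes would produce no action because MAX COUNT has been reached.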

Power Scheduling¶

Set weekly schedules for shutdown and startup times for Instances and VMs, apply Power Schedules to Instances pre or post-provisioning, apply Power Schedule policies on Groups or Clouds, or use Guidance to automatically recommend and apply optimized Power Schedules.

Create Power Schedules¶

Navigate to Library > Automation > Power Scheduling

Select + ADD

Configure the following options:

- NAME

Name of the Power Schedule

- DESCRIPTION

Description for the Power Schedule

- TIME ZONE

Time Zone the Power Schedule times correlate to.

- TYPE

- Power On

Power Up and then Down at scheduled times

- Power Off

Power Down then Up at scheduled times

- Enabled

Check for Power Schedule to be Active. Uncheck to disable Power Schedule.

- DAYS

Slide the start and end time controls for each day to configure each day’s schedule. Green sections indicate Power On, red sections indicate Power Off. Times indicated apply to the selected Time Zone. The sliders can be used to set time in 15-minute steps; for single-minute granularity, click the pencil icon and enter a specific time down to the minute.

Select SAVE CHANGES

Tip

To view the Instances a power schedule is currently set on, select the name of a Power Schedule to go to the Power Schedule Detail Page.

Add Power Schedule to Instance¶

Navigate to Provisioning > Instances

Select an Instance

Select EDIT

In the POWER SCHEDULE dropdown, select a Power Schedule.

Select SAVE CHANGES

Add Power Schedule to Virtual Machine¶

Navigate to Infrastructure > Compute > Virtual Machines

Select a Virtual Machine

Select EDIT

Expand the Advanced Options section

In the POWER SCHEDULE dropdown, select a Power Schedule.

Select SAVE CHANGES

Add Power Schedule Policy¶

Note

Power Schedule Policies apply to Instances created after the Policy is enabled.

Navigate to Administration > Policies

Select + ADD

Select TYPE Power Schedule

Configure the Power Schedule Policy:

- NAME

Name of the Policy

- DESCRIPTION

Add details about your Policy for reference in the Policies tab.

- Enabled

Policies can be edited and disabled or enabled at any time. Disabling a Power Schedule Policy will prevent the Power Schedule from running on the Instances within the Policy’s scope until it is re-enabled.

- ENFORCEMENT TYPE

User Configurable: Power Schedule choice is editable by User during provisioning.

Fixed Schedule: User cannot change Power Schedule setting during provisioning.

- POWER SCHEDULE

Select the Power Schedule to use in the Policy. Power Schedules can be added in Library > Automation > Power Scheduling.

- SCOPE

- Global

Applies to all Instances created while the Policy is enabled

- Group

Applies to all Instances created in or moved into specified Group while the Policy is enabled

- Cloud

Applies to all Instances created in specified Cloud while the Policy is enabled

- User

Applies to all Instances created by specified User while the Policy is enabled

- Role

Applies to all Instances created by Users with specified Role while the Policy is enabled

- Permissions

- TENANTS

Leave blank to apply to all Tenants, or search for and select Tenants to enforce the Policy on specific Tenants.

Select SAVE CHANGES

Execute Scheduling¶

Execute Scheduling creates time schedules for Jobs, including Task, Workflow and Backup Jobs. Jobs, which are discussed in greater detail in another section of HPE Morpheus Enterprise docs, combine either a Task or Workflow with an Execute Schedule to run the selected Task or Workflow at the needed time. Backup Jobs are a special type of Job configured in the Backups section which also use Execute Schedules to time backup runs as needed.

Schedules use CRON expressions. For example, 0 23 * * 2 translates to “Executes every week on Tuesday at 23:00”. CRON expressions can also be built by clicking a highlighted value in the translation shown below the Schedule field in the create or edit Execution Schedule modal and selecting a new value.

Note

Execute Schedule CRON expressions should not include seconds or years. The days of the week should be numbered 1-7, beginning with Monday and ending with Sunday. SUN-SAT notation may also be used. For more on writing CRON expressions, many guides are available on the Internet. HPE Morpheus Enterprise execution schedules support most cron syntax, but certain more complex expressions may fail to evaluate, in which case the execute schedule will not save. Additionally, some complex expressions may save and work correctly even though the friendly written evaluation below the SCHEDULE field is not interpreted correctly. This is due to an issue with the underlying library used to build this feature and cannot easily be resolved at this time.
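The two rules in the note (no seconds or years, and days of the week given as 1-7 or as day names) can be checked before an expression is entered. The Python sketch below is a simplified pre-check written for illustration; it is not the parser the product uses, the function name `check_schedule` is hypothetical, and it only inspects the field count and the day-of-week field.

```python
DAY_NAMES = {"MON", "TUE", "WED", "THU", "FRI", "SAT", "SUN"}


def check_schedule(expression):
    """Return a list of problems found by a few simplified checks (empty if none)."""
    fields = expression.split()
    # Five fields: minute, hour, day of month, month, day of week.
    if len(fields) != 5:
        return [f"expected 5 fields (no seconds or years), found {len(fields)}"]
    problems = []
    for part in fields[4].split(","):        # lists such as 1,3,5
        base = part.split("/")[0]            # drop a step suffix such as */2
        for token in base.split("-"):        # ranges such as 1-5 or MON-FRI
            if token == "*" or token.upper() in DAY_NAMES:
                continue
            if not (token.isdigit() and 1 <= int(token) <= 7):
                problems.append(f"day-of-week value '{token}' is not 1-7 or a day name")
    return problems


for candidate in ("0 23 * * 2", "0 0 23 * * 2", "0 9 * * 0"):
    print(candidate, "->", check_schedule(candidate) or "ok")
```

An expression that passes these checks may still be rejected when saved, since the note above explains that some complex syntax is not supported.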

Create Execution Schedules¶

- NAME

Name of the Execution Schedule

Note

When assigning Execution Schedules, the name value will appear in the selection drop-down. Using a name that references the time interval is often helpful

- DESCRIPTION

Description of the Execution Schedule for reference in the Execution Schedules list

- VISIBILITY

Master Tenant administrators may share Execute Schedules with Subtenants by setting the visibility to Public

- TIME ZONE

The time zone for execution

- Enabled

Check to enable the schedule. Uncheck to disable all associated executions and remove the schedule as an option for Jobs in the future

- SCHEDULE

Enter CRON expression for the Execution Schedule, for example 0 0 * * * equals Every day at 00:00

- SCHEDULE TRANSLATION

The entered CRON schedule is translated below the SCHEDULE field. Highlighted values can be updated by selecting the value, and relevant options will be presented. The CRON expression will automatically be updated